The First Step In Data Streaming

Streaming First As Data Architecture

Identifying a concrete pain point is the key to starting your real-time data streaming journey, as Tim Berglund, VP of Developer Relations at Confluent, shares. Data streaming involves six main steps, of which the first two are:

1. Data production. The first step in the process is when data is generated and sent from various sources such as IoT devices, web applications, or social media platforms.
2. Data ingestion. In the second step, the data is received and stored by an ingestion layer such as a streaming platform or message broker.
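The production and ingestion steps above can be sketched with nothing but the Python standard library. This is a minimal illustration, not a production setup: a `queue.Queue` stands in for the message broker, and the names `produce`, `ingest`, `run_pipeline`, and the source labels are all hypothetical.

```python
import json
import queue
import threading
import time


def produce(source_id: str, count: int, buffer: queue.Queue) -> None:
    """Data production: emit `count` JSON-encoded events from one source."""
    for seq in range(count):
        event = {"source": source_id, "seq": seq, "ts": time.time()}
        buffer.put(json.dumps(event))


def ingest(buffer: queue.Queue, expected: int) -> list[dict]:
    """Data ingestion: receive events and store them in arrival order."""
    stored = []
    while len(stored) < expected:
        # Block until the next event arrives; fail after 5 s of silence.
        stored.append(json.loads(buffer.get(timeout=5)))
    return stored


def run_pipeline(sources: list[str], events_per_source: int) -> list[dict]:
    """Start one producer thread per source and ingest everything they send."""
    buffer: queue.Queue = queue.Queue()  # assumption: stand-in for a broker
    producers = [
        threading.Thread(target=produce, args=(s, events_per_source, buffer))
        for s in sources
    ]
    for thread in producers:
        thread.start()
    stored = ingest(buffer, expected=len(sources) * events_per_source)
    for thread in producers:
        thread.join()
    return stored


if __name__ == "__main__":
    events = run_pipeline(["iot-sensor", "web-app"], events_per_source=3)
    print(len(events), "events ingested")
```

Events from different sources may interleave in any order, but each source's own events keep their sequence, which is roughly the guarantee a single ordered channel gives you.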

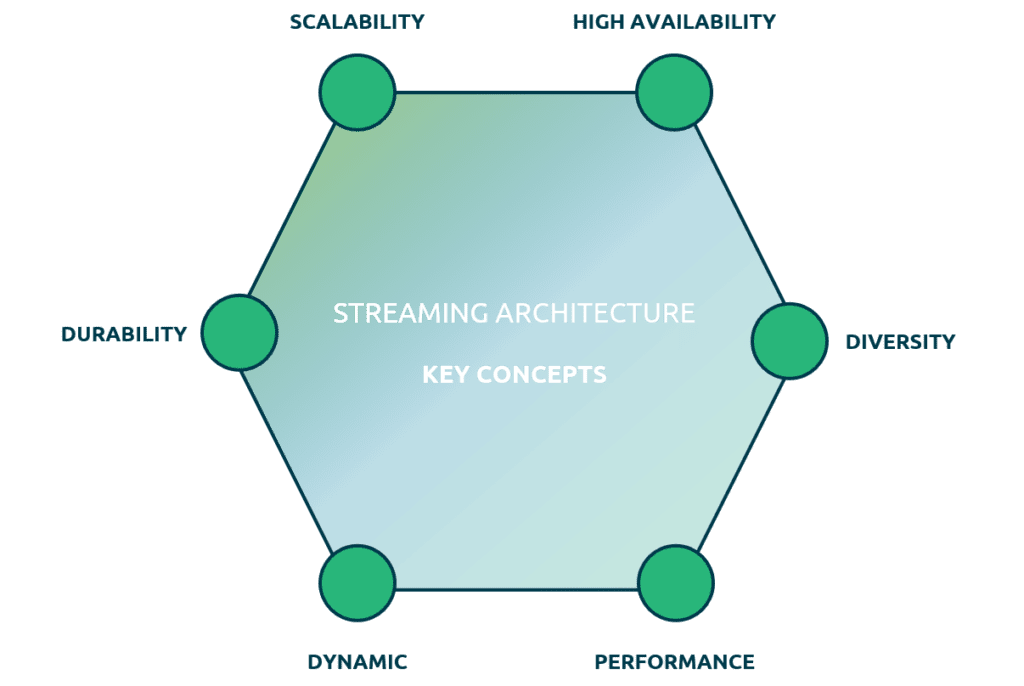

Big Data Streaming

The first step in building out your streaming data architecture is setting up the data ingestion layer or stream processor, using tools that can handle diverse and distributed data sources with high throughput and low latency.

Data streaming is when small pieces of data are sent continuously, typically through multiple channels. Over time, the volume often amounts to terabytes, far too much to evaluate manually. A data stream is the continuous flow of data generated by various sources in real time. It plays a crucial role in modern technology, enabling applications to process and analyze information as it arrives, leading to timely insights and actions.

The first step in a streaming data pipeline is where information enters the pipeline. One very popular platform is Apache Kafka, a powerful open-source tool used by thousands of companies.
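To make the idea of an ingestion layer more concrete, here is a toy, in-memory model of an append-only log in which every record gets a position (an offset) and readers fetch from whatever offset they choose. Kafka organizes records in a broadly similar way, but this sketch is not Kafka's API: `ToyLog`, `append`, and `read` are hypothetical names chosen for illustration.

```python
from dataclasses import dataclass, field


@dataclass
class ToyLog:
    """In-memory append-only log; a toy stand-in for an ingestion layer."""

    records: list = field(default_factory=list)

    def append(self, record: str) -> int:
        """Store a record at the end of the log and return its offset."""
        self.records.append(record)
        return len(self.records) - 1

    def read(self, offset: int, max_records: int = 10) -> list:
        """Return up to `max_records` records starting at `offset`."""
        return self.records[offset:offset + max_records]


if __name__ == "__main__":
    log = ToyLog()
    for reading in ["temp=21.5", "temp=21.7", "temp=22.0"]:
        log.append(reading)
    # Reading does not remove anything, so independent readers can each
    # start from their own offset.
    print(log.read(0))
    print(log.read(2))
```

Because reads never delete records, several downstream consumers can process the same data at their own pace, each remembering only the offset it has reached.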

Data Streaming: Benefits, Use Cases, Components, Examples (Estuary)

With Kafka and associated tools, developers can create stream processing pipelines that transform data for real-time applications. Real-time data streaming involves processing and analyzing data as it arrives. The core concept is to receive data from a producer, buffer it temporarily (for example in Apache Kafka), and then process it in real time using a stream processing framework such as Apache Flink.

This guide introduces data streaming from a data science perspective. We'll explain what it is, why it matters, and how to use tools like Apache Kafka, Apache Flink, and PyFlink to build real-time pipelines.

In a streaming data pipeline, data production is the starting point. It gathers information from various sources, such as devices, apps, and sensors, with the goal of capturing this data quickly and sending it along for further processing.
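The "process it as it arrives" part can be illustrated with a tumbling-window average written as a plain Python generator. This is a sketch of the general technique that stream processors offer, not Flink or Kafka code; the function name `tumbling_window_avg` and the `(timestamp, value)` event shape are assumptions, and the events are assumed to arrive in timestamp order.

```python
from typing import Iterable, Iterator


def tumbling_window_avg(
    events: Iterable[tuple[float, float]], window_seconds: float
) -> Iterator[tuple[float, float]]:
    """Yield (window_start, average) each time a window closes.

    Assumes `events` are (timestamp, value) pairs in timestamp order.
    """
    current_start = None
    total, count = 0.0, 0
    for ts, value in events:
        # Align the timestamp to the start of its fixed-size window.
        start = (ts // window_seconds) * window_seconds
        if current_start is not None and start != current_start:
            # A new window began, so emit the result for the previous one.
            yield current_start, total / count
            total, count = 0.0, 0
        current_start = start
        total += value
        count += 1
    if count:
        # Flush the last, still-open window when the input ends.
        yield current_start, total / count


if __name__ == "__main__":
    readings = [(0.0, 10.0), (1.0, 20.0), (5.0, 30.0), (11.0, 50.0)]
    for window_start, avg in tumbling_window_avg(readings, window_seconds=5.0):
        print(window_start, avg)
```

Because the function is a generator, results are emitted as soon as each window closes rather than after the whole input has been read, which is the essential difference from batch processing.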
