Stream Processing Simplified

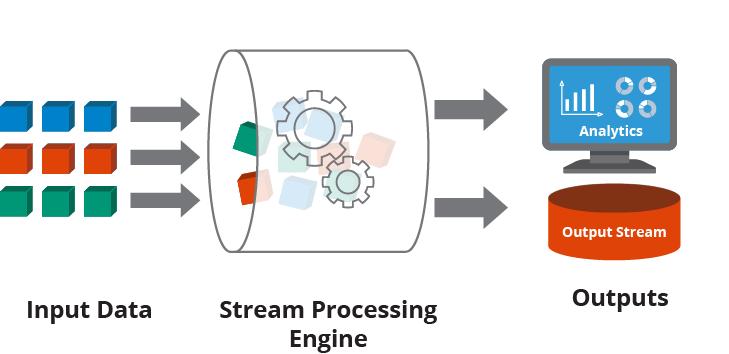

Stream processing is a data management technique that ingests a continuous data stream and analyzes, filters, transforms, or enhances it in real time. Once processed, the data is passed to an application, a data store, or another stream processing engine.
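The ingest, filter, transform, and enhance stages described above can be sketched with plain Python generators. This is a minimal illustration, not how any particular engine implements it: the sensor events, the field names (`sensor`, `temp_c`, `temp_f`, `location`), and all function names are assumptions made up for the example.

```python
from typing import Dict, Iterable, Iterator

Event = Dict[str, object]  # hypothetical event shape used only in this sketch


def ingest(raw: Iterable[Event]) -> Iterator[Event]:
    """Yield events one at a time, standing in for a live source."""
    for event in raw:
        yield event


def keep_valid(events: Iterable[Event]) -> Iterator[Event]:
    """Filter: drop events that carry no temperature reading."""
    for event in events:
        if event.get("temp_c") is not None:
            yield event


def to_fahrenheit(events: Iterable[Event]) -> Iterator[Event]:
    """Transform: add a Fahrenheit field derived from the Celsius one."""
    for event in events:
        yield {**event, "temp_f": event["temp_c"] * 9 / 5 + 32}


def enrich(events: Iterable[Event], locations: Dict[str, str]) -> Iterator[Event]:
    """Enhance: attach a location looked up from reference data."""
    for event in events:
        yield {**event, "location": locations.get(event["sensor"], "unknown")}


def run_pipeline(raw: Iterable[Event], locations: Dict[str, str]) -> Iterator[Event]:
    """Chain the stages; each event flows through as soon as it arrives."""
    return enrich(to_fahrenheit(keep_valid(ingest(raw))), locations)


if __name__ == "__main__":
    readings = [
        {"sensor": "a", "temp_c": 20.0},
        {"sensor": "b", "temp_c": None},
        {"sensor": "c", "temp_c": 100.0},
    ]
    # The loop body plays the role of the downstream application or data store.
    for result in run_pipeline(readings, {"a": "lobby"}):
        print(result)
```

Because every stage is a generator, no stage waits for the full input before producing output, which is the property that distinguishes this from collecting the readings first and processing them afterwards.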

Stream processing has emerged as an essential component of the modern data architecture stack, with applications ranging from Apache Kafka pipelines to real-time analytics. It lets developers run continuous aggregation queries on real-time data, and it has practical use cases across many industries.

Stream processing is a method of handling data continuously and in real time as it is generated, rather than processing batches of stored data after a delay. It focuses on analyzing and acting on data immediately, often using tools designed for high-throughput, low-latency scenarios. It relies on a streamlined architecture that keeps the whole process fast and minimizes lag between processing steps, which addresses several challenges that batch processing faces.
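A continuous aggregation query of the kind mentioned above can be approximated in a few lines of Python. The sketch below counts events per key in fixed, non-overlapping (tumbling) time windows and emits each window's result as soon as a later window begins. It assumes events arrive as `(timestamp_seconds, key)` pairs in timestamp order; the function name `tumbling_counts` and the event shape are hypothetical, and real engines additionally handle late and out-of-order data.

```python
from collections import Counter
from typing import Dict, Iterable, Iterator, Tuple


def tumbling_counts(
    events: Iterable[Tuple[int, str]], window_s: int
) -> Iterator[Tuple[int, Dict[str, int]]]:
    """Yield (window_start, counts_per_key) for each non-empty window.

    Assumes events are ordered by timestamp. Windows that receive
    no events are skipped rather than emitted as empty results.
    """
    current_start = None
    counts: Counter = Counter()
    for ts, key in events:
        start = ts - ts % window_s  # start of the window this event falls in
        if current_start is None:
            current_start = start
        elif start != current_start:
            # A later window has begun, so the previous one is complete.
            yield current_start, dict(counts)
            counts = Counter()
            current_start = start
        counts[key] += 1
    if current_start is not None:
        # Flush the final window once the (finite) input ends.
        yield current_start, dict(counts)


if __name__ == "__main__":
    clicks = [(0, "click"), (3, "view"), (9, "click"), (10, "click"), (25, "view")]
    for window_start, per_key in tumbling_counts(clicks, window_s=10):
        print(window_start, per_key)
```

On an unbounded stream the final flush never runs; results simply keep arriving one window at a time, which is what makes the query "continuous".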

Unlike traditional batch processing, where data is collected and processed in chunks, stream processing works on the data as it is produced. Once processed, the results can be passed to an application, a data store, or another stream processing engine to provide actionable insights quickly.

This article examines how stream processing enables these use cases, what challenges it presents, and what the future might look like, especially as AI starts playing a bigger role in how streaming data is processed and analyzed.

Stream processing is also described as a big data technology focused on the real-time processing of continuous streams of data in motion. A stream processing framework simplifies parallel hardware and software by restricting the parallel computation that can be performed.
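The contrast with batch processing can be made concrete with a small Python sketch. The batch version needs the complete collection before it can answer; the streaming version keeps a small amount of state and emits an updated answer after every value. Both function names are illustrative assumptions, not part of any library.

```python
from typing import Iterable, Iterator, List


def batch_mean(values: List[float]) -> float:
    """Batch style: requires the full, already-collected dataset."""
    return sum(values) / len(values)


def running_mean(values: Iterable[float]) -> Iterator[float]:
    """Streaming style: emit the mean so far after each new value."""
    total = 0.0
    count = 0
    for value in values:
        total += value
        count += 1
        yield total / count


if __name__ == "__main__":
    data = [2.0, 4.0, 6.0]
    print(batch_mean(data))
    for mean_so_far in running_mean(data):
        print(mean_so_far)
```

The streaming version stores only a running total and a count, so its memory use does not grow with the length of the stream.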
