Spark Architecture: Apache Spark and Apache Hadoop

Hadoop vs. Apache Spark

This document discusses Apache Spark, an open-source cluster computing framework for real-time data processing. Because Spark performs cluster computing in memory, it can be up to 100 times faster than Hadoop MapReduce on some large-scale workloads. Spark was motivated by:
- the difficulty of programming directly in Hadoop MapReduce;
- MapReduce performance bottlenecks, and use cases that do not fit the batch model;
- the need for better support of iterative jobs, which are typical in machine learning.
For comparison, a word count implementation in Hadoop MapReduce takes 61 lines of Java.
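The map, shuffle, and reduce steps behind that word count can be sketched in a few lines of plain Python. This is a single-process illustration, not the Hadoop Java API: the function names `map_phase`, `shuffle_phase`, and `reduce_phase` are hypothetical, and a real job would run mappers and reducers on different nodes, with the shuffle moving data over the network.

```python
from collections import defaultdict
from itertools import chain


def map_phase(line):
    # Mapper: emit a (word, 1) pair for every word in the input line.
    return [(word, 1) for word in line.lower().split()]


def shuffle_phase(pairs):
    # Shuffle/sort: group together all values emitted for the same key.
    groups = defaultdict(list)
    for key, value in pairs:
        groups[key].append(value)
    return groups


def reduce_phase(groups):
    # Reducer: sum the counts collected for each word.
    return {word: sum(counts) for word, counts in groups.items()}


def word_count(lines):
    # Run every line through the mapper, then shuffle and reduce.
    mapped = chain.from_iterable(map_phase(line) for line in lines)
    return reduce_phase(shuffle_phase(mapped))


if __name__ == "__main__":
    text = ["spark is fast", "hadoop is scalable", "spark is general"]
    print(word_count(text))
```

In Spark, the same pipeline is commonly written as a short chain of RDD operations (`flatMap`, `map`, `reduceByKey`), which is why a Spark word count is usually only a few lines long.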

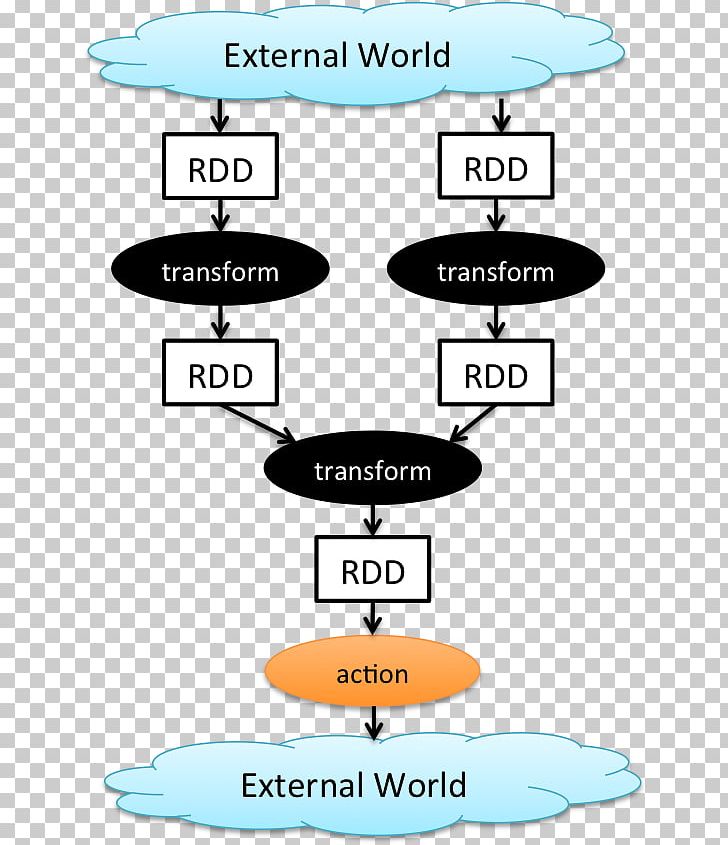

What Is Spark?

Apache Spark is a fast, expressive, and efficient cluster computing engine that is compatible with Apache Hadoop. It is an open-source big data processing framework built around speed, ease of use, and sophisticated analytics:
- a general data processing engine compatible with Hadoop data;
- used to query, analyze, and transform data;
- developed in 2009 at the AMPLab at the University of California, Berkeley.

To achieve this while maximizing flexibility, Spark can run over a variety of cluster managers, including Hadoop YARN, Apache Mesos, and a simple cluster manager included in Spark itself called the Standalone Scheduler. Apache Spark has a well-defined layered architecture designed around two main abstractions:
- Resilient Distributed Dataset (RDD): an immutable (read-only) collection of elements, partitioned across the cluster, that can be operated on in parallel;
- Directed Acyclic Graph (DAG): the graph of operations that Spark builds from a job's transformations and then schedules for execution.
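The RDD properties listed above (immutable, partitioned, operated on in parallel) and the split between lazy transformations and actions can be illustrated with a toy class. This is a hypothetical, single-process sketch, not Spark's `RDD` class: `ToyRDD` records transformations in a `lineage` tuple and only computes when an action (`collect`, `count`) is called. Real Spark records lineage as a DAG, distributes partitions across executors, and can use lineage to recompute lost partitions.

```python
from dataclasses import dataclass
from typing import Any, Callable


@dataclass(frozen=True)
class ToyRDD:
    """Hypothetical single-process stand-in for an RDD (not Spark's class)."""

    partitions: tuple  # source data, already split into partitions
    lineage: tuple = ()  # recorded (operation, function) pairs, applied lazily

    # Transformations: return a NEW ToyRDD and compute nothing yet.
    def map(self, f: Callable[[Any], Any]) -> "ToyRDD":
        return ToyRDD(self.partitions, self.lineage + (("map", f),))

    def filter(self, pred: Callable[[Any], bool]) -> "ToyRDD":
        return ToyRDD(self.partitions, self.lineage + (("filter", pred),))

    def _compute(self, partition) -> list:
        # Replay the recorded lineage over one partition.
        items = list(partition)
        for op, fn in self.lineage:
            if op == "map":
                items = [fn(x) for x in items]
            else:  # "filter"
                items = [x for x in items if fn(x)]
        return items

    # Actions: trigger the computation and return a result to the caller.
    def collect(self) -> list:
        return [x for part in self.partitions for x in self._compute(part)]

    def count(self) -> int:
        return sum(len(self._compute(part)) for part in self.partitions)


if __name__ == "__main__":
    base = ToyRDD(partitions=((1, 2, 3), (4, 5, 6)))
    evens_squared = base.filter(lambda x: x % 2 == 0).map(lambda x: x * x)
    print(evens_squared.collect())  # [4, 16, 36]
    print(base.collect())  # [1, 2, 3, 4, 5, 6]
```

Because `filter` and `map` return new objects, `base` is never modified. Recording the lineage instead of materializing intermediate results is what lets the computation be replayed on demand.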

Spark Architecture

Spark's architecture consists of four components: the Spark driver, the executors, the cluster manager, and the worker nodes. It uses Datasets and DataFrames as its core data abstractions, which allow Spark to optimize processing and big data computations.

What Is Hadoop?

Hadoop is a scalable, fault-tolerant distributed system for data storage and processing. Core Hadoop consists of:
- Hadoop Distributed File System (HDFS);
- Hadoop YARN: job scheduling and cluster resource management;
- Hadoop MapReduce: a framework for distributed data processing.
hadoop.apac.

Brief History of Apache Spark

Origins at UC Berkeley (2009-2010): Spark was developed in 2009 by a team at UC Berkeley's AMPLab, led by Matei Zaharia. It was designed as a faster alternative to Hadoop's MapReduce, focusing on in-memory processing to speed up iterative tasks.

In this lecture you will learn:
- what Spark is and its main features;
- the components of the Spark stack;
- the high-level Spark architecture;
- the notion of a Resilient Distributed Dataset (RDD);
- the main transformations and actions on RDDs.

Apache Spark is a distributed computing framework designed to be fast and general-purpose.
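The division of labor between the driver and the executors can be sketched with Python's standard `concurrent.futures` module. This is an analogy under stated assumptions, not Spark's scheduler: a thread pool stands in for the executors that the cluster manager would launch on worker nodes, and `split_into_partitions`, `task`, and `run_job` are hypothetical names. The driver splits the data into partitions, runs the same task on every partition, and combines the partial results.

```python
from concurrent.futures import ThreadPoolExecutor


def split_into_partitions(data, num_partitions):
    """Driver side: divide the input list into at most num_partitions chunks."""
    if not data:
        return []
    num_partitions = max(1, num_partitions)
    size = -(-len(data) // num_partitions)  # ceiling division
    return [data[i:i + size] for i in range(0, len(data), size)]


def task(partition):
    """Executor side: the same function runs on every partition independently."""
    return sum(x * x for x in partition)


def run_job(data, num_executors=2, num_partitions=4):
    partitions = split_into_partitions(data, num_partitions)
    # A thread pool stands in for executors on worker nodes; in a real
    # deployment the cluster manager would allocate those executors.
    with ThreadPoolExecutor(max_workers=num_executors) as executors:
        partial_results = list(executors.map(task, partitions))
    # Driver side: combine the per-partition results into the final answer.
    return sum(partial_results)


if __name__ == "__main__":
    print(run_job(list(range(1, 11))))
```

`run_job(list(range(1, 11)))` returns 385, the sum of the squares of 1 through 10. Keeping the per-partition work independent is what allows it to be spread over any number of executors.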
