Solved 15 Let X1 Xn Be Independent Random Variables Having Chegg

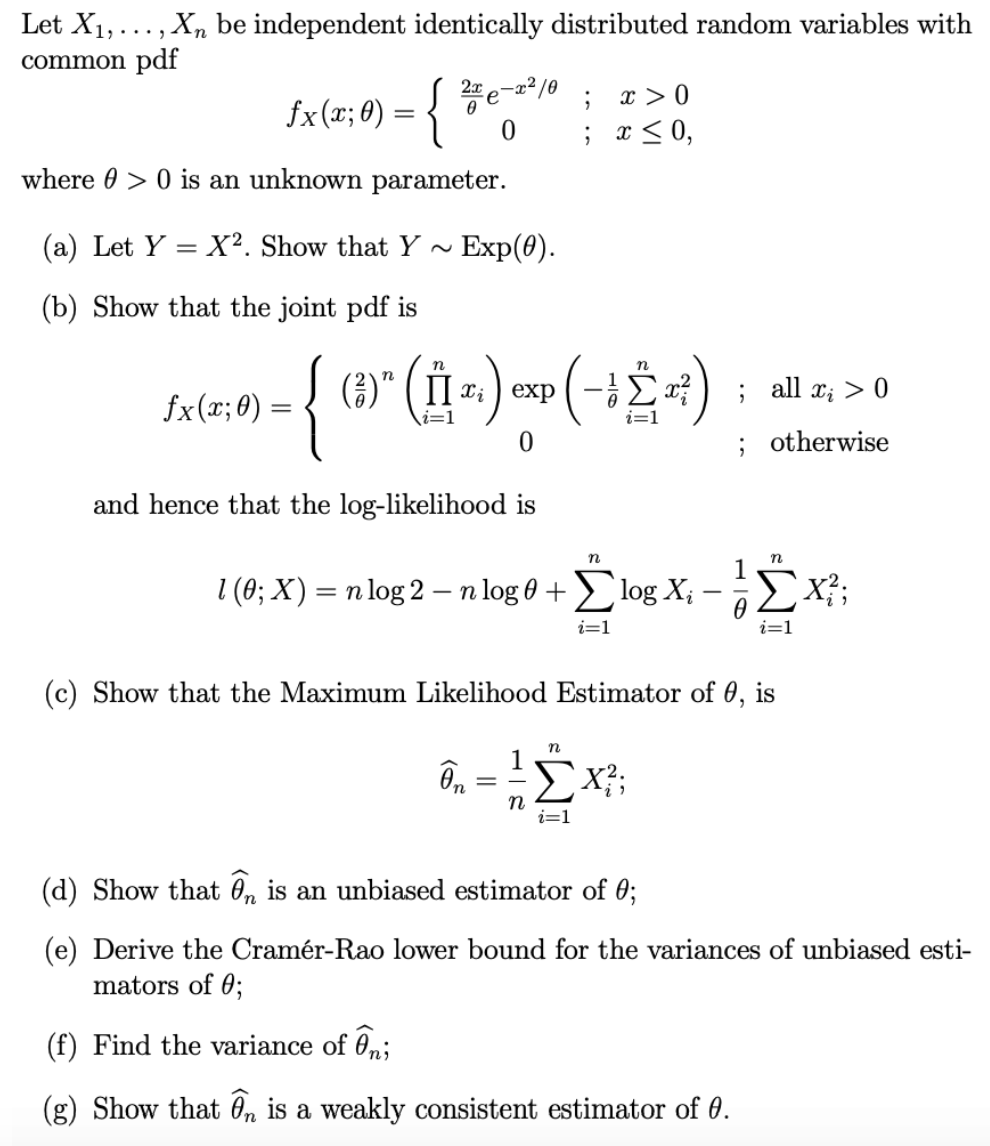

15. Let $X_1, \dots, X_n$ be independent random variables having a common density with mean $\mu$ and variance $\sigma^2$. Set $\bar{X} = (X_1 + \cdots + X_n)/n$.

(a) By writing $X_k - \bar{X} = (X_k - \mu) - (\bar{X} - \mu)$, show that

$$\sum_{k=1}^{n} (X_k - \bar{X})^2 = \sum_{k=1}^{n} (X_k - \mu)^2 - n(\bar{X} - \mu)^2.$$

(b) Conclude from (a) that

$$E\left(\sum_{k=1}^{n} (X_k - \bar{X})^2\right) = (n-1)\sigma^2.$$

Exercise. Verify this by constructing non-trivial (i.e., non-constant) random variables for which Theorem 2 and Theorem 1 are tight, i.e., hold with equality.
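The identity in part (a) and the expectation in part (b) of Problem 15 can be checked exactly on a small example. The sketch below is only an illustration, not a proof: it assumes a fair six-sided die as the common distribution (a discrete stand-in, since the algebra does not depend on having a density), and the function names `centered_sum_of_squares`, `identity_rhs`, and `expected_centered_ss` are hypothetical helpers introduced here.

```python
from fractions import Fraction
from itertools import product


def centered_sum_of_squares(xs):
    """Left-hand side of (a): sum of (x_k - xbar)^2."""
    n = len(xs)
    xbar = sum(xs) / n
    return sum((x - xbar) ** 2 for x in xs)


def identity_rhs(xs, mu):
    """Right-hand side of (a): sum of (x_k - mu)^2 minus n * (xbar - mu)^2."""
    n = len(xs)
    xbar = sum(xs) / n
    return sum((x - mu) ** 2 for x in xs) - n * (xbar - mu) ** 2


def expected_centered_ss(support, probs, n):
    """Exact E[sum (X_k - Xbar)^2] for n i.i.d. draws from a finite distribution,
    computed by enumerating every possible sample of size n."""
    total = Fraction(0)
    for idx in product(range(len(support)), repeat=n):
        weight = Fraction(1)
        for i in idx:
            weight *= probs[i]
        total += weight * centered_sum_of_squares([support[i] for i in idx])
    return total


# Assumed example distribution: a fair six-sided die, with n = 3 draws.
support = [Fraction(v) for v in range(1, 7)]
probs = [Fraction(1, 6)] * 6
mu = sum(p * x for p, x in zip(probs, support))
sigma2 = sum(p * (x - mu) ** 2 for p, x in zip(probs, support))
n = 3

sample = [Fraction(1), Fraction(4), Fraction(6)]
print(centered_sum_of_squares(sample) == identity_rhs(sample, mu))  # part (a)
print(expected_centered_ss(support, probs, n) == (n - 1) * sigma2)  # part (b)
```

Exact rational arithmetic via `fractions.Fraction` is used so that both comparisons are true equalities rather than floating-point approximations.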

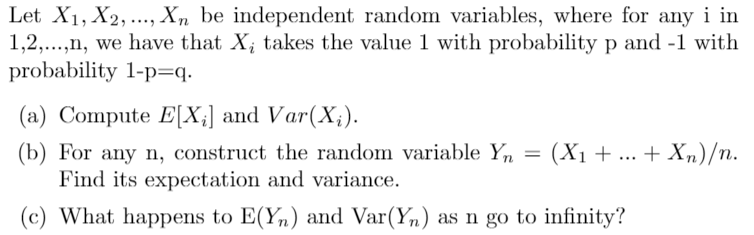

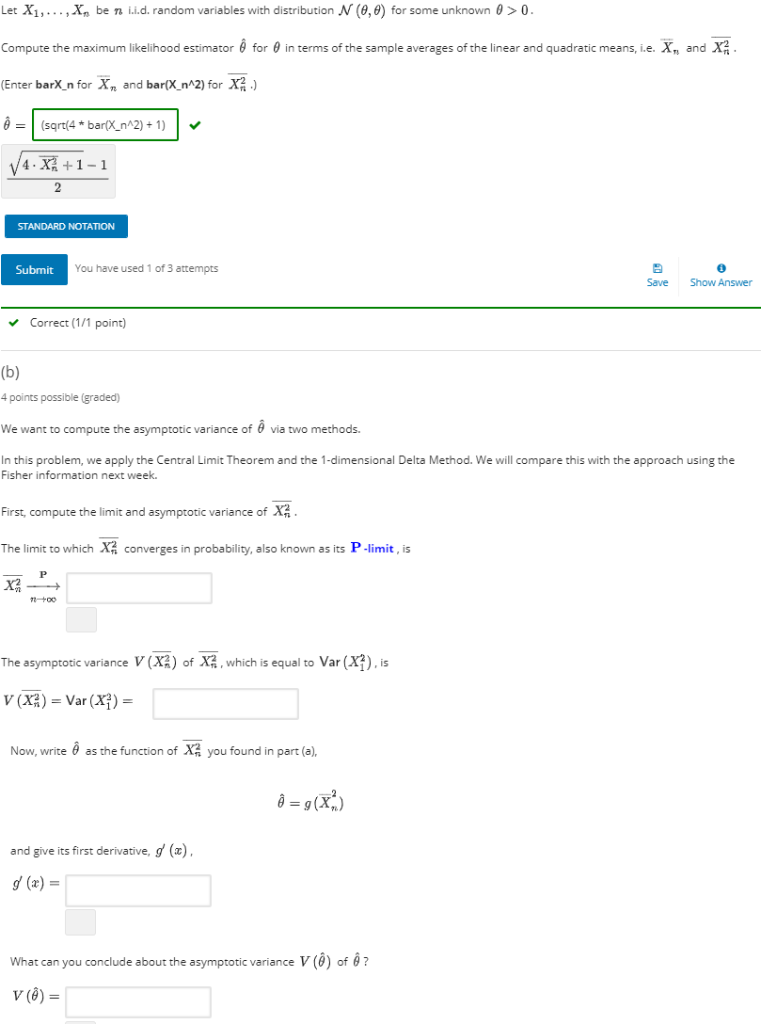

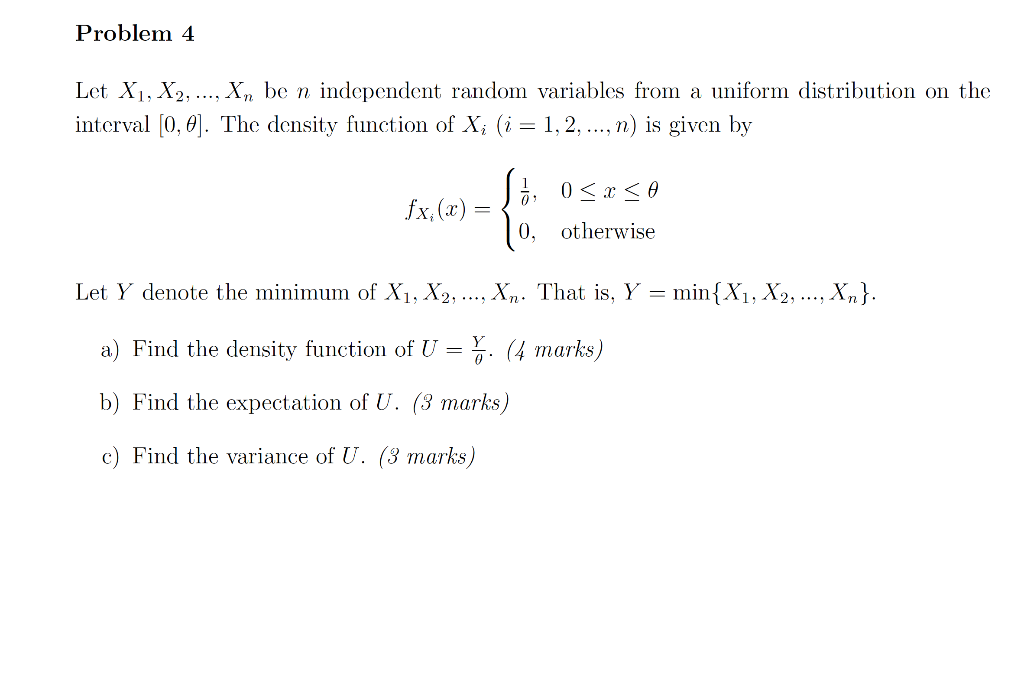

Show that $X_1, \dots, X_n$ are independent. I know that if $X$ and $Y$ are independent random variables, and $g(\cdot)$ and $h(\cdot)$ are measurable and real-valued, then $g(X)$ and $h(Y)$ are independent. How can I use this result for my current problem?

$$f(x) = \frac{\Gamma\left(\frac{n+1}{2}\right)}{\sqrt{n\pi}\,\Gamma\left(\frac{n}{2}\right)} \left(1 + \frac{x^2}{n}\right)^{-\frac{n+1}{2}}, \qquad -\infty < x < \infty.$$

Let $X \sim t_n$. Then $E(X) = 0$ and $\operatorname{Var}(X) = \frac{n}{n-2}$ (for $n > 2$). The $t$ distribution is similar to the standard normal distribution $N(0, 1)$, but it has heavier tails. However, as $n \to \infty$ the $t$ distribution converges to $N(0, 1)$ (see graph below).

Problem. Let $X_1, X_2, X_3, \dots$ be a sequence of i.i.d. $\mathrm{Uniform}(0, 1)$ random variables. Define the sequence $Y_n$ as $Y_n = \min(X_1, X_2, \dots, X_n)$. Prove the following convergence results independently (i.e., do not conclude the weaker convergence modes from the stronger ones): $Y_n \xrightarrow{d} 0$, …

Let the mutually independent random variables $X_1$, $X_2$, and $X_3$ be $N(0, 1)$, $N(2, 4)$, and $N(-1, 1)$, respectively. Compute the probability that exactly two of these three variables are less than zero.
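For the last problem above (three independent normals), the arithmetic can be sketched numerically. This is a minimal sketch under one stated assumption: the second parameter in $N(2, 4)$ is read as the variance, so the standard deviation is 2. The helper name `prob_exactly_two_below_zero` is hypothetical.

```python
from statistics import NormalDist


def prob_exactly_two_below_zero(dists):
    """P(exactly two of three independent variables are < 0)."""
    p = [d.cdf(0.0) for d in dists]  # P(X_i < 0) for each variable
    total = 0.0
    for skip in range(3):
        # 'skip' is the index of the single variable that is NOT below zero.
        term = 1.0
        for i in range(3):
            term *= (1.0 - p[i]) if i == skip else p[i]
        total += term
    return total


# NormalDist takes (mean, standard deviation); N(2, 4) is assumed to mean variance 4.
dists = [NormalDist(0, 1), NormalDist(2, 2), NormalDist(-1, 1)]
result = prob_exactly_two_below_zero(dists)
print(round(result, 4))
```

Because the three variables are independent, each of the three "exactly two" events is a product of marginal probabilities, and the events are disjoint, so their probabilities add. Under the variance reading this gives roughly 0.433.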

Write down the likelihood as a function of the observed data $x_1, \dots, x_n$ and the unknown parameter $p$. Compute the MLE of $p$. In order to do this you need to find a zero of the derivative of the likelihood, and also check that the second derivative of the likelihood at that point is negative. Compute the method of moments estimator for $p$.

Let $X_1, \dots, X_n$ be independent random variables having a common distribution function that is specified up to an unknown parameter $\theta$. Let $T = T(X)$ be a function of the data $X = (X_1, \dots, X_n)$. If the conditional distribution of $X_1, \dots, X_n$ given $T(X)$ does not depend on $\theta$, then $T(X)$ is said to be a sufficient statistic for $\theta$. Let the $X_i$'s be Poisson random variables.

Exercise 4.15: Let $X_1, \dots, X_n$ be independent random variables such that $\Pr(X_i = 1 - p_i) = p_i$ and $\Pr(X_i = -p_i) = 1 - p_i$. Let $X = \sum_{i=1}^{n} X_i$. Prove

$$\Pr(|X| \ge a) \le 2e^{-2a^2/n}.$$

Hint: you may need to assume the inequality

$$p_i e^{\lambda(1 - p_i)} + (1 - p_i) e^{-\lambda p_i} \le e^{\lambda^2/8}.$$

This inequality is difficult to prove directly.

Let $X_1, X_2, \dots, X_n$ be i.i.d. random variables with expected value $E X_i = \mu < \infty$ and variance 0
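The tail bound in Exercise 4.15 can be sanity-checked numerically in a special case. The sketch below assumes all $p_i$ equal a common value $p$ (a simplification not made in the exercise), so that $X = B - np$ with $B \sim \mathrm{Binomial}(n, p)$ and the tail probability can be summed exactly. The function names `exact_tail` and `tail_bound` are hypothetical, and a numerical check of this kind is not a substitute for the proof.

```python
import math


def exact_tail(n, p, a):
    """Pr(|X| >= a) where X = B - n*p and B ~ Binomial(n, p).

    Assumes every p_i equals the same p, so each X_i is a centered Bernoulli(p).
    """
    total = 0.0
    for k in range(n + 1):
        if abs(k - n * p) >= a:
            total += math.comb(n, k) * p**k * (1 - p) ** (n - k)
    return total


def tail_bound(n, a):
    """Right-hand side of the exercise: 2 * exp(-2 a^2 / n)."""
    return 2.0 * math.exp(-2.0 * a * a / n)


# Illustrative parameters chosen for this sketch only.
n, p = 50, 0.3
for a in (1, 5, 10):
    print(a, exact_tail(n, p, a), tail_bound(n, a))
```

For small $a$ the bound exceeds 1 and is vacuous; it becomes informative once $a$ is on the order of $\sqrt{n}$ or larger.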

