RuntimeError: Stack Expects Each Tensor to Be Equal Size in PyTorch: How to Resolve It

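RuntimeError: stack expects each tensor to be equal size, but got 3

The proper fix is to make every sample the same shape. As a temporary remedy, if something is urgent or you just want a quick test, set the batch size to 1 so that PyTorch never tries to stack tensors of different shapes. If you resize with torchvision's `Resize` and `size` is an int, the smaller edge of the image will be matched to this number, i.e., if height > width, the image will be rescaled to (size * height / width, size). Because this preserves the aspect ratio, images with different aspect ratios still come out with different shapes; pass a (height, width) sequence as `size` to get one fixed output shape.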
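The sketch below shows the failure, the `batch_size=1` workaround and the fixed-size fix side by side. It is a minimal illustration under stated assumptions: `VariableSizeImages` is a hypothetical toy dataset of random tensors, and resizing uses `torch.nn.functional.interpolate` to an explicit (height, width) instead of torchvision's `Resize`, so it depends only on PyTorch itself. In a torchvision pipeline the equivalent would be passing a tuple such as `(128, 128)` to `Resize`.

```python
import torch
import torch.nn.functional as F
from torch.utils.data import DataLoader, Dataset


class VariableSizeImages(Dataset):
    """Hypothetical toy dataset of 3-channel images with different shapes."""

    def __init__(self, target_size=None):
        # (height, width) pairs with different aspect ratios
        self.shapes = [(128, 128), (106, 128), (150, 200), (90, 120)]
        self.target_size = target_size  # None, or a fixed (height, width)

    def __len__(self):
        return len(self.shapes)

    def __getitem__(self, idx):
        h, w = self.shapes[idx]
        img = torch.rand(3, h, w)
        if self.target_size is not None:
            # interpolate expects a batch dim: add one, resize, then drop it
            img = F.interpolate(img.unsqueeze(0), size=self.target_size,
                                mode="bilinear", align_corners=False).squeeze(0)
        return img


# 1) Default batching fails because torch.stack receives tensors of different shapes
stack_error = None
try:
    next(iter(DataLoader(VariableSizeImages(), batch_size=4)))
except RuntimeError as err:
    stack_error = err
print("default collate failed:", stack_error)

# 2) Quick workaround: with batch_size=1 each batch holds a single image,
#    so nothing of a different shape has to be stacked with it
shapes_bs1 = [tuple(b.shape) for b in DataLoader(VariableSizeImages(), batch_size=1)]
print(shapes_bs1)

# 3) Proper fix: resize every sample to one fixed (height, width)
batch = next(iter(DataLoader(VariableSizeImages(target_size=(128, 128)), batch_size=4)))
print(batch.shape)  # torch.Size([4, 3, 128, 128])
```

The `batch_size=1` route keeps every image at its native resolution but gives up batching; resizing to a fixed shape restores normal batching at the cost of distorting aspect ratios.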

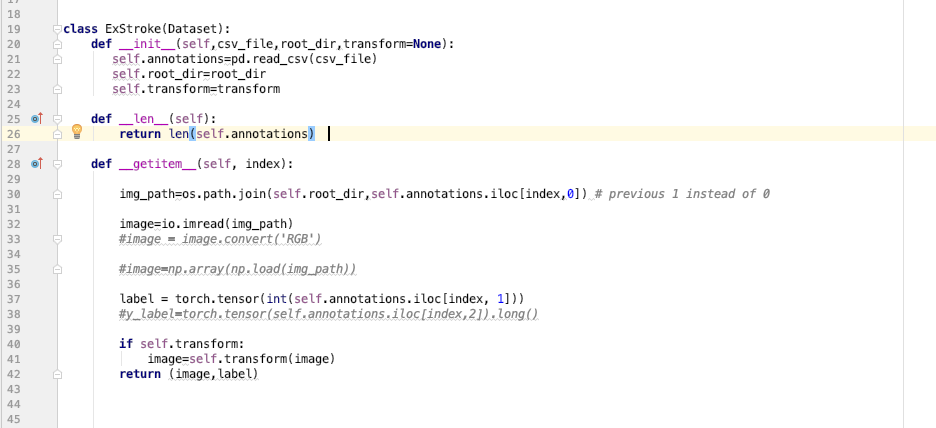

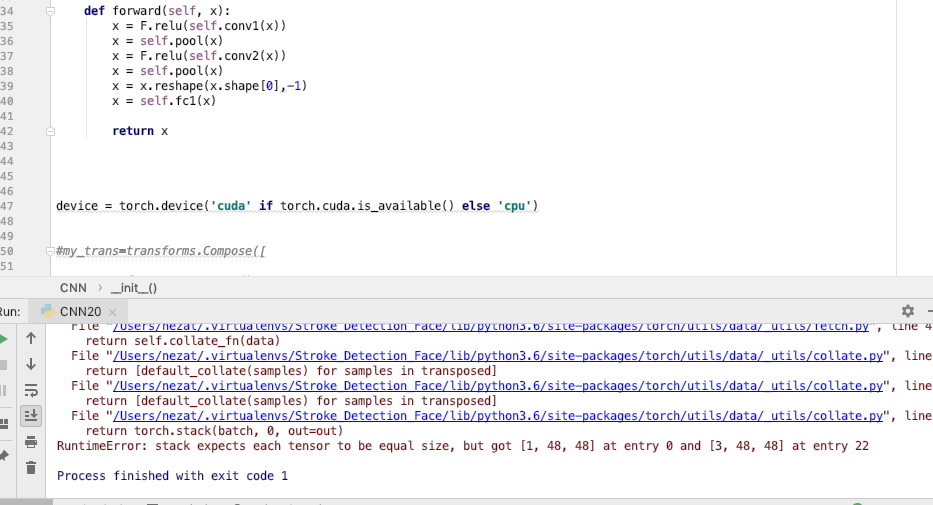

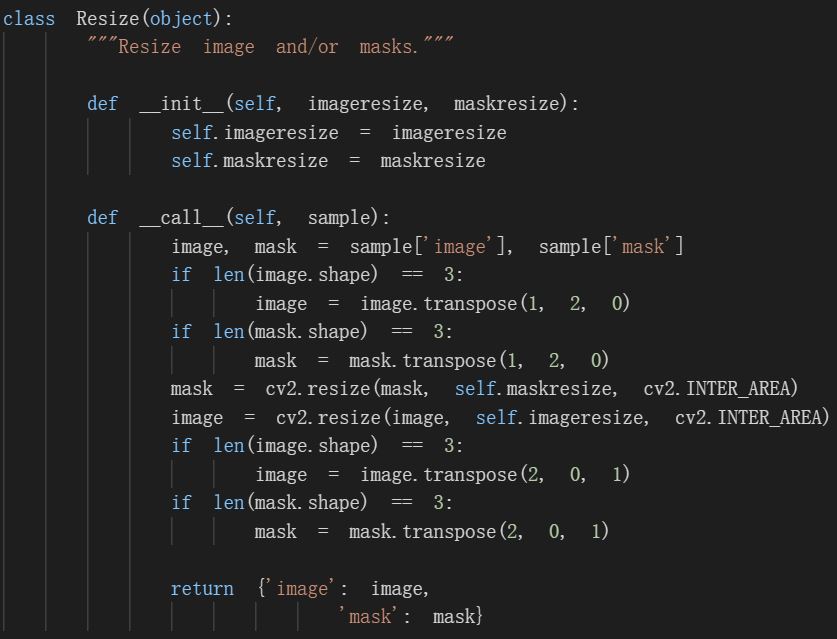

Here I used a patch size of 128, so for 3-channel images every tensor should have had the size [3, 128, 128], but at some point a tensor of size [3, 106, 128] unexpectedly appeared, hence the error. There are generally two possible causes: either the images themselves are the problem, or the way you process them is. The discussion below assumes the problem is in your own code.

This is a common situation when training a convolutional neural network on a dataset whose images have inconsistent dimensions. When batches are built in worker processes, the traceback reads `RuntimeError: Caught RuntimeError in DataLoader worker process 0.`, followed by "The above exception was the direct cause of the following exception:" and, for dictionary-style samples, a message such as `collate error on the key 'fixed image' of dictionary data`.

When PyTorch creates a batch, it stacks `batch_size` samples from your training set into one tensor, and it has no way to tell how to stack images of different sizes. One workaround is to set `batch_size=1` in your `DataLoader` and stack the images yourself in the `__getitem__` method.
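One way a [3, 106, 128] patch can appear in your own code is a crop window that runs past the image border: slicing a tensor does not raise an error there, it simply returns fewer rows. The sketch below assumes that is the cause. `crop_patch` is a hypothetical helper that zero-pads images smaller than the patch and clamps the crop position so every patch comes out as [3, 128, 128]; whether zero padding or shifting the window is acceptable depends on your task.

```python
import torch
import torch.nn.functional as F


def crop_patch(img, top, left, patch_size=128):
    """Hypothetical helper: always return a (C, patch_size, patch_size) patch.

    A naive slice img[:, top:top + p, left:left + p] silently returns a smaller
    patch (e.g. [3, 106, 128]) when it runs past the bottom or right edge.
    """
    _, h, w = img.shape
    # Zero-pad first if the image is smaller than the patch in either dimension
    pad_h = max(patch_size - h, 0)
    pad_w = max(patch_size - w, 0)
    if pad_h or pad_w:
        # F.pad pads the last dim first: (left, right, top, bottom)
        img = F.pad(img, (0, pad_w, 0, pad_h))
        _, h, w = img.shape
    # Clamp the start so the window stays inside the (possibly padded) image
    top = min(max(top, 0), h - patch_size)
    left = min(max(left, 0), w - patch_size)
    return img[:, top:top + patch_size, left:left + patch_size]


img = torch.rand(3, 234, 300)
naive = img[:, 128:256, 0:128]  # runs off the bottom edge of a 234-row image
print(naive.shape)  # torch.Size([3, 106, 128])

patches = [crop_patch(img, 0, 0), crop_patch(img, 128, 0), crop_patch(torch.rand(3, 100, 90), 0, 0)]
batch = torch.stack(patches)  # succeeds: every patch is [3, 128, 128]
print(batch.shape)  # torch.Size([3, 3, 128, 128])
```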

How do I solve this error? Can someone give me a specific example of why this error would happen and how to fix it? Here is the error: `RuntimeError: stack expects each tensor to be equal size, ...`

To fix the error and avoid it in the future, it helps to understand what causes it. By default, `DataLoader` tries to stack the tensors to form a batch (it calls `torch.stack` on the current batch), and this fails if the tensors are not all the same size. With a custom `collate_fn` you can override this behavior and define your own "stacking procedure".

With Opacus, the problem can come from the batch dimension instead. Opacus assumes the first dimension is the batch dimension, and in one forum answer the model broke that assumption by running `permute` in its transformer. The answerer's debugging code showed which layers thought the batch size was 1022 and which thought it was 32.
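For the custom `collate_fn` route described above, here is a minimal sketch, assuming that zero-padding each image at the bottom and right to the largest height and width in the batch is acceptable for your model. `pad_collate` is a hypothetical name, not a PyTorch API. It also returns each sample's original size so the padded region can be masked out later if needed.

```python
import torch
import torch.nn.functional as F
from torch.utils.data import DataLoader


def pad_collate(batch):
    """Hypothetical collate_fn: zero-pad every image to the batch's max H and W, then stack."""
    max_h = max(img.shape[-2] for img in batch)
    max_w = max(img.shape[-1] for img in batch)
    padded = [
        # F.pad pads the last dim first: (left, right, top, bottom)
        F.pad(img, (0, max_w - img.shape[-1], 0, max_h - img.shape[-2]))
        for img in batch
    ]
    # Original (height, width) of each sample, shape (batch, 2)
    sizes = torch.tensor([tuple(img.shape[-2:]) for img in batch])
    return torch.stack(padded), sizes


# A plain list works as a map-style dataset (it has __getitem__ and __len__)
images = [torch.rand(3, 128, 128), torch.rand(3, 106, 128), torch.rand(3, 90, 150)]
loader = DataLoader(images, batch_size=3, collate_fn=pad_collate)
batch, sizes = next(iter(loader))
print(batch.shape)     # torch.Size([3, 3, 128, 150])
print(sizes.tolist())  # [[128, 128], [106, 128], [90, 150]]
```

If your model can consume a list of differently sized tensors, `collate_fn=lambda batch: batch` skips stacking altogether and hands each batch over as a plain Python list.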

