Running Jobs Using Data Interfaces

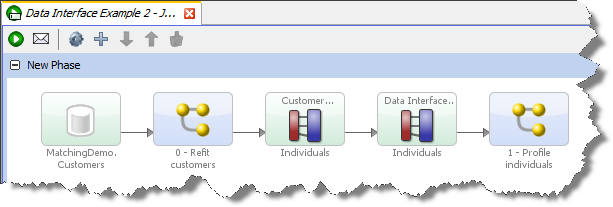

In this example job, a single process both reads from and writes to data interfaces. The user selects mappings that let the process run in real time while also logging its real-time responses to staged data.

On NREL's high-performance computing (HPC) systems, jobs are run through a scheduler. To let multiple users share the system, the HPC systems use the Slurm workload manager as job scheduler and resource manager. Slurm provides commands for job submission, job monitoring, and job control (holding and deleting jobs, and modifying resource requests).
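To make the Slurm submission and job-control commands concrete, here is a minimal Python sketch that renders a batch script and maps each control action to its command line. The `#SBATCH` options and the `sbatch`, `squeue`, `scontrol`, and `scancel` commands are standard Slurm; the job name, the account `myproject`, and the helper names `SlurmJob`, `render_sbatch`, and `control_command` are hypothetical, and sites such as NREL may require additional options, so check the site documentation.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class SlurmJob:
    """Resources requested by one batch job (hypothetical helper; values are placeholders)."""
    name: str
    account: str   # allocation/project to charge; "myproject" below is a placeholder
    time: str      # wall-clock limit as HH:MM:SS
    nodes: int
    command: str   # what the job actually runs


def render_sbatch(job: SlurmJob) -> str:
    """Render a batch script suitable for `sbatch <file>`."""
    lines = [
        "#!/bin/bash",
        f"#SBATCH --job-name={job.name}",
        f"#SBATCH --account={job.account}",
        f"#SBATCH --time={job.time}",
        f"#SBATCH --nodes={job.nodes}",
        "",
        job.command,
    ]
    return "\n".join(lines) + "\n"


def control_command(action: str, job_id: int, time_limit: Optional[str] = None) -> List[str]:
    """Map a monitoring or job-control action to the corresponding Slurm CLI call."""
    jid = str(job_id)
    if action == "monitor":
        return ["squeue", f"--jobs={jid}"]
    if action == "hold":
        return ["scontrol", "hold", jid]
    if action == "release":
        return ["scontrol", "release", jid]
    if action == "delete":
        return ["scancel", jid]
    if action == "modify":
        # Resource-request modification; by default only administrators may
        # raise a job's time limit, while users may lower it.
        if time_limit is None:
            raise ValueError("modify requires a new time limit")
        return ["scontrol", "update", f"JobId={jid}", f"TimeLimit={time_limit}"]
    raise ValueError(f"unknown action: {action}")


if __name__ == "__main__":
    job = SlurmJob("demo", "myproject", "01:00:00", 1, "srun python analyze.py")
    print(render_sbatch(job), end="")
    print(control_command("modify", 12345, "00:30:00"))
    # To submit for real: write the script to job.sh, then
    # subprocess.run(["sbatch", "job.sh"], check=True)
```

Returning argument lists rather than shell strings keeps the commands safe to pass straight to `subprocess.run` without shell quoting.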

A data interface in EDQ (Oracle Enterprise Data Quality) is a template of a set of attributes representing a given entity. It is used to create processes that read from, or write to, interfaces rather than directly from or to the sources or targets of data. A key advantage of data interfaces is their mappings, which let you easily reconfigure processes or jobs to read from different staged data (including snapshots), reference data, or real-time streams (for example, web services).

Data can also be processed or transformed by running a Databricks job in Azure Data Factory pipelines. In Databricks, the Jobs drop-down menu next to the job search field filters jobs by criteria such as jobs with active runs, active runs, jobs with finished runs, and finished runs.
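The idea behind data interfaces and their mappings can be illustrated with a small conceptual model. This is a hypothetical Python sketch, not the EDQ API (EDQ configures interfaces and mappings in its own tooling): `DataInterface`, `ReaderMapping`, `WriterMapping`, `standardize_names`, and `run` are invented names. The process logic refers only to interface attributes, so swapping a staged snapshot for a simulated real-time stream means swapping the mapping, and a writer mapping with two targets mimics returning real-time responses while also logging them to staged data.

```python
from dataclasses import dataclass
from typing import Callable, Dict, Iterable, List, Tuple

Record = Dict[str, object]


@dataclass(frozen=True)
class DataInterface:
    """Template: the attributes a process works with, independent of any source or target."""
    name: str
    attributes: Tuple[str, ...]


@dataclass
class ReaderMapping:
    """Binds an interface to one concrete source (staged data, snapshot, reference data, stream)."""
    interface: DataInterface
    source: Callable[[], Iterable[Record]]
    field_map: Dict[str, str]  # interface attribute -> source field name

    def read(self) -> List[Record]:
        # Rename source fields to the interface's attribute names; extra fields are dropped.
        return [{a: row[self.field_map[a]] for a in self.interface.attributes}
                for row in self.source()]


@dataclass
class WriterMapping:
    """Binds an interface to one or more targets; every record is sent to each target."""
    interface: DataInterface
    targets: List[Callable[[Record], None]]

    def write(self, rows: Iterable[Record]) -> None:
        for row in rows:
            out = {a: row[a] for a in self.interface.attributes}
            for target in self.targets:
                target(dict(out))  # each target gets its own copy


def standardize_names(rows: List[Record]) -> List[Record]:
    """Process logic written only against interface attributes, never against a source."""
    return [{**r, "name": str(r["name"]).strip().title()} for r in rows]


def run(reader: ReaderMapping, writer: WriterMapping) -> None:
    writer.write(standardize_names(reader.read()))


if __name__ == "__main__":
    customer = DataInterface("Customer", ("id", "name"))
    # Two mappings for the same interface: a staged snapshot and a simulated stream.
    staged = ReaderMapping(customer, lambda: [{"cust_id": 1, "full_name": " ada lovelace "}],
                           {"id": "cust_id", "name": "full_name"})
    stream = ReaderMapping(customer, lambda: iter([{"CustomerId": 7, "Name": "alan turing"}]),
                           {"id": "CustomerId", "name": "Name"})
    responses, staged_log = [], []
    writer = WriterMapping(customer, [responses.append, staged_log.append])
    for reader in (staged, stream):  # same process logic, different source
        run(reader, writer)
    print(responses)  # [{'id': 1, 'name': 'Ada Lovelace'}, {'id': 7, 'name': 'Alan Turing'}]
    print(staged_log == responses)  # True
```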

The Databricks Jobs API lets you create, edit, and delete jobs. A Databricks job runs a data processing or data analysis task on a Databricks cluster with scalable resources; it can consist of a single task or be a large, multi-task workflow with complex dependencies.

The InfoSphere® DataStage® development kit and other job control interfaces are tools for running jobs directly on the engine. They make it possible to run jobs in many scenarios without using the Director client.

In EDQ, two or more processes that contain data interfaces can be linked, provided one is configured as a reader and the other as a writer.

We have an open-source Python library that focuses on creating front-end web interfaces using only Python, along with tools for data orchestration that make it easy to save and compare data pipeline results.
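A multi-task Databricks workflow with dependencies can be expressed as a Jobs API 2.1 `jobs/create` request body, in which each entry of `tasks` has a `task_key` and optional `depends_on` list. The minimal sketch below builds such a body and checks locally that every dependency exists and that there are no cycles, using `graphlib` (Python 3.9+). It does not call the API; the helper names, notebook paths, and cluster ID are hypothetical placeholders, and other task types and cluster settings are described in the Jobs API reference.

```python
import json
from graphlib import TopologicalSorter
from typing import Dict, List, Optional


def notebook_task(key: str, path: str, cluster_id: str,
                  depends_on: Optional[List[str]] = None) -> Dict:
    """One entry of the `tasks` array in a Jobs API 2.1 create request (hypothetical helper)."""
    task: Dict = {
        "task_key": key,
        "notebook_task": {"notebook_path": path},
        "existing_cluster_id": cluster_id,
    }
    if depends_on:
        task["depends_on"] = [{"task_key": d} for d in depends_on]
    return task


def execution_order(job: Dict) -> List[str]:
    """Return one valid run order; raises graphlib.CycleError (a ValueError) on a cycle."""
    graph = {t["task_key"]: {d["task_key"] for d in t.get("depends_on", [])}
             for t in job["tasks"]}
    return list(TopologicalSorter(graph).static_order())


def build_job(name: str, tasks: List[Dict]) -> Dict:
    """Assemble the request body, rejecting unknown or circular dependencies locally."""
    keys = {t["task_key"] for t in tasks}
    for t in tasks:
        missing = {d["task_key"] for d in t.get("depends_on", [])} - keys
        if missing:
            raise ValueError(f"{t['task_key']} depends on unknown task(s): {sorted(missing)}")
    job = {"name": name, "tasks": tasks}
    execution_order(job)  # fail early if the dependencies form a cycle
    return job


if __name__ == "__main__":
    cluster = "0000-000000-example"  # hypothetical cluster ID
    job = build_job("nightly-etl", [
        notebook_task("ingest", "/Shared/etl/ingest", cluster),
        notebook_task("transform", "/Shared/etl/transform", cluster, ["ingest"]),
        notebook_task("report", "/Shared/etl/report", cluster, ["transform"]),
    ])
    print(execution_order(job))  # ['ingest', 'transform', 'report']
    print(json.dumps(job, indent=2))
    # To submit (not done here): POST this body to <workspace-url>/api/2.1/jobs/create
    # with an "Authorization: Bearer <token>" header.
```

Validating the dependency graph before sending the request catches typos in `task_key` references without a round trip to the workspace.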
