Robots.txt File Editing to Block Dynamic URLs

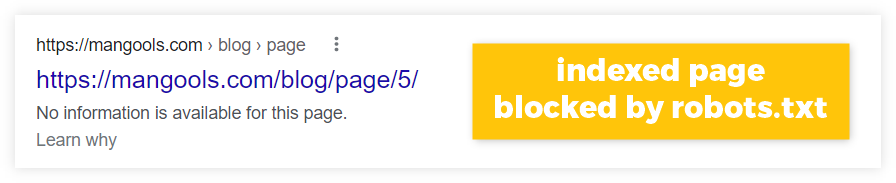

Note that robots.txt doesn't necessarily remove URLs from search engine results. It only forbids search engines from crawling the content of the blocked URLs (that is, pages). Removing URLs from search engine indexes is a separate question (probably one for Webmasters). A robots.txt file lives at the root of your site. Learn how to remove and edit the robots.txt file on your website, see examples, and explore robots.txt rules.
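To make the "crawling, not indexing" point concrete, here is a minimal sketch using Python's standard `urllib.robotparser`. The rules, the `example.com` domain, and the `build_parser` helper are assumptions made up for illustration. The check only answers whether a crawler is allowed to fetch a URL; it says nothing about whether that URL already appears in an index.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical rules for illustration; example.com is a placeholder domain.
ROBOTS_TXT = """\
User-agent: *
Disallow: /search
Disallow: /cart/
"""


def build_parser(robots_txt: str) -> RobotFileParser:
    """Parse robots.txt text that is already in memory (no network access)."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser


parser = build_parser(ROBOTS_TXT)

# Rules are matched as path prefixes, so /search also covers /search?q=...
may_crawl_search = parser.can_fetch("*", "https://example.com/search?q=shoes")
may_crawl_product = parser.can_fetch("*", "https://example.com/products/shoes")

print("search page crawlable:", may_crawl_search)
print("product page crawlable:", may_crawl_product)
```

In a real setup the file would be served from the site root (for example `https://example.com/robots.txt`), and `RobotFileParser.set_url` plus `read` could load it from there instead of from a string.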

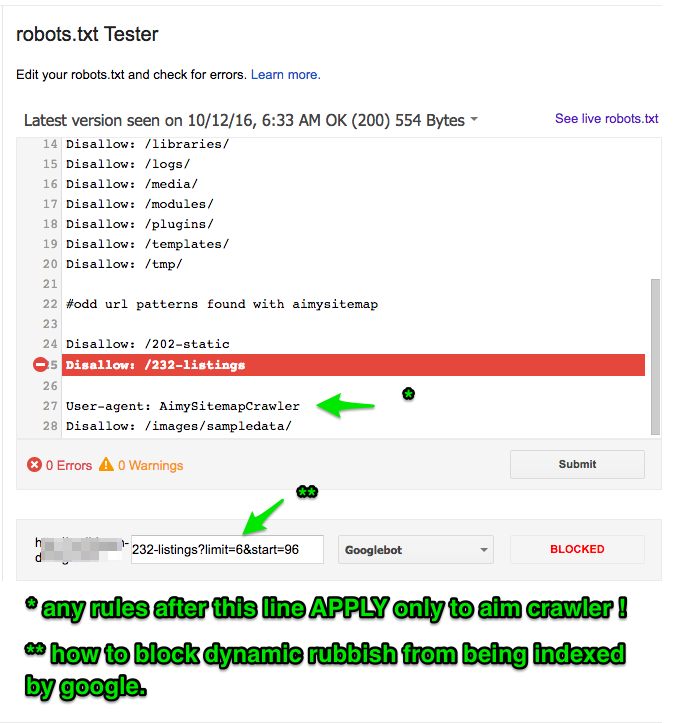

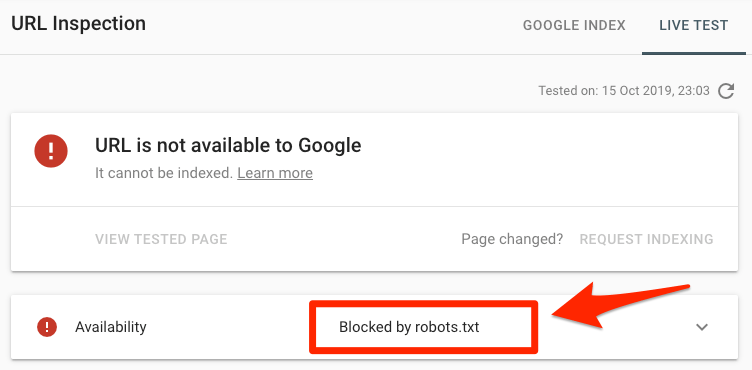

Robots.txt and SEO: everything you need to know. Robots.txt is key to preventing search engine robots from crawling restricted areas of your site. Learn how to block URLs with robots.txt.

A typical question: "I've seen basic articles online about allowing or disallowing certain subdirectories in robots.txt, but I have a more detailed need than that. Google Search Console keeps trying to discover and crawl certain URLs on my site that it shouldn't."

Learn how to safely configure your robots.txt file to block unwanted bots without accidentally deindexing your entire site. Explore real-world examples and wildcard patterns to target crawl restrictions efficiently and avoid common mistakes.

The "Disallow" directive in the robots.txt file is used to block specific web crawlers from accessing designated pages or sections of a website. Optimizing robots.txt with the "Disallow" directive can also help reduce the load on a website's server.
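As a rough sketch of how "Disallow" can target specific crawlers, the example below groups rules by `User-agent` and checks them with Python's standard `urllib.robotparser`. The bot name `BadBot`, the paths, and the `example.com` domain are hypothetical, chosen only for illustration.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical rules: one group for an unwanted bot, one for everyone else.
ROBOTS_TXT = """\
User-agent: BadBot
Disallow: /

User-agent: *
Disallow: /admin/
"""

rules = RobotFileParser()
rules.parse(ROBOTS_TXT.splitlines())

# BadBot matches its own group and is blocked from the whole site.
badbot_home = rules.can_fetch("BadBot", "https://example.com/")

# Other crawlers fall back to the "*" group: only /admin/ is off limits.
other_blog = rules.can_fetch("Googlebot", "https://example.com/blog/")
other_admin = rules.can_fetch("Googlebot", "https://example.com/admin/login")

print("BadBot may crawl home page:", badbot_home)
print("Other bots may crawl /blog/:", other_blog)
print("Other bots may crawl /admin/login:", other_admin)
```

Keep in mind that robots.txt is a request, not an access control: well-behaved crawlers honor it, but it does not technically stop a bot that chooses to ignore it.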

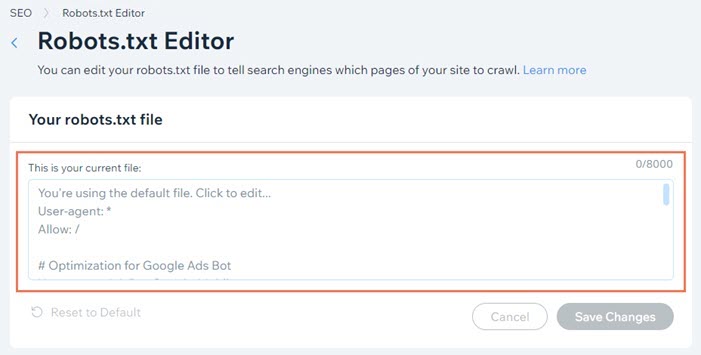

Editing your site's robots.txt file (Wix Help Center).

A related question: "Would preventing the spiders from indexing any URLs with a "?" or "&" (which would only be dynamic URL variations) cause any problems? Or is this just not an ideal best practice?"

This guide is designed for beginners who want to learn how to block URLs using a robots.txt file without causing SEO issues. It covers what a robots.txt file is, how it works, and step-by-step instructions for setting it up correctly.

To block a certain type of URL with robots.txt or .htaccess, you can use directives that restrict access to specific URLs or directories. In robots.txt, use the "Disallow" directive followed by the URL path or directory you want to block from being crawled by search engine bots.

You can create a robots.txt file with any text editor that supports UTF-8 encoding, or through built-in tools in popular CMS platforms like WordPress, Magento, and Shopify. Check your robots.txt file with tools like Google Search Console or SEO platforms like SE Ranking.
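For the question about URLs containing "?" or "&", a wildcard rule such as `Disallow: /*?` is the usual shape. The sketch below is a simplified matcher, written from scratch for illustration, that treats `*` as "any sequence of characters" and a trailing `$` as "end of URL", the two special characters described in RFC 9309. The helper names `rule_to_regex` and `is_blocked` are hypothetical, and the sketch deliberately ignores `Allow` rules and longest-match precedence, so it is not a full robots.txt implementation.

```python
import re
from typing import Iterable


def rule_to_regex(pattern: str) -> "re.Pattern[str]":
    """Translate a robots.txt path pattern into a compiled regex.

    '*' matches any run of characters; a trailing '$' anchors the end.
    Everything else is matched literally, starting at the beginning.
    """
    anchored = pattern.endswith("$")
    if anchored:
        pattern = pattern[:-1]
    body = ".*".join(re.escape(part) for part in pattern.split("*"))
    return re.compile(body + ("$" if anchored else ""))


def is_blocked(path_and_query: str, disallow_patterns: Iterable[str]) -> bool:
    """Return True if any Disallow pattern matches the given path + query."""
    return any(rule_to_regex(p).match(path_and_query) for p in disallow_patterns)


# Hypothetical rules that block every URL containing '?' or '&'.
DYNAMIC_URL_RULES = ["/*?", "/*&"]

static_blocked = is_blocked("/products/shoes", DYNAMIC_URL_RULES)
dynamic_blocked = is_blocked("/products?color=red&size=9", DYNAMIC_URL_RULES)

print("static URL blocked:", static_blocked)
print("dynamic URL blocked:", dynamic_blocked)
```

A rule this broad also blocks parameterized URLs you may want crawled, such as paginated listings, so it is worth testing it against a sample of real URLs from the site before deploying it.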

