Resolving the "RuntimeError: stack expects each tensor to be equal size" Error in PyTorch CNN Models

I'm trying a ResNet implementation from scratch. This error occurred during training after passing the dataset to a DataLoader: "RuntimeError: stack expects each tensor to be equal size, but got [3, 224..." (the message is cut off in the source). In a PyTorch convolutional neural network, this tensor size error can be caused by inconsistent image dimensions in the dataset, and fixing it means making those dimensions consistent before the samples are batched.
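The failure can be reproduced without a dataset at all. The following is a minimal sketch, assuming two made-up image tensors of different heights; `resize_to` is a hypothetical helper (not from the original question) that uses `torch.nn.functional.interpolate` to bring every sample to one size before stacking.

```python
import torch
import torch.nn.functional as F

# Two fake RGB "images" with different spatial sizes (made-up data).
images = [torch.rand(3, 224, 224), torch.rand(3, 200, 224)]

# The default collate function ends up calling torch.stack on the samples,
# which requires every tensor to have exactly the same shape.
try:
    torch.stack(images)
    stack_failed = False
except RuntimeError as err:
    stack_failed = True
    print(err)


def resize_to(img, size=(224, 224)):
    """Resize a [C, H, W] tensor to [C, size[0], size[1]] (hypothetical helper)."""
    # interpolate expects a batch dimension, so add one and remove it afterwards.
    resized = F.interpolate(img.unsqueeze(0), size=size,
                            mode="bilinear", align_corners=False)
    return resized.squeeze(0)


# Once every sample has the same shape, stacking succeeds.
batch = torch.stack([resize_to(img) for img in images])
print(batch.shape)
```

In a real pipeline the resizing would usually live in the dataset's transform rather than in a loop like this, so that every sample already has the target shape when the DataLoader collates it.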

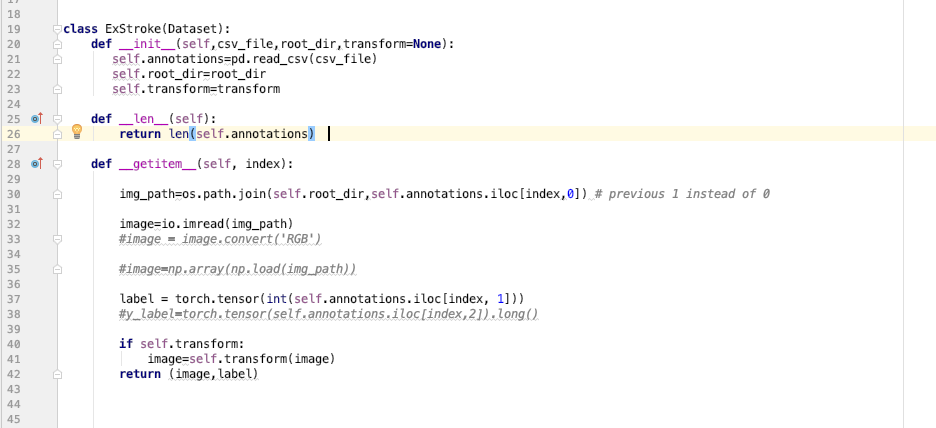

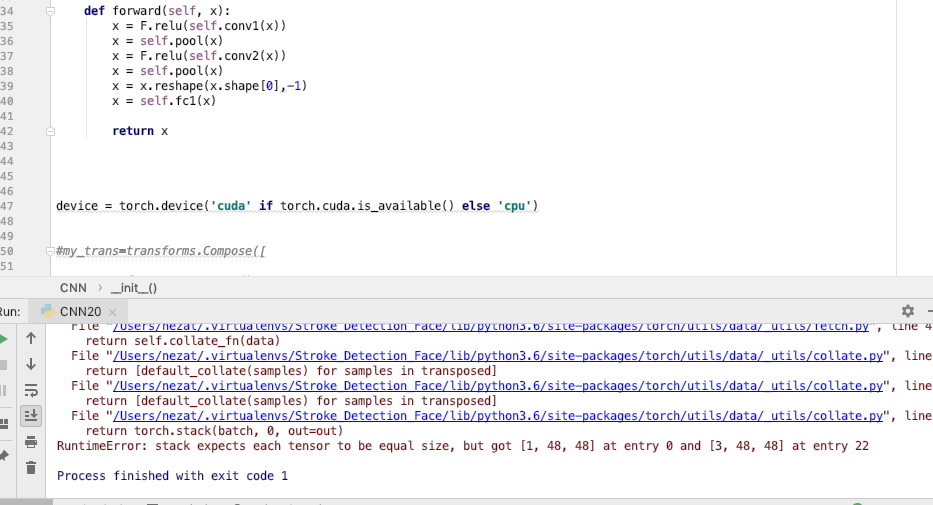

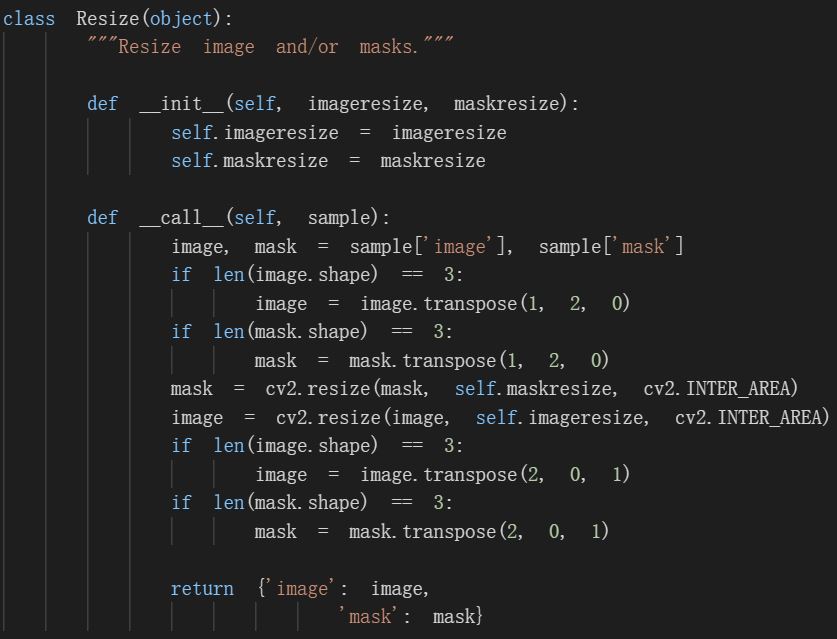

RuntimeError: stack expects each tensor to be equal size, but got [7, 768] at entry 0 and [8, 768] at entry 1. This happens because torch.stack takes tensors of the same shape and stacks them into a larger tensor.

In my case the patch size is 128, so a 3-channel image should produce a tensor of size [3, 128, 128], but a tensor of size [3, 106, 128] unexpectedly appeared later and triggered the error. There are generally two possible causes: either something is wrong with the image, or something is wrong with your own processing. The discussion below assumes the problem is in your own code. Most of the advice found online tells you to reduce the batch size; in the extreme case you can set batch size = 1, and then no tensors need to be stacked at all. That only hides the problem, and I do not recommend it to anyone. After a long investigation, I found that the offending image had size (500, 370, 3) when it was read, and that the tensor produced by the crop had size [3, 106, 128], so I started looking for the bug in the crop step. (The pre-fix code that followed is not included in the source.)

You could resize or pad the samples to create tensors of the same shape. The snippet quoted with this advice is truncated in the source: it sets device = torch.device("mps") and num_segments = 100, then builds mfcc_train and mfcc_test in loops over range(443) and range(290) and converts each with torch.from_numpy(np.array(...)).
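The original crop code is not shown, so the following is only a sketch of one way a [3, 106, 128] patch can arise and how to guard against it. It assumes the image is held as a [C, H, W] tensor with a height of 370, and that the crop offset was chosen too close to the bottom edge; `random_crop` is a hypothetical helper, not the author's function.

```python
import torch
import torch.nn.functional as F

PATCH = 128

# Assumed layout: [C, H, W] with H=370, W=500 (one reading of the (500, 370, 3) image).
img = torch.rand(3, 370, 500)

# Slicing past the edge does not raise; it silently returns a smaller tensor.
bad = img[:, 264:264 + PATCH, 0:PATCH]
print(bad.shape)  # torch.Size([3, 106, 128]) because 370 - 264 = 106


def random_crop(img, patch=PATCH):
    """Return a [C, patch, patch] crop, zero-padding images that are too small."""
    _, h, w = img.shape
    pad_h, pad_w = max(patch - h, 0), max(patch - w, 0)
    if pad_h or pad_w:
        # F.pad takes (left, right, top, bottom) for the last two dimensions.
        img = F.pad(img, (0, pad_w, 0, pad_h))
        _, h, w = img.shape
    # Offsets are limited so that the window always fits inside the image.
    top = int(torch.randint(0, h - patch + 1, (1,)))
    left = int(torch.randint(0, w - patch + 1, (1,)))
    return img[:, top:top + patch, left:left + patch]


good = random_crop(img)
small = random_crop(torch.rand(3, 100, 500))
print(good.shape, small.shape)
```

Bounding the offsets by `h - patch` and `w - patch` (rather than by the full height and width, or by the wrong axis) is what guarantees a fixed output shape.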

On resizing: if size is a sequence like (h, w), the output size will be matched to it. If size is an int, the smaller edge of the image will be matched to this number, i.e., if height > width, then the image will be rescaled to (size * height / width, size). Passing a single int therefore preserves the aspect ratio, so images with different aspect ratios still end up with different shapes.

For text inputs: I think the tokenized texts are not of the same length, as indicated by this warning message. If you adjust the input length to be the same within each batch, I think the error will go away.

Another report (translated from Japanese): What I want to achieve is a yes/no image classifier using a CNN with Python 3 and PyTorch. The problem is that the following error occurs even though all of the image sizes have been made the same.

A further report ends with this traceback: return torch.stack(batch, 0, out=out) RuntimeError: stack expects each tensor to be equal size, but got [157] at entry 0 and [154] at entry 1. The poster adds: I found in some GitHub post that this error can be caused by the batch size, so I changed the batch size to 8, and then the error is as follows (the rest is cut off in the source).
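For variable-length sequences such as the [157] and [154] tensors in the traceback, changing the batch size does not help; the samples have to be padded (or truncated) to a common length. A minimal sketch, assuming each sample is a `(token_ids, label)` pair with made-up contents; `pad_collate` is a hypothetical name for a custom `collate_fn`.

```python
import torch
from torch.nn.utils.rnn import pad_sequence
from torch.utils.data import DataLoader


def pad_collate(batch):
    """Pad 1-D sequences in a batch to the length of the longest one."""
    seqs, labels = zip(*batch)
    lengths = torch.tensor([len(s) for s in seqs])
    # pad_sequence right-pads every sequence with padding_value.
    padded = pad_sequence(list(seqs), batch_first=True, padding_value=0)
    return padded, lengths, torch.tensor(labels)


# Made-up data with the two lengths from the error message.
data = [
    (torch.ones(157, dtype=torch.long), 0),
    (torch.ones(154, dtype=torch.long), 1),
]

loader = DataLoader(data, batch_size=2, collate_fn=pad_collate)
padded, lengths, labels = next(iter(loader))
print(padded.shape, lengths.tolist())
```

Returning the original lengths alongside the padded batch lets the model mask out or ignore the padding positions later.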

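The difference between an int and an (h, w) size is easy to overlook, so here is a small pure-Python sketch of the rule quoted above. `resize_output_size` is a hypothetical helper that mirrors the description only; it is not the library's implementation, and the exact rounding in the library may differ.

```python
def resize_output_size(height, width, size):
    """Return the (height, width) implied by the resize rule described above."""
    if isinstance(size, int):
        # Match the shorter edge to `size` and scale the longer edge
        # by the same factor, so the aspect ratio is preserved.
        short, long = (width, height) if width <= height else (height, width)
        new_short, new_long = size, int(size * long / short)
        return (new_long, new_short) if height >= width else (new_short, new_long)
    # A sequence (h, w) is used as the output size directly.
    return tuple(size)


# Two images with different aspect ratios, resized with a single int:
portrait = resize_output_size(500, 370, 224)
landscape = resize_output_size(370, 500, 224)
print(portrait, landscape)  # the two shapes differ, so stacking would fail

# The same images resized with an explicit (h, w):
fixed_a = resize_output_size(500, 370, (224, 224))
fixed_b = resize_output_size(370, 500, (224, 224))
print(fixed_a, fixed_b)  # identical shapes, safe to stack
```

This is one reason a dataset can still raise the error even though a resize step is present: with an int size, only the shorter edge is fixed.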
