Python PySpark Tutorial Part 2: How to Create a PySpark DataFrame From Dictionaries, List of Tuples

PySpark Create DataFrame From List: Working Examples

In this article, we are going to discuss the creation of a PySpark DataFrame from a list of tuples. To do this, we will use the `createDataFrame()` method of the Spark session. This method creates a DataFrame from an RDD, a list, or a pandas DataFrame. Here `data` will be the list of tuples and `columns` will be a list of column names, so the call takes the form `spark.createDataFrame(data, columns)`.

In PySpark, we often need to create a DataFrame from a list. In this article, I will explain creating a DataFrame and an RDD from a list using PySpark examples. A list is a data structure in Python that holds a collection of items, and those items can be tuples. List items are enclosed in square brackets, like `[data1, data2, data3]`.
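The list-of-tuples case described above can be sketched as follows. This is a minimal illustration, not code from the original article: the sample rows, the column names, and the helper names `tuples_to_df` and `tuples_to_rdd` are all assumptions, and `spark` is expected to be an existing `SparkSession`.

```python
# Rows as a list of tuples. The values and column names are made up
# for illustration; they do not come from the original article.
data = [("Alice", "Sales", 3000), ("Bob", "IT", 4000), ("Cara", "IT", 3500)]
columns = ["name", "department", "salary"]


def tuples_to_df(spark):
    """Build a DataFrame from the list of tuples (hypothetical helper).

    Passing a list of column names as the schema names the columns and
    lets Spark infer each column's type from the data.
    """
    return spark.createDataFrame(data, schema=columns)


def tuples_to_rdd(spark):
    """Distribute the same list as an RDD of tuples (hypothetical helper)."""
    return spark.sparkContext.parallelize(data)
```

With a session obtained from `SparkSession.builder.getOrCreate()`, calling `tuples_to_df(spark).show()` should print the three rows under the given column names.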

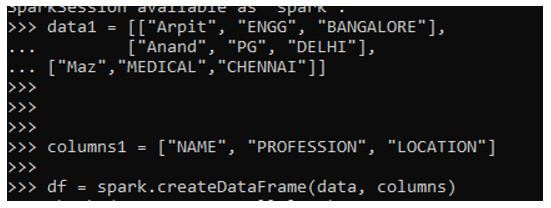

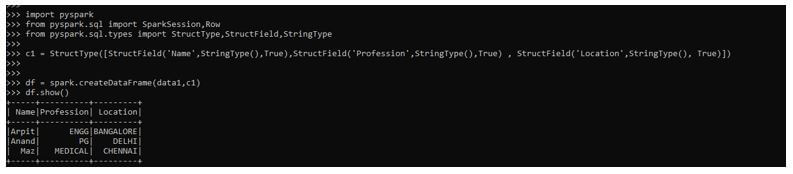

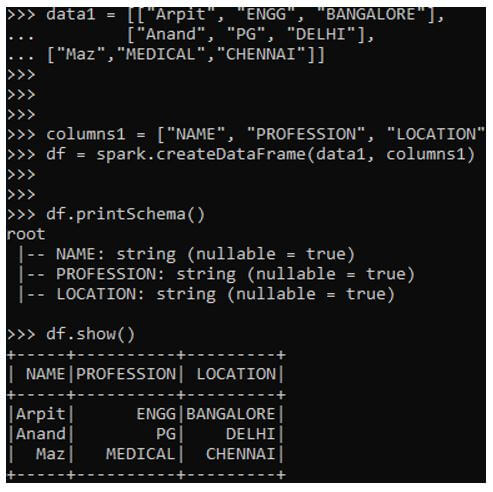

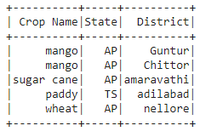

You can use the following methods to create a DataFrame from a list in PySpark.

Method 1: Create a DataFrame from a list. Define the list of data, `data = [10, 15, 22, 27, 28, 40]`, then create a DataFrame with one column: `df = spark.createDataFrame(data, IntegerType())`.

Method 2: Create a DataFrame from a list of lists, for example `data = [['a', 'east', 11], ['a', 'east', 8], ...]`.

In this video we will show how to create a Spark DataFrame from basic structures such as a list of tuples and a pandas DataFrame. PySpark is the Python API for Apache Spark.

I am trying to manually create a PySpark DataFrame given certain data, using schema fields such as `StructField("time epocs", DecimalType(), True)`, `StructField("lat", DecimalType(), True)`, and `StructField("long", DecimalType(), True)`. This gives an error when I try to display the DataFrame, so I am not sure how to do this.

A PySpark DataFrame can be created via `pyspark.sql.SparkSession.createDataFrame`, typically by passing a list of lists, tuples, dictionaries, or `pyspark.sql.Row` objects, a pandas DataFrame, or an RDD consisting of such a list. `pyspark.sql.SparkSession.createDataFrame` takes the `schema` argument to specify the schema of the DataFrame.
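The two methods and the explicit-schema question above could look roughly like this in practice. It is a sketch under stated assumptions: the third row of `rows`, the names in `row_columns`, the values in `positions`, the `DecimalType` precision and scale, and all helper function names are hypothetical. The original question does not show its data or error message, so the `Decimal` handling below only illustrates one common cause: a `DecimalType` field expects `decimal.Decimal` values, and plain Python floats fail schema verification. `pyspark` is imported inside the functions so that the data definitions can be inspected without a Spark installation.

```python
from decimal import Decimal

# Method 1 data: a flat list of integers becomes a single column.
values = [10, 15, 22, 27, 28, 40]

# Method 2 data: a list of lists, one inner list per row.
# The third row and the column names are illustrative assumptions.
rows = [["a", "east", 11], ["a", "east", 8], ["b", "west", 6]]
row_columns = ["team", "conference", "points"]

# Rows for a DecimalType schema. The values are Decimal instances
# rather than floats, because DecimalType fields reject plain floats.
positions = [
    (Decimal("1700000000"), Decimal("40.712800"), Decimal("-74.006000")),
    (Decimal("1700000060"), Decimal("40.713000"), Decimal("-74.005500")),
]


def single_column_df(spark):
    """Method 1: a non-struct schema yields one column named 'value'."""
    from pyspark.sql.types import IntegerType
    return spark.createDataFrame(values, IntegerType())


def list_of_lists_df(spark):
    """Method 2: each inner list is a row; types are inferred."""
    return spark.createDataFrame(rows, schema=row_columns)


def decimal_schema_df(spark):
    """Explicit schema; precision and scale are assumed, not from the source."""
    from pyspark.sql.types import DecimalType, StructField, StructType
    schema = StructType([
        StructField("time epocs", DecimalType(10, 0), True),
        StructField("lat", DecimalType(9, 6), True),
        StructField("long", DecimalType(9, 6), True),
    ])
    return spark.createDataFrame(positions, schema)
```

If the source data is already in floats, declaring the fields as `DoubleType()` instead of `DecimalType()` is another option that avoids the conversion to `Decimal`.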

One approach to creating a PySpark DataFrame from multiple lists is to first convert the lists to a NumPy array and then create the DataFrame from that array using the `createDataFrame()` function. This approach uses the `pyspark.sql.types` module to specify the schema of the DataFrame.

In this article, we are going to discuss how to create a PySpark DataFrame from a list. To do this, first create a list of data and a list of column names, then pass the zipped data to the `spark.createDataFrame()` method, which creates the DataFrame.

There are several ways to create a DataFrame in PySpark: 1) from a list or a dictionary (i.e., from a collection); 2) from an external data source (e.g., CSV, JSON, Parquet); 3) from an RDD (resilient distributed dataset). I will show you how to create a PySpark DataFrame from Python objects directly, using the `SparkSession.createDataFrame` method in a variety of situations.
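Building a DataFrame from several parallel lists, and from dictionaries, might be sketched as below. Note that this uses Python's built-in `zip` rather than the NumPy conversion mentioned above, since a NumPy array would coerce mixed string and integer columns to a single type. The sample values and the helper names are assumptions for illustration, and `spark` is expected to be an existing `SparkSession`.

```python
# Parallel lists, one per column (illustrative values).
names = ["Alice", "Bob", "Cara"]
ages = [34, 45, 29]
column_names = ["name", "age"]

# zip pairs the lists element-wise, producing one tuple per row.
zipped_rows = list(zip(names, ages))

# The same records as dictionaries; the keys become column names.
dict_rows = [dict(zip(column_names, row)) for row in zipped_rows]


def zipped_lists_df(spark):
    """DataFrame from the zipped lists, with explicit column names."""
    return spark.createDataFrame(zipped_rows, schema=column_names)


def dicts_df(spark):
    """DataFrame from a list of dictionaries; the schema is inferred."""
    return spark.createDataFrame(dict_rows)


def rows_df(spark):
    """DataFrame from pyspark.sql.Row objects built from the dictionaries."""
    from pyspark.sql import Row
    return spark.createDataFrame([Row(**d) for d in dict_rows])
```

Calling `printSchema()` on each result is a quick way to compare how the column types were inferred in the three cases.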


