Python Pandas Tutorial 15: Handle Large Datasets in Pandas | Memory Optimization Tips for Pandas

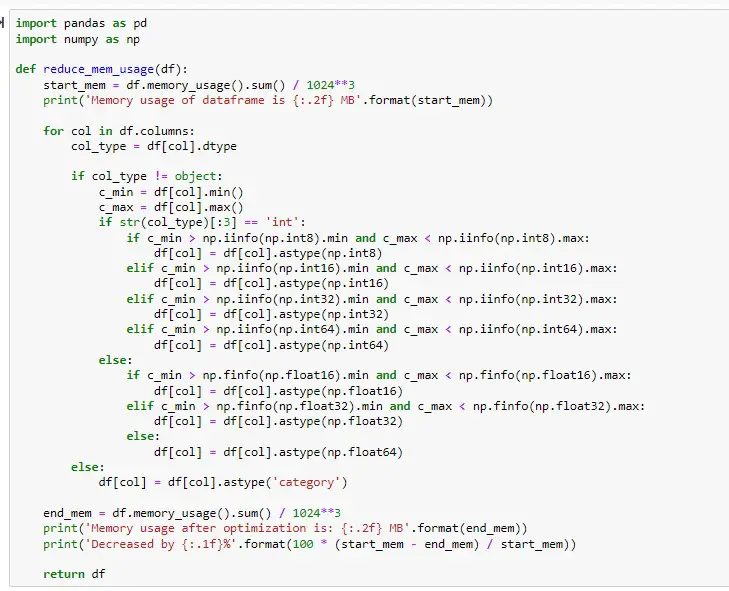

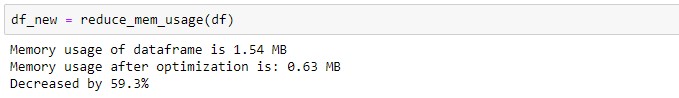

How To Use Pandas With Large Datasets Optimization

Often the datasets that you load in pandas are very big, and you may run out of memory. In this video we will cover some memory optimization tips in pandas.

How to handle large datasets in Python? Use efficient data types: choose more memory-efficient types (e.g., int32 instead of int64, float32 instead of float64) to reduce memory usage. Load less data: use the `usecols` parameter in `pd.read_csv()` to load only the necessary columns, reducing memory consumption.
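The two tips above (narrower data types and loading fewer columns) can be sketched roughly as follows. This is a minimal illustration, not the video's own code: the CSV content, the column names (`id`, `price`, `qty`, `note`), and the chosen dtypes are all assumptions made up for the example.

```python
import io

import pandas as pd

# Hypothetical sample data standing in for a large CSV file on disk.
csv_text = "id,price,qty,note\n" + "\n".join(
    f"{i},{i * 0.5},{i % 100},row {i}" for i in range(1000)
)

# Default load: every column, with pandas' default 64-bit numeric types.
df_default = pd.read_csv(io.StringIO(csv_text))

# Leaner load: only the columns we need, with narrower dtypes.
# The dtypes are assumptions; they must be wide enough for the real values.
df_small = pd.read_csv(
    io.StringIO(csv_text),
    usecols=["id", "price", "qty"],
    dtype={"id": "int32", "price": "float32", "qty": "int8"},
)

# deep=True also counts the memory held by string (object) columns.
default_bytes = df_default.memory_usage(deep=True).sum()
small_bytes = df_small.memory_usage(deep=True).sum()
print(f"default: {default_bytes} bytes, optimized: {small_bytes} bytes")
```

Before narrowing a column, check its actual value range: an `int8` column, for instance, can only hold values from -128 to 127.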

How To Handle Large Datasets In Python With Pandas Python Simplified

Here is a simplified version of my code: `import pandas as pd`, then `df = pd.read_csv('large_dataset.csv')` to load the dataset, and, as an example operation (filtering and aggregating), `result = df[df['column_name'] > threshold_value].groupby('another_column').mean()`.

How to handle large datasets in Python? Reduce memory consumption by loading only the necessary columns using the `usecols` parameter in `pd.read_csv()`. This technique only requires...

Conquer large datasets with pandas in Python! This tutorial unveils strategies for efficient CSV handling and optimizing memory usage. Whether you're a novice or an experienced data wrangler, learn step-by-step techniques to streamline your workflow and speed up data processing.

Let's look at some methods for using pandas to manage bigger datasets in Python. You can process millions of records using these methods. There are various methods for handling large data, such as sampling, chunking, and optimizing data types. Let's discuss them one by one.
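As one possible illustration of chunking, the filter-then-group-mean operation quoted above can be computed without holding the whole file in memory. The sketch below is an assumption-laden example rather than anyone's actual code: the data, the column names `group` and `value`, the threshold, and the chunk size are hypothetical. Because a mean cannot be averaged across chunks directly, it accumulates per-group sums and counts and divides at the end.

```python
import io

import pandas as pd

# Hypothetical data; in practice this would be the path to a large CSV file.
csv_text = "group,value\n" + "\n".join(
    f"{'ab'[i % 2]},{i}" for i in range(10)
)
threshold_value = 3  # hypothetical filter threshold

totals = None
# chunksize makes read_csv yield DataFrames of at most 4 rows each.
for chunk in pd.read_csv(io.StringIO(csv_text), chunksize=4):
    filtered = chunk[chunk["value"] > threshold_value]
    if filtered.empty:
        continue  # nothing in this chunk passed the filter
    # Per-chunk partial aggregates: sum and row count for each group.
    partial = filtered.groupby("group")["value"].agg(["sum", "count"])
    # fill_value=0 handles groups that appear in only one of the two frames.
    totals = partial if totals is None else totals.add(partial, fill_value=0)

# Combine the partial aggregates into the overall per-group mean.
result = totals["sum"] / totals["count"]
print(result)
```

The same pattern works for any aggregate that can be rebuilt from partial results (sums, counts, minimums, maximums). Aggregates such as medians need a different approach.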

In this tutorial, we will walk you through ways to improve your experience with large datasets in pandas. First, try loading the dataset with a memory optimization parameter. Also, try changing the data types, especially to memory-friendly ones, and drop any unnecessary columns.

Since you are reading this article, I assume you want to use pandas for its rich set of features even though the dataset is large. But is it possible to use pandas on larger-than-memory datasets? The answer is yes, up to a certain extent: you can handle large datasets in Python using pandas with some techniques.

This tutorial focuses on techniques and strategies for optimizing pandas when handling large datasets. By mastering these techniques, you'll be able to process data faster, reduce memory usage, and write more efficient code.

Whether you're working with gigabytes or terabytes of data, processing it efficiently is key to maintaining performance and minimizing memory usage. In this post, we'll explore advanced techniques and best practices in pandas that can help you handle large datasets without running into memory bottlenecks or performance degradation.
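For data that is already loaded, the advice about changing data types and dropping unnecessary columns might look something like the sketch below. The DataFrame and its column names (`user_id`, `score`, `country`, `debug_note`) are hypothetical, and whether each conversion is safe depends on the real data: downcasting floats to float32 loses precision, and the `category` type pays off mainly for columns with few distinct values.

```python
import pandas as pd

# Hypothetical frame standing in for data that has already been loaded.
df = pd.DataFrame({
    "user_id": range(1000),
    "score": [i / 10 for i in range(1000)],
    "country": ["US", "DE", "IN", "BR"] * 250,
    "debug_note": ["unused"] * 1000,
})
before = df.memory_usage(deep=True).sum()

# Drop a column the analysis does not need (assumed unnecessary here).
df = df.drop(columns=["debug_note"])

# Downcast numeric columns to the smallest type that holds their values.
df["user_id"] = pd.to_numeric(df["user_id"], downcast="integer")
df["score"] = pd.to_numeric(df["score"], downcast="float")

# Store a low-cardinality string column as a categorical.
df["country"] = df["country"].astype("category")

after = df.memory_usage(deep=True).sum()
print(f"before: {before} bytes, after: {after} bytes")
print(df.dtypes)
```

Comparing `memory_usage(deep=True)` before and after each step is a simple way to see which change actually saves memory on a given dataset.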
