PySpark: Reading a JSON File into a DataFrame and Writing It Back

In this article, I will explain how to use PySpark to efficiently read JSON files into DataFrames, how to handle null values, how to handle specific date formats, and finally, how to write a DataFrame to a JSON file.

How can I read the following JSON structure into a Spark DataFrame using PySpark? My JSON structure is {"results": [{"a":1,"b":2,"c":"name"}, {"a":2,"b":5,"c":"foo"}]}. I have tried with: df = spark.read.

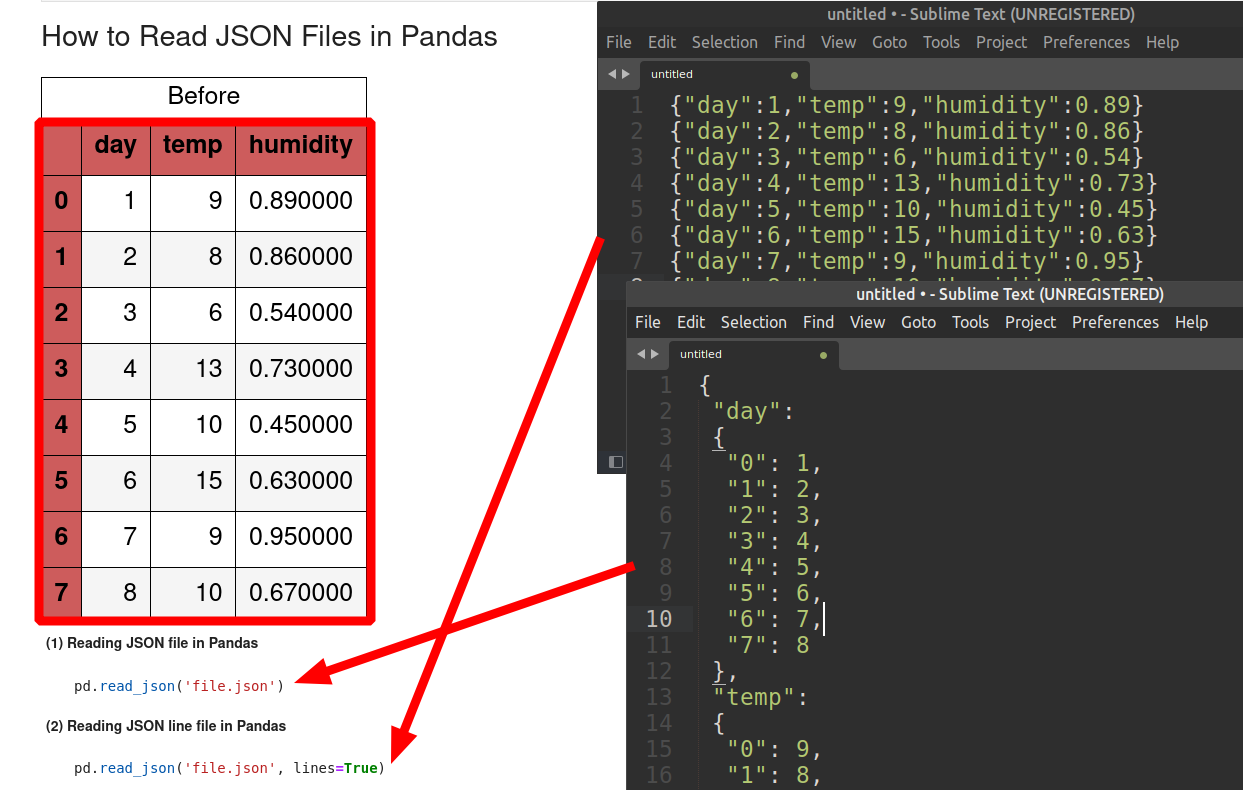

Reading JSON files in PySpark means using the spark.read.json() method to load JavaScript Object Notation (JSON) data into a DataFrame, converting this versatile text format into a structured, queryable entity within Spark's distributed environment.

In Apache Spark, reading a JSON file into a DataFrame can be achieved using either spark.read.json("path") or spark.read.format("json").load("path"). Both methods are equivalent in functionality but offer different syntactic styles.

This section covers how to read and write data in various formats using PySpark. You'll learn how to load data from common file types (e.g., CSV, JSON, Parquet, ORC) and store data efficiently. PySpark provides a DataFrame API for reading and writing JSON files. You can use the read property of the SparkSession object to read a JSON file into a DataFrame.

In this article, we are going to convert a JSON string to a DataFrame in PySpark. Method 1: using read_json(). We can read JSON files using pandas.read_json, which reads JSON files through pandas. Syntax: pandas.read_json("file_name.json").

In this comprehensive 3000-word guide, I'll walk you through the ins and outs of reading JSON into PySpark DataFrames using a variety of techniques. I'll provide code snippets you can apply right away, along with explanations of how each approach works under the hood in Spark on Linux systems.

When a JSON object is read, Spark parses the object and automatically infers the schema. You can see this using df.printSchema(). Suppose that instead of directly giving you year, month, and date columns, the columns are wrapped inside a date object. In that case, you can set an explicit schema for the JSON.

With PySpark, you can read JSON files using the read.json() method, which creates a DataFrame from the JSON file so that its contents can be displayed. To write the contents of a DataFrame back to a JSON file, you can use the write.json() method.

