PySpark (Python): Add a Column With the File Name Without a For-Each Loop

I need to add the name of each file in the file path as a data column, to record which file each row came from. Using a for-each loop to process each file individually takes too much time, given the number of files I am reading and the other data manipulations this DataFrame requires. Loading data in Spark and adding the file name as a column is a common scenario. In PySpark it can be done without a loop by reading all files in a single pass and tagging each row with its source file, either through the DataFrame API (the `input_file_name()` function in `pyspark.sql.functions`) or with RDD transformations. Below, I'll provide a detailed explanation along with an example to illustrate this process.

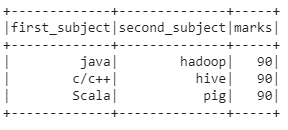

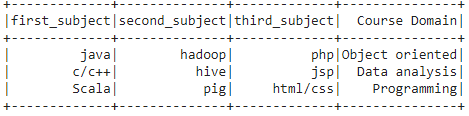

To add a new column with a constant value, call the `lit()` function inside `withColumn()` and pass it the required value; `lit()` is available in the `pyspark.sql.functions` module. In this article, I will explain different ways to add a new column to a DataFrame using `withColumn()`, `select()`, and `sql()`, including adding a constant column with a default value, deriving a column from another column, adding a column with a null (`None`) value, and adding multiple columns.

The same `withColumn()` function can add file and folder details to the DataFrame. For example, suppose each file contains the following columns: first name, last name, age, sex, and location, and we want to add a new column, `state`, whose values are derived from the file names (Karnataka and …).

How To Add a New Column To a PySpark DataFrame In Python (5 Examples)

Add a column using `withColumn()`: this function can be used on a DataFrame either to add a new column or to replace an existing column that has the same name. Each call introduces a projection into the query plan, so calling `withColumn()` many times, for instance in a loop that adds multiple columns, can produce very large plans that cause performance issues and even a `StackOverflowException`. To avoid this, add multiple columns at once with a single `select()`.

You can also add a column from a list of values by using a UDF. A PySpark DataFrame is similar to a relational table in Spark SQL and can be created using various functions in `SparkSession`, for example `df = spark.read.format("csv").load("path/to/file.csv")`. Once it is loaded, `withColumn()` can add a new column based on an existing column or a computed value.

Now, let's explore the different ways to add a new column to this DataFrame. One is a user-defined function (UDF): define a Python function, wrap it with `udf()`, and add its result as a new column. For example, with `from pyspark.sql.types import StringType`, an `age_category(age)` function that returns `"young"` if `age < 25` and `"adult"` otherwise becomes a UDF via `age_udf = udf(age_category, StringType())`.
