PySpark: Learn How to Create a PySpark DataFrame

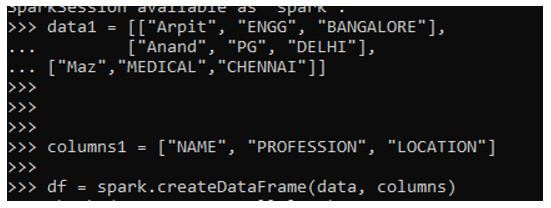

How to Create a Spark DataFrame: 5 Methods with Examples

PySpark helps process large datasets through its DataFrame structure. In this article, you will learn several methods for creating a PySpark DataFrame, each with a PySpark example. Every method starts by initializing a SparkSession, which serves as the entry point for all PySpark applications. To create a DataFrame from a list, we need the data and the column names, so we create those first. The first method creates a DataFrame from an RDD. We will also see an example of creating a DataFrame from a list of Row objects.

PySpark: Create a DataFrame from a List (Working Examples)

A PySpark DataFrame is typically created with pyspark.sql.SparkSession.createDataFrame. You can pass it a list of lists, tuples, dictionaries, or pyspark.sql.Row objects, a pandas DataFrame, or an RDD consisting of such a list. createDataFrame also takes a schema argument that specifies the schema of the DataFrame.

A common question goes like this: "I am trying to manually create a PySpark DataFrame given certain data, with the schema StructField("time epocs", DecimalType(), True), StructField("lat", DecimalType(), True), StructField("long", DecimalType(), True). This gives an error when I try to display the DataFrame, so I am not sure how to do this."

PySpark lets users handle large datasets efficiently through distributed computing. Whether you are new to Spark or looking to sharpen your skills, this guide covers how to create DataFrames and manipulate their data. It includes step-by-step instructions and examples to simplify your data processing tasks.

How to Create a PySpark DataFrame the Easy Way

In this PySpark tutorial, you'll learn the fundamentals of Spark and how to build distributed data processing pipelines. You'll also see how to use Spark's libraries to transform and analyze large datasets efficiently, with examples. DataFrames can be created in several ways in PySpark. You can create a DataFrame from an existing RDD with the toDF method. In Scala the column names usually come from a case class, while in PySpark they come from Row objects or a list of column names. You can also create DataFrames from structured data files such as CSV, JSON, and Parquet using the spark.read interface. Loading a CSV file covers scenarios ranging from simple to complex, and handling its common errors keeps your pipelines robust. A DataFrame is a distributed collection of data organized into named columns. The typical PySpark methods for creating one are from a local list, from an RDD, from a pandas DataFrame, and from data files.
