Pyspark Create Dataframe With Examples Spark Qas

`pyspark.sql.functions.when` takes a Boolean column as its condition. When using PySpark, it is often useful to think "column expression" when you read "column". Logical operations on PySpark columns use the bitwise operators: `&` for and, `|` for or, and `~` for not. When combining these with comparison operators such as `<`, parentheses are often needed, because in Python the bitwise operators bind more tightly than comparisons. Multiple conditions in `when` can therefore be built with `&` and `|`, as long as every expression that forms part of the condition is enclosed in its own parentheses `()`.

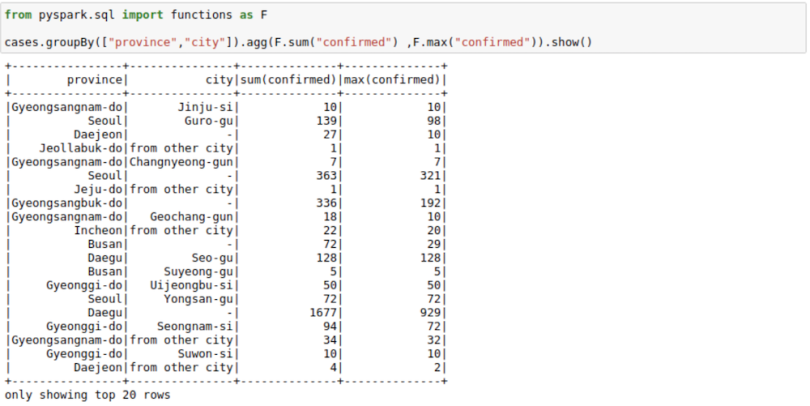

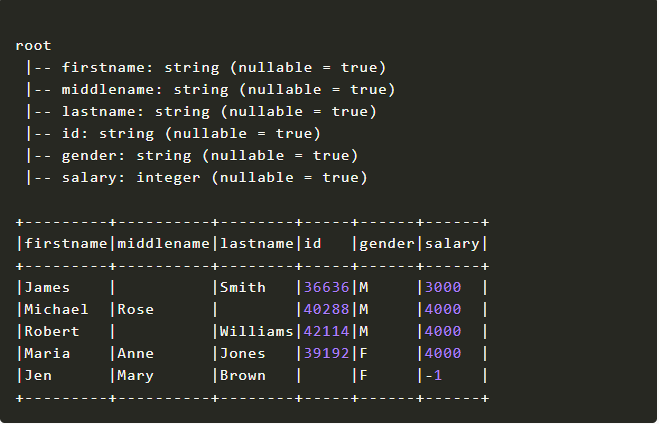

In Spark version 1.2.0, one could use `subtract` with two SchemaRDDs to end up with only the content of the first one that is absent from the second: `val onlynewdata = todayschemardd.subtract(yesterdayschemardd)`, which leaves the new rows in `onlynewdata`. In PySpark, you can also use `bool(df.head(1))` to obtain a True or False value; it returns False if the DataFrame contains no rows.

Related PySpark questions cover exploding JSON in a column into multiple columns, manually creating a PySpark DataFrame, the not-equal comparison operator (`!=`), efficiently counting the null and NaN values in each column of a DataFrame, and displaying a Spark DataFrame in a table format. Another asker comes from a pandas background and is used to reading data from CSV files into a DataFrame and then simply changing the column names to something useful using the simple command: df.columns =.

