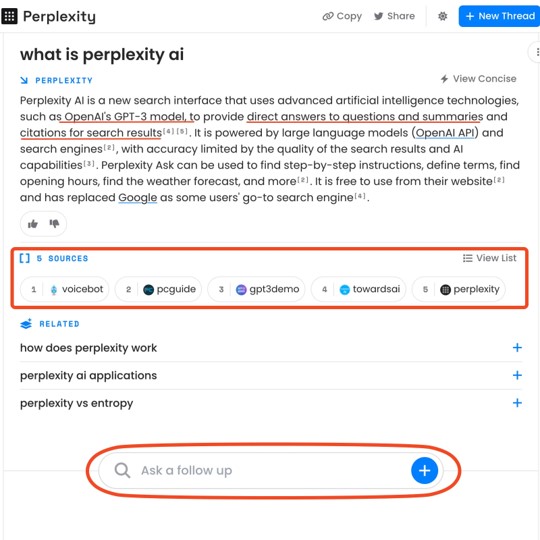

Perplexity Future Of Search Engines With Natural Text Comprehension Ability

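Is Perplexity AI Showing Us The Future Of Search?

So perplexity represents the number of sides of a fair die that, when rolled, produces a sequence with the same entropy as your given probability distribution.

Number of states

OK, so now that we have an intuitive definition of perplexity, let's take a quick look at how it is affected by the number of states in a model.

Perplexity AI is not the end of search, but it may be the starting point for our escape from the "information garbage dump." It is like the GPT-4 of search engines: it understands what you are saying, and it also knows where to go to find the answer. Of course, if it ever launches a Pro membership, don't forget to check group-buying sites for a cheaper group plan. Knowing how to use AI is not enough; you also have to look after your wallet, haha~ (AI evangelist Warren)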
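The following minimal Python sketch illustrates the fair-die intuition and the number-of-states point. It assumes perplexity is defined as $2^{H}$, where $H$ is the Shannon entropy in bits. The names `entropy_bits` and `perplexity` are hypothetical helpers and do not come from any library.

```python
import math

def entropy_bits(probs):
    """Shannon entropy in bits of a discrete distribution (zero-probability states are skipped)."""
    return -sum(p * math.log2(p) for p in probs if p > 0)

def perplexity(probs):
    """Perplexity = 2 ** entropy: the number of sides of a fair die with the same entropy."""
    return 2 ** entropy_bits(probs)

# A fair k-sided die: perplexity equals the number of states k (up to float rounding).
for k in (2, 6, 20):
    print(k, perplexity([1 / k] * k))

# A loaded 6-sided die has 6 states but is as predictable as a die with fewer sides.
loaded = [0.5, 0.1, 0.1, 0.1, 0.1, 0.1]
print(round(perplexity(loaded), 2))
```

For a uniform distribution over k states, the perplexity is exactly k, so it grows with the number of states. The loaded six-sided die still has six states, but its perplexity is only about 4.47 ($\sqrt{20}$). In other words, it is as unpredictable as a fair die with roughly four and a half sides.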

Perplexity AI: The Future Of Search, By Machine Learning Street Talk

So, once the model is given the preceding words of the input (that is, the history), the fewer equally likely outputs the language model has, the better. Fewer outputs mean the model knows better which output $e_i$ it should produce for the given history $\{e_1, \dots, e_{i-1}\}$. In other words, the smaller the perplexity, the better the language model.

Why can I compare perplexity between my first two outputs while the third output doesn't appear to be comparable? For example, k = 9 in the first two outputs hovers around a perplexity of 32,000. In the third output, k = 10 is nearly 100,000 in perplexity, nowhere near 32,000. Wouldn't we expect perplexity for k = 10 to remain close to 32,000?

What do you make of Perplexity removing DeepSeek's censorship to provide unbiased, accurate answers? Perplexity: "We're pleased to announce that the new DeepSeek R1 model is now live on all Perplexity platforms."

And this is the perplexity of the corpus, normalized by the number of words. If you feel uncomfortable with the log identities, search for a list of logarithmic identities.
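A few lines of Python are enough to sketch the per-word normalization and the log identity. The sketch assumes you already have the model's conditional probability $p(e_i \mid e_1, \dots, e_{i-1})$ for every token and works in base 2. The probability values and the helper name `sequence_perplexity` are hypothetical.

```python
import math

def sequence_perplexity(cond_probs):
    """Perplexity of a sequence from p(e_i | e_1, ..., e_{i-1}) for each position i.

    Uses the log identity log(prod p_i) = sum(log p_i) to avoid underflow on long
    sequences, then normalizes by the number of tokens N:
        PP = 2 ** (-(1/N) * sum(log2 p_i))  ==  (prod p_i) ** (-1/N)
    """
    n = len(cond_probs)
    if n == 0:
        raise ValueError("need at least one token probability")
    log_likelihood = sum(math.log2(p) for p in cond_probs)  # base-2 log likelihood
    return 2 ** (-log_likelihood / n)

# Hypothetical per-token probabilities from two models on the same 4-token text.
confident = [0.5, 0.5, 0.5, 0.5]  # model is fairly sure about each next token
uncertain = [0.1, 0.1, 0.1, 0.1]  # model spreads probability over many tokens

print(sequence_perplexity(confident))      # 2.0
print(sequence_perplexity(uncertain))      # about 10
print(sequence_perplexity(confident * 3))  # 2.0: same text repeated, same perplexity
```

The log-likelihood is divided by the number of tokens N, so repeating the same text three times leaves the perplexity unchanged. This is one reason perplexities computed on data sets of different sizes are not automatically incomparable. The confident model also has the lower perplexity, which matches the point that a smaller perplexity means a better language model.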

Perplexity: The Future Of Search Engines

Would comparing perplexities be invalidated by the different data set sizes? No. I copy below some text on perplexity I wrote with some students for a natural language processing course (assume $\log$ is base 2): In order to assess the quality of a language model, one needs to define evaluation metrics. One evaluation metric is the log likelihood of a text, which is computed as follows: $\log P(w_1, \dots, w_N) = \sum_{i=1}^{N} \log P(w_i \mid w_1, \dots, w_{i-1})$.

In the Coursera NLP course, Dan Jurafsky calculates the following perplexity: operator (1 in 4), sales (1 in 4), technical support (1 in 4), 30,000 names (1 in 120,000 each). He says the perplexity is 53.

Perplexity's fast model, Claude 4.0, GPT-4.1, Gemini 2.5 Pro, Grok 3 Beta, Perplexity's unbiased reasoning model, and OpenAI's latest reasoning model. I used it to tell my own fortune: "Please act as a fortune-telling master and do a reading for me. I want to know my fate at each stage of my life. I was born in year xx, lunar month x, day x, at hour x."

Only people who have never used it in depth hype this thing. Perplexity's models are adulterated, the sources it cites are low quality, and Deep Research hallucinates severely. Google's position in search simply cannot be shaken: the citation quality of Grounding with Google Search in AI Studio is miles ahead of Perplexity. Last week, before Pinduoduo released its earnings report, I wanted to compile its financial data from 2023 Q4 to 2024 Q3, and Perplexity, whether using o3-mini or Sonnet 3.7…
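To check Jurafsky's figure of 53 from the call-routing example, the following small Python computation treats the four listed cases as a single probability distribution over 30,003 outcomes and takes $2^{H}$ of its base-2 entropy. This reading of how the 53 is obtained is an assumption, not something stated in the course excerpt above.

```python
import math

# Call-routing example: (number of outcomes, probability of each outcome).
outcomes = [
    (1, 1 / 4),             # "operator"
    (1, 1 / 4),             # "sales"
    (1, 1 / 4),             # "technical support"
    (30_000, 1 / 120_000),  # each of the 30,000 names
]

# Sanity check: the probabilities form a valid distribution.
total = sum(count * p for count, p in outcomes)
assert math.isclose(total, 1.0)

# Entropy in bits, H = -sum p * log2 p, summed over every individual outcome.
entropy = -sum(count * p * math.log2(p) for count, p in outcomes)
perplexity = 2 ** entropy
print(f"H = {entropy:.3f} bits, perplexity = {perplexity:.1f}")
```

This gives $H \approx 5.718$ bits and a perplexity of about 52.6, which rounds to the 53 quoted in the course. Most of the entropy (about 4.2 of the 5.7 bits) comes from the 30,000 names, even though together they receive only a quarter of the probability mass.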


