Stanford's New Distributed Neural Graph Architecture for AI

What if we operated a dynamic, distributed graph network built from different modules? What if we combined Transformer blocks with Mamba blocks into an adaptive architecture for more complex tasks? Discover how researchers from Meta FAIR and Stanford University are rethinking AI architecture by eliminating traditional layer structures in favor of dynamic, distributed graph networks of specialized modules.
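
To make routing between heterogeneous modules concrete, here is a toy Python sketch. It is not the Meta FAIR/Stanford method. The two modules are untrained stand-ins: an attention-style mixer and a plain linear-recurrence scan loosely inspired by state-space models such as Mamba. The module pool, the routing keys, and the `route` function are hypothetical names invented for illustration. At every hop, the router scores each module against a summary of the current sequence and applies the winner. As a result, different inputs can follow different paths through the same pool of modules.

```python
import math
from typing import Callable, Dict, List, Tuple

Vector = List[float]
Seq = List[Vector]


def attention_block(seq: Seq) -> Seq:
    """Toy attention-style module: each position becomes a softmax-weighted
    average of all positions, using raw dot products as scores (no learned weights)."""
    out = []
    for q in seq:
        scores = [sum(a * b for a, b in zip(q, k)) for k in seq]
        top = max(scores)  # subtract the max for numerical stability
        weights = [math.exp(s - top) for s in scores]
        total = sum(weights)
        out.append([sum(w * v[d] for w, v in zip(weights, seq)) / total for d in range(len(q))])
    return out


def scan_block(seq: Seq, decay: float = 0.9) -> Seq:
    """Toy state-space-style module: the linear recurrence h_t = decay * h_{t-1} + x_t.
    Real Mamba blocks use input-dependent (selective) parameters; this one does not."""
    state = [0.0] * len(seq[0])
    out = []
    for x in seq:
        state = [decay * h + xi for h, xi in zip(state, x)]
        out.append(list(state))
    return out


# Hypothetical module pool and fixed routing keys, chosen only for illustration.
MODULES: Dict[str, Callable[[Seq], Seq]] = {"attention": attention_block, "scan": scan_block}
KEYS: Dict[str, Vector] = {"attention": [1.0, 0.0], "scan": [0.0, 1.0]}


def route(seq: Seq, hops: int) -> Tuple[Seq, List[str]]:
    """At each hop, score every module against the mean of the current sequence
    and apply the highest-scoring module; return the final sequence and the path taken."""
    path = []
    for _ in range(hops):
        n = len(seq)
        summary = [sum(x[d] for x in seq) / n for d in range(len(seq[0]))]
        name = max(KEYS, key=lambda m: sum(a * b for a, b in zip(summary, KEYS[m])))
        seq = MODULES[name](seq)
        path.append(name)
    return seq, path
```

For example, `route([[1.0, 0.0], [0.5, 0.0]], hops=2)` takes the path `["attention", "attention"]`. By contrast, `route([[0.0, 1.0], [0.0, 2.0]], hops=1)` is sent to `"scan"`. A learned system would replace the fixed keys with trained router parameters.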

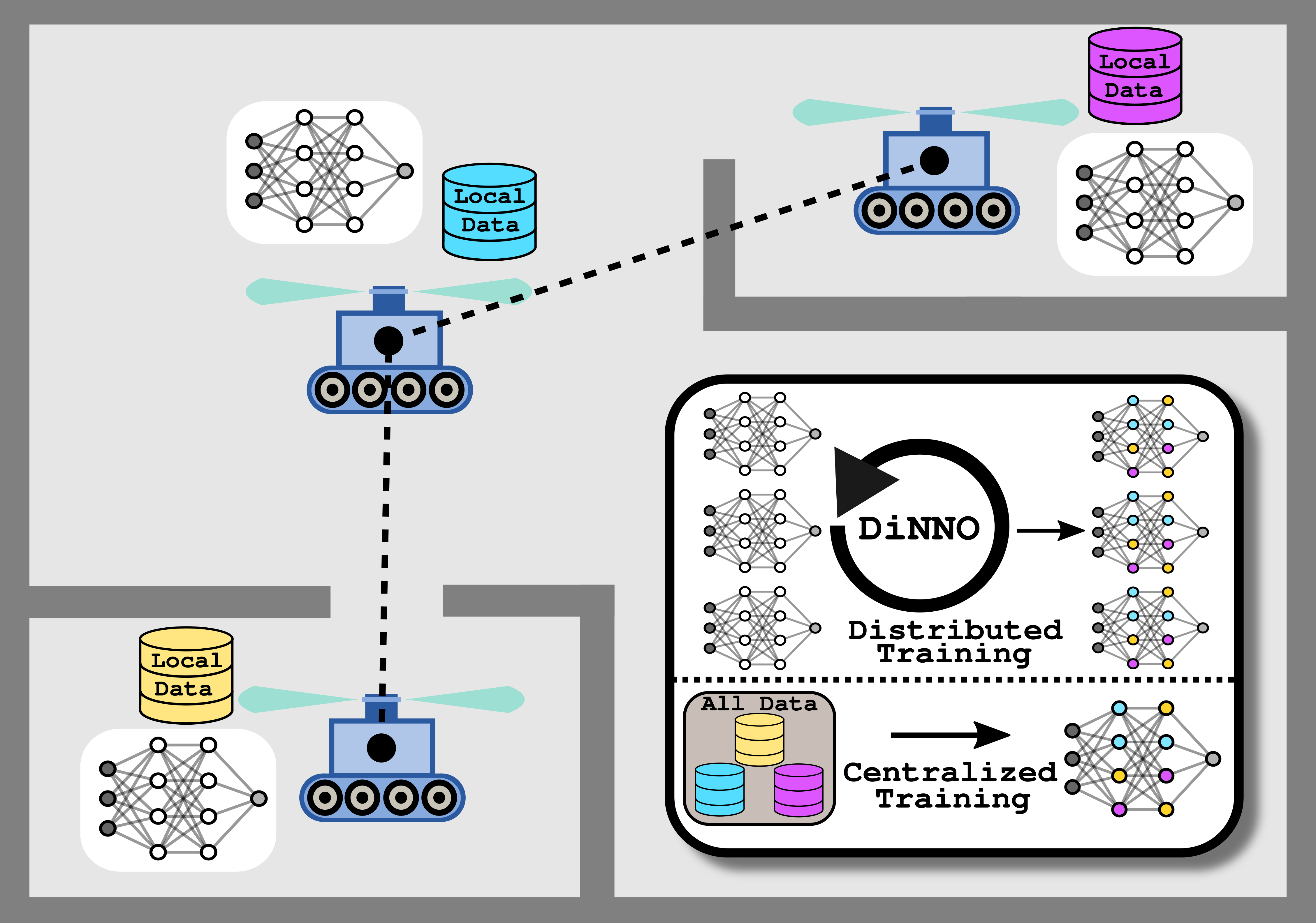

The Stanford innovation provides a scalable self-attention mechanism for graph data. It diffuses attention scores from neighboring nodes to non-neighboring nodes, gaining much of the expressiveness of full self-attention while remaining scalable.

In the distributed setting, we study and analyze synchronous and asynchronous weight update algorithms (such as parallel SGD, ADMM, and Downpour SGD). For each algorithm, we derive the worst-case asymptotic communication cost and computation time.

ROLAND, an experimental model developed in 2022, aims to provide a flexible learning framework for dynamic graphs by making it possible to repurpose any static GNN for dynamic graphs.

A new paradigm for how we define neural networks raises practical questions. For example, how can we effectively parallelize irregular data of potentially varying size? Considerations include:

- sparse vs. dense graphs;
- many small graphs vs. a single giant graph;
- graph-level tasks, clustering, pre-training, and self-supervision;
- message-passing layers such as SAGEConv, GCNConv, GATConv, GINConv, and PNAConv.
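
Here is a minimal sketch of attention diffusion. It rests on assumptions the text does not state:

- One-hop attention `A` is a softmax over each node's neighbors, so rows sum to 1 and non-neighbors get 0.
- Diffusion is the truncated geometric series of `alpha * (1 - alpha)**k * A**k` for `k = 0..hops`, similar in spirit to personalized-PageRank propagation.

Because `A**k` links nodes that are `k` hops apart, non-neighbors receive non-zero attention without every pair being scored directly. The function names and the choice of weights are illustrative, not the Stanford implementation.

```python
import math
from typing import Dict, List

Matrix = List[List[float]]


def neighbor_attention(adj: Dict[int, List[int]], feats: List[List[float]]) -> Matrix:
    """One-hop attention: softmax of dot-product scores over each node's neighbors.
    Non-neighbors get weight 0 and each row sums to 1 (every node needs >= 1 neighbor)."""
    n = len(feats)
    att = [[0.0] * n for _ in range(n)]
    for i in range(n):
        scores = {j: sum(a * b for a, b in zip(feats[i], feats[j])) for j in adj[i]}
        top = max(scores.values())
        exps = {j: math.exp(s - top) for j, s in scores.items()}
        total = sum(exps.values())
        for j, e in exps.items():
            att[i][j] = e / total
    return att


def matmul(a: Matrix, b: Matrix) -> Matrix:
    """Plain dense matrix product."""
    return [[sum(a[i][k] * b[k][j] for k in range(len(b))) for j in range(len(b[0]))]
            for i in range(len(a))]


def diffuse(att: Matrix, alpha: float = 0.2, hops: int = 4) -> Matrix:
    """Truncated series sum_{k=0..hops} alpha * (1 - alpha)**k * att**k.
    Higher powers of att spread attention from neighbors to nodes k hops away."""
    n = len(att)
    power = [[1.0 if i == j else 0.0 for j in range(n)] for i in range(n)]  # att**0 = I
    out = [[0.0] * n for _ in range(n)]
    for k in range(hops + 1):
        coef = alpha * (1 - alpha) ** k
        for i in range(n):
            for j in range(n):
                out[i][j] += coef * power[i][j]
        power = matmul(power, att)
    return out
```

Take the path graph 0-1-2 as an example. Nodes 0 and 2 are not neighbors, so `neighbor_attention` gives them weight 0. After `diffuse`, their weight is positive, contributed by the two-hop term. Each row of the diffused matrix sums to `1 - (1 - alpha)**(hops + 1)`, because every power of a row-stochastic matrix is itself row-stochastic.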

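PyTorch Geometric handles the parallelization question above with a standard approach. It stacks a mini-batch of graphs of different sizes into one large, disconnected graph, so the batch adjacency is block-diagonal:

- node features are concatenated;
- each graph's edge indices are shifted by that graph's node offset;
- a `batch` vector records which graph each node came from.

The stdlib sketch below mimics that idea with scalar node features. The names `collate` and `mean_pool` and the dict layout are hypothetical and are not PyG's API; in PyG, its `DataLoader` performs this batching.

```python
from typing import Dict, List, Tuple

Graph = Dict[str, list]  # {"x": scalar node features, "edges": list of (src, dst) pairs}


def collate(graphs: List[Graph]) -> Tuple[List[float], List[Tuple[int, int]], List[int]]:
    """Merge variable-size graphs into one disconnected graph.
    Returns concatenated features, edges shifted by each graph's node offset,
    and a per-node vector recording which graph each node came from."""
    xs: List[float] = []
    edges: List[Tuple[int, int]] = []
    batch: List[int] = []
    offset = 0
    for gid, g in enumerate(graphs):
        xs.extend(g["x"])
        edges.extend((s + offset, d + offset) for s, d in g["edges"])
        batch.extend([gid] * len(g["x"]))
        offset += len(g["x"])
    return xs, edges, batch


def mean_pool(xs: List[float], batch: List[int], num_graphs: int) -> List[float]:
    """Graph-level readout: average the node values of each graph via the batch vector."""
    sums = [0.0] * num_graphs
    counts = [0] * num_graphs
    for value, gid in zip(xs, batch):
        sums[gid] += value
        counts[gid] += 1
    return [s / c for s, c in zip(sums, counts)]
```

Consider collating a 2-node graph and a 3-node graph. The result has edges `[(0, 1), (1, 0), (2, 3), (3, 4)]` and batch vector `[0, 0, 1, 1, 1]`. Because no edge crosses between the blocks, one message-passing pass over the merged graph processes every graph in the batch at once.
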
A deep reinforcement learning approach to chip floorplanning generates, in under six hours, chip layouts that match or exceed human-designed layouts in power, performance, and area.

A survey on the evolution of distributed systems for graph neural networks analyzes three major challenges in distributed GNN training: massive feature communication, loss of model accuracy, and workload imbalance.

In GraphGym, users can easily add new design dimensions, such as new types of GNN layers or new connectivity patterns across layers. The appendix gives an example that uses attention as a new intra-layer design dimension.

The DistDGL system builds on DGL and PyTorch, combining their strengths for efficient distributed graph computation. This hybrid architecture supports both sparse and dense tensor operations, which are essential for modern graph neural network training.
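
The sketch below shows why feature communication and workload imbalance arise. It assigns nodes to workers and simulates one round of neighbor aggregation. For each worker, it counts how many distinct remote node features must be fetched and how many edges the worker processes. The graph, the partition, and the `partition_stats` function are hypothetical. Real systems rely on smarter partitioners (for example, min-edge-cut methods such as METIS) and on feature caching to shrink these numbers.

```python
from collections import defaultdict
from typing import Dict, List, Set, Tuple


def partition_stats(
    edges: List[Tuple[int, int]], owner: Dict[int, int]
) -> Tuple[Dict[int, int], Dict[int, int]]:
    """For one aggregation step in which each destination node gathers features
    from its source neighbors, return per worker:
      - remote: distinct source features owned by another worker (must be fetched)
      - load: number of edges the worker aggregates over (in-edges of its nodes)."""
    remote: Dict[int, Set[int]] = defaultdict(set)
    load: Dict[int, int] = defaultdict(int)
    for src, dst in edges:
        worker = owner[dst]          # the owner of dst performs the aggregation
        load[worker] += 1
        if owner[src] != worker:
            remote[worker].add(src)  # src's feature must cross the network
    workers = sorted(set(owner.values()))
    return {w: len(remote[w]) for w in workers}, {w: load[w] for w in workers}
```

Consider a hub node 0 linked to nodes 1 to 4, plus an edge 1-2, with both directions listed. Place nodes {0, 1} on worker 0 and {2, 3, 4} on worker 1. Worker 0 then fetches 3 remote features and processes 6 edges. Worker 1 fetches 2 remote features and processes 4 edges. High-degree hubs concentrate both communication and work on whichever worker owns them.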

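The idea of adding a design dimension can be illustrated with a small registry. Each dimension maps to its candidate values, and the design space is their Cartesian product. This is a hypothetical stand-in, not GraphGym's actual configuration system or registration API. The dimension names and values below are made up for illustration, and `intra_layer_attention` plays the role of the attention example mentioned above.

```python
import itertools
from typing import Dict, Iterator, List

# Hypothetical design space: dimension name -> candidate values.
DESIGN_SPACE: Dict[str, List[object]] = {
    "layer_type": ["gcnconv", "sageconv", "ginconv"],
    "num_layers": [2, 4],
    "skip_connection": ["none", "sum"],
}


def register_dimension(name: str, values: List[object]) -> None:
    """Add a new design dimension; refuse to silently overwrite an existing one."""
    if name in DESIGN_SPACE:
        raise ValueError(f"dimension {name!r} already registered")
    DESIGN_SPACE[name] = list(values)


def enumerate_designs() -> Iterator[Dict[str, object]]:
    """Yield every combination of values across all registered dimensions."""
    names = list(DESIGN_SPACE)
    for combo in itertools.product(*(DESIGN_SPACE[n] for n in names)):
        yield dict(zip(names, combo))
```

The base space has 3 × 2 × 2 = 12 designs. After `register_dimension("intra_layer_attention", [False, True])`, it grows to 24, since a new dimension multiplies the size of the space. This multiplicative growth is why systematic design-space studies typically sample from the space rather than train every configuration.
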