LLM System and Hardware Requirements: Running Large Language Models Locally

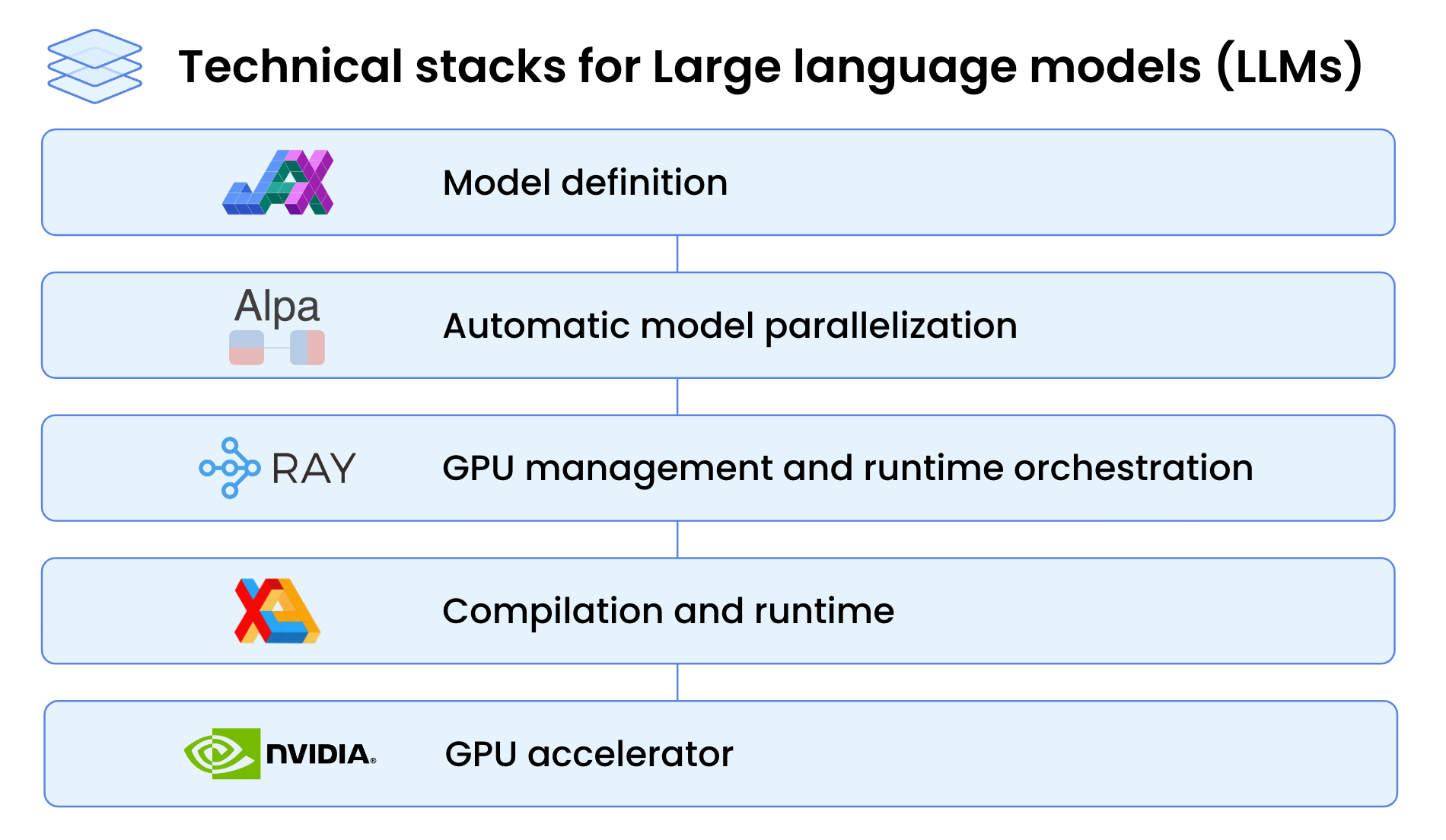

To run LLMs locally, your hardware setup should focus on a powerful GPU with sufficient VRAM, ample system RAM, and fast storage. While consumer-grade hardware can handle inference and light fine-tuning, large-scale training and fine-tuning demand enterprise-grade GPUs and CPUs. Apple silicon's unified memory architecture, in which the CPU and GPU share a single high-bandwidth memory pool, can be particularly beneficial for running large language models. For instance, the M2 Max chip supports up to 400 GB/s of memory bandwidth, while the M2 Ultra doubles this to 800 GB/s.
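Memory bandwidth matters because generating each token typically requires reading every model weight from memory once, so for a single request, bandwidth divided by the size of the weights gives a rough upper bound on decode speed. The sketch below applies that rule of thumb; the helper name and the example model (7B parameters quantized to 4 bits) are illustrative assumptions, and real throughput will be lower because of compute limits, KV-cache reads, and software overhead.

```python
def max_tokens_per_second(bandwidth_gb_s: float, params_billion: float, bits_per_weight: float) -> float:
    """Rough upper bound on single-stream decode speed (hypothetical helper).

    Assumes each generated token streams all weights from memory once and
    ignores KV-cache traffic, compute limits, and batching.
    """
    # Size of the weights in GB: billions of parameters * bytes per parameter
    weight_gb = params_billion * bits_per_weight / 8
    return bandwidth_gb_s / weight_gb


for chip, bandwidth in [("M2 Max", 400), ("M2 Ultra", 800)]:
    # Illustrative model: 7B parameters at 4 bits, roughly 3.5 GB of weights
    print(f"{chip}: <= {max_tokens_per_second(bandwidth, 7, 4):.0f} tokens/s")
```

Under these assumptions the loop prints an upper bound of about 114 tokens/s for the M2 Max and 229 tokens/s for the M2 Ultra; doubling bandwidth doubles the ceiling, which is why bandwidth figures are worth comparing.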

In this blog post, we explore the hardware requirements for running LLMs locally and break down what you need to deploy and use them effectively. Large language models are computationally intensive, often requiring a combination of high memory bandwidth, sufficient RAM, and powerful GPUs. Your PC or laptop's CPU, GPU, and RAM specs determine whether it can run popular open-source models such as Llama, Mistral, and Gemma locally, and which quantization level you should choose. Local deployments offer advantages including privacy, speed, offline access, cost efficiency, and customization, but organizations need the right tools and infrastructure to succeed. Several frameworks are available for setting up and running LLMs locally, each with its own strengths and optimization techniques.
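A minimal sketch of how such a check could work, assuming model weights dominate memory use: estimate the footprint at each quantization level, prefer the highest precision that fits in VRAM, and fall back to system RAM (CPU inference or offloading) only if nothing fits on the GPU. The level names, bits-per-weight values, and 20% overhead factor are assumptions for illustration, not the logic of any particular tool.

```python
from typing import Optional, Tuple

# Hypothetical quantization levels, highest precision first: (label, bits per weight).
# Real formats often have slightly different effective sizes.
QUANT_LEVELS = [("FP16", 16), ("8-bit", 8), ("5-bit", 5), ("4-bit", 4), ("3-bit", 3)]
OVERHEAD = 1.2  # assumed ~20% extra for KV cache, activations, and runtime buffers


def footprint_gb(params_billion: float, bits: float) -> float:
    """Approximate memory needed to load and run the model, in GB."""
    return params_billion * bits / 8 * OVERHEAD


def recommend_quant(params_billion: float, vram_gb: float, ram_gb: float) -> Optional[Tuple[str, str]]:
    """Return (quantization label, device) for the best fit, or None if nothing fits.

    The GPU is tried first at every precision before falling back to system RAM,
    on the assumption that GPU inference at lower precision beats CPU inference.
    """
    for device, capacity in (("GPU", vram_gb), ("CPU/RAM", ram_gb)):
        for label, bits in QUANT_LEVELS:
            if footprint_gb(params_billion, bits) <= capacity:
                return label, device
    return None
```

For example, `recommend_quant(8, vram_gb=8, ram_gb=32)` returns `('5-bit', 'GPU')` under these assumptions, while a 70B model on a machine with 24 GB of VRAM and 64 GB of RAM falls back to `('5-bit', 'CPU/RAM')`.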

Unlock the potential of large language models on your laptop: this guide explains how to deploy an LLM locally without high-end hardware. In the wake of ChatGPT's debut, the AI landscape has undergone a seismic shift.

System RAM recommendations:

- Minimum: 32 GB DDR5, suitable for small-scale AI models and lightweight applications.
- Recommended: 64 GB DDR5, ideal for running mid-sized models and fine-tuning.
- High end: 128 GB DDR5 ECC, needed for training large-scale LLMs locally.

Why more RAM? It holds large datasets and temporary files during AI processing.

Choosing the right hardware is critical for a smooth LLM experience, and the GPU is the most important component: the amount of VRAM (video RAM) on the GPU dictates the maximum size of the model you can load.

One key trend that has emerged is running LLMs on local devices, which brings three primary advantages: privacy, savings, and offline capabilities. There are several user-friendly methods for deploying large language models on Windows, macOS, and Linux, ranging from GUI-based tools to command-line interfaces.
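To make the VRAM rule concrete, the largest model a GPU can load can be estimated by dividing usable VRAM by the bytes each parameter occupies at a given precision. The sketch below assumes weights plus a flat 20% overhead for the KV cache and runtime buffers; that overhead figure and the helper name are assumptions, and long context windows can need considerably more memory.

```python
def max_params_billion(vram_gb: float, bits_per_weight: float, overhead: float = 1.2) -> float:
    """Largest model, in billions of parameters, that fits in `vram_gb` (hypothetical helper).

    Bytes per parameter = bits_per_weight / 8; `overhead` is an assumed multiplier
    covering KV cache, activations, and framework buffers.
    """
    bytes_per_param = bits_per_weight / 8
    return vram_gb / (bytes_per_param * overhead)


for vram in (8, 12, 24):
    fp16 = max_params_billion(vram, 16)
    q4 = max_params_billion(vram, 4)
    print(f"{vram} GB VRAM: ~{fp16:.1f}B params at FP16, ~{q4:.1f}B at 4-bit")
```

On a 24 GB card this estimate gives roughly 10B parameters at FP16 or 40B at 4-bit, which illustrates why quantization matters so much on consumer GPUs.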