Lecture 1: Introduction to Information Theory

Lecture 1 of the course on information theory, pattern recognition, and neural networks was produced by David MacKay (University of Cambridge). This chapter introduces some of the basic concepts of information theory, as well as the definitions and notation for probabilities that are used throughout the book. The notion of entropy, which is fundamental to the whole topic of the book, is introduced here.
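To make the notion of entropy concrete, here is a minimal Python sketch of the standard definition H(X) = Σ p(x) log2(1/p(x)), assuming a discrete distribution supplied as a list of probabilities. The name `entropy_bits` is hypothetical and not taken from the course materials.

```python
import math
from typing import Sequence


def entropy_bits(probs: Sequence[float]) -> float:
    """Shannon entropy H(X) = sum_x p(x) * log2(1 / p(x)), measured in bits.

    Zero-probability outcomes are skipped (the usual convention 0 * log 0 = 0).
    """
    if any(p < 0 for p in probs):
        raise ValueError("probabilities must be non-negative")
    total = sum(probs)
    if not math.isclose(total, 1.0, rel_tol=1e-9):
        raise ValueError(f"probabilities must sum to 1, got {total}")
    return sum(p * math.log2(1.0 / p) for p in probs if p > 0)


if __name__ == "__main__":
    print(entropy_bits([0.5, 0.5]))   # fair coin
    print(entropy_bits([0.9, 0.1]))   # biased coin: less uncertain, fewer bits
    print(entropy_bits([0.25] * 4))   # fair four-sided die
    print(entropy_bits([1.0]))        # a certain outcome carries no information
```

Skipping zero-probability outcomes avoids evaluating log2(1/0); a distribution concentrated on a single outcome therefore has entropy 0.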

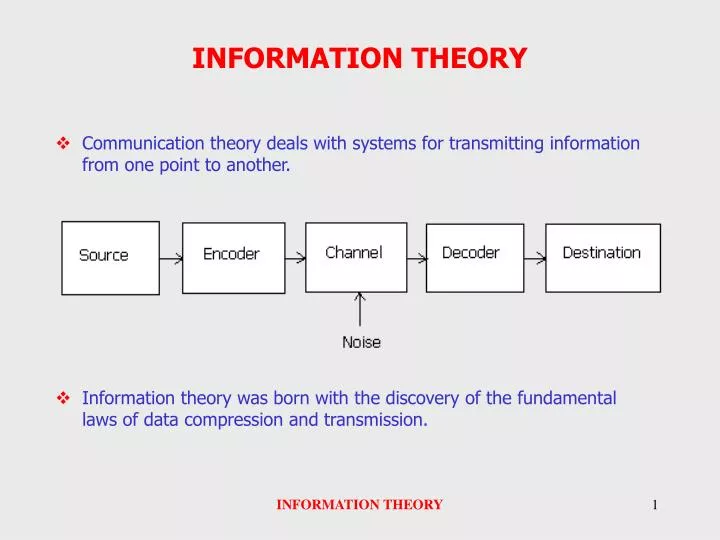

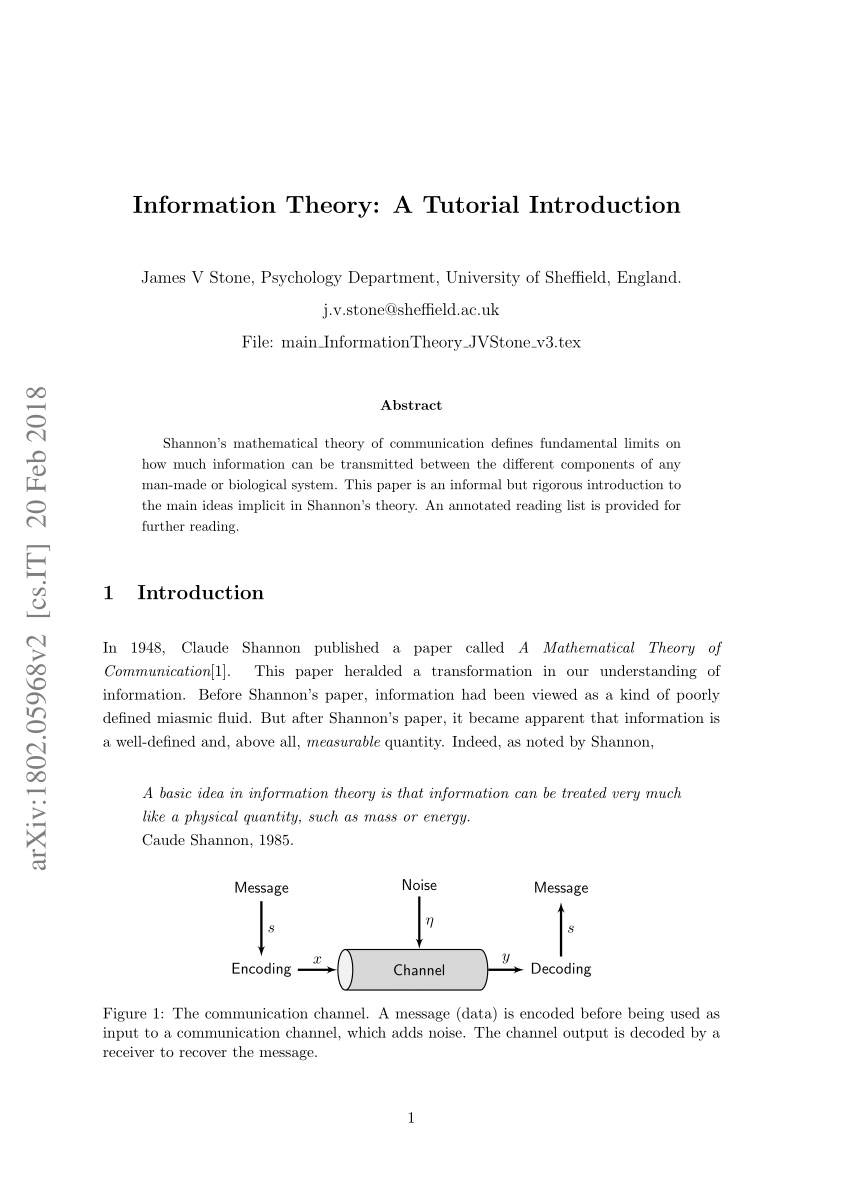

The lecture notes follow Chapter 1 of MacKay's textbook (Cambridge University Press, 640 pages, about 35 pounds), which is also available free online at inference.phy.cam.ac.uk/mackay/itila.

Information theory is not only about communication and storage. The course covers the basics of information theory and some of its applications. Only discrete probabilities are needed, so everything reduces to enumeration, with no Lebesgue integration; in particular, no heavy formalism is involved. How do we model a source? A noisy channel? See the programming project, or any generative AI model.

Our goals in this class are to establish an understanding of the intrinsic properties of the transmission of information, and of the relation between coding and the fundamental limits of information transmission in the context of communications.

Information is a message that is uncertain to its receivers: if we receive something that we already knew with absolute certainty, it is non-informative. Information theory is the study of the fundamental limits and potential of the representation and transmission of information. Example 1: What number am I thinking of?
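Example 1 can be sketched as a game of yes/no questions. The Python below assumes (the source does not say) that the number is an integer chosen uniformly from `range(n)`. Halving the candidate range with each question pins the number down in at most ⌈log2 n⌉ questions, which is the information content log2 n of one uniformly chosen number, rounded up to a whole question. The helper names `self_information_bits` and `questions_to_guess` are hypothetical.

```python
import math


def self_information_bits(p: float) -> float:
    """Information content log2(1/p) of an outcome that has probability p."""
    if not 0 < p <= 1:
        raise ValueError("p must be in (0, 1]")
    return math.log2(1.0 / p)


def questions_to_guess(secret: int, n: int) -> int:
    """Count yes/no questions of the form 'is it >= mid?' needed to identify
    `secret`, assumed to be an integer in range(n)."""
    if not 0 <= secret < n:
        raise ValueError("secret must lie in range(n)")
    lo, hi = 0, n  # invariant: lo <= secret < hi
    questions = 0
    while hi - lo > 1:
        mid = (lo + hi) // 2
        questions += 1  # ask: "is your number >= mid?"
        if secret >= mid:
            lo = mid
        else:
            hi = mid
    return questions


if __name__ == "__main__":
    n = 100
    worst = max(questions_to_guess(s, n) for s in range(n))
    print(worst, math.ceil(math.log2(n)))  # worst case vs. ceil(log2 n)
    print(self_information_bits(1 / n))    # bits carried by the answer
    print(self_information_bits(1.0))      # a certain message: no information
```

A message that was certain in advance (p = 1) gets log2(1/1) = 0 bits, matching the remark that already-known content is non-informative.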

From Mark Braverman's Lecture 1 notes (scribe: Mark Braverman): information theory is the study of a broad variety of topics having to do with quantifying the amount of information carried by a random variable or a collection of random variables, and reasoning about this information. It gives us tools to define and reason about …

We will start with a very short introduction to classical information theory (Shannon theory). Consider a message written with two letters, and suppose that the letter A occurs with probability p and B with probability 1 − p. How many bits of information can one extract from a long message of this kind, say one with n letters?

In information theory, Shannon's source coding theorem (or noiseless coding theorem) establishes the limits to possible data compression, and the operational meaning of the Shannon entropy.
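The two-letter question has a standard counting answer, sketched here in Python under the assumption (not stated in the source) that letters are drawn independently. A typical n-letter message contains about pn A's; there are C(n, pn) such strings, and log2 C(n, pn) ≈ n H(p) with H(p) = −p log2 p − (1 − p) log2(1 − p), so a long message carries roughly n H(p) bits. The function names are hypothetical.

```python
import math


def binary_entropy(p: float) -> float:
    """H(p) = -p log2 p - (1 - p) log2 (1 - p) in bits, with H(0) = H(1) = 0."""
    if not 0.0 <= p <= 1.0:
        raise ValueError("p must be in [0, 1]")
    if p in (0.0, 1.0):
        return 0.0
    return -p * math.log2(p) - (1 - p) * math.log2(1 - p)


def typical_bits_per_letter(n: int, p: float) -> float:
    """log2 of the number of length-n A/B strings containing exactly
    round(p * n) A's, divided by n: the bits per letter needed to index
    one of these 'typical' strings."""
    k = round(p * n)
    # math.log2 accepts arbitrarily large ints, so huge binomials are fine.
    return math.log2(math.comb(n, k)) / n


if __name__ == "__main__":
    p = 0.1
    for n in (10, 100, 1000, 10000):
        print(n, round(typical_bits_per_letter(n, p), 4), round(binary_entropy(p), 4))
```

The per-letter count approaches H(p) from below as n grows; the gap shrinks roughly like (log2 n)/n.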

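The source coding theorem can be illustrated (though not proved) with Huffman coding, a technique the text above does not mention and which is swapped in here only as an example. For one symbol drawn from a known distribution, a binary Huffman code has average codeword length L with H ≤ L < H + 1; coding longer and longer blocks of symbols pushes the per-symbol rate toward H, and no uniquely decodable code can average fewer than H bits per symbol. The function names and example distributions below are hypothetical.

```python
import heapq
import math
from itertools import count


def huffman_code_lengths(probs: dict[str, float]) -> dict[str, int]:
    """Return the codeword length of each symbol in a binary Huffman code."""
    if len(probs) == 1:
        # A lone symbol still needs one bit to be written down.
        return {sym: 1 for sym in probs}
    tiebreak = count()  # keeps heap entries comparable when probabilities tie
    heap = [(p, next(tiebreak), [sym]) for sym, p in probs.items()]
    heapq.heapify(heap)
    lengths = {sym: 0 for sym in probs}
    while len(heap) > 1:
        # Merge the two least probable subtrees.
        p1, _, syms1 = heapq.heappop(heap)
        p2, _, syms2 = heapq.heappop(heap)
        # The merge adds one bit to every codeword inside both subtrees.
        for sym in syms1 + syms2:
            lengths[sym] += 1
        heapq.heappush(heap, (p1 + p2, next(tiebreak), syms1 + syms2))
    return lengths


def entropy_bits(probs: dict[str, float]) -> float:
    """Shannon entropy of the distribution, in bits."""
    return sum(p * math.log2(1.0 / p) for p in probs.values() if p > 0)


if __name__ == "__main__":
    source = {"x": 0.7, "y": 0.2, "z": 0.1}
    lengths = huffman_code_lengths(source)
    avg = sum(source[s] * lengths[s] for s in source)
    print(lengths, avg, entropy_bits(source))  # H <= avg < H + 1
```

When every probability is a power of 1/2, Huffman codeword lengths equal log2(1/p) exactly and the average length meets the entropy bound with equality.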

