Large Language Models And The Alchemy Of Optimization Algorithms

Combining large language models (LLMs) and optimization algorithms (OAs) is crucial for solving large-scale, high-dimensional, and dynamically changing optimization problems. In this work, we propose optimization by prompting (OPRO), a simple and effective approach to leverage LLMs as optimizers, where the optimization task is described in natural language.
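The idea of describing an optimization task in natural language and letting a model propose candidates can be sketched roughly as follows. This is a minimal illustration under stated assumptions, not the OPRO authors' implementation: the names `build_meta_prompt`, `mock_llm`, and `optimize_by_prompting` are hypothetical, the toy objective is invented for the example, and `mock_llm` is a random stand-in that would be replaced by a real model call.

```python
import random
from typing import Callable, List, Tuple

History = List[Tuple[float, float]]  # (solution, score) pairs


def build_meta_prompt(task: str, history: History, top_k: int = 5) -> str:
    """Describe the task in natural language and list the best scored solutions."""
    best = sorted(history, key=lambda pair: pair[1])[:top_k]  # lower is better
    lines = [task, "Previous solutions and scores (lower is better):"]
    # List the kept solutions from worst to best, so the best one comes last.
    for x, score in reversed(best):
        lines.append(f"x={x:.4f}, score={score:.4f}")
    lines.append("Propose a new value of x with a lower score.")
    return "\n".join(lines)


def mock_llm(prompt: str, rng: random.Random) -> float:
    """Hypothetical stand-in for an LLM call (assumption, not a real API).

    It reads the best solution from the prompt and perturbs it randomly.
    """
    solution_lines = [ln for ln in prompt.splitlines() if ln.startswith("x=")]
    best_x = float(solution_lines[-1].split(",")[0][2:])
    return best_x + rng.gauss(0.0, 0.5)


def optimize_by_prompting(
    objective: Callable[[float], float],
    llm: Callable[[str, random.Random], float],
    task: str,
    x0: float,
    steps: int = 50,
    seed: int = 0,
) -> Tuple[float, float]:
    """Repeatedly prompt the proposer with scored history; return the best pair."""
    rng = random.Random(seed)
    history: History = [(x0, objective(x0))]
    for _ in range(steps):
        prompt = build_meta_prompt(task, history)
        candidate = llm(prompt, rng)
        history.append((candidate, objective(candidate)))
    return min(history, key=lambda pair: pair[1])


if __name__ == "__main__":
    best_x, best_score = optimize_by_prompting(
        objective=lambda x: (x - 3.0) ** 2,  # toy objective, illustrative only
        llm=mock_llm,
        task="Minimize (x - 3)^2 over real x.",
        x0=0.0,
    )
    print(best_x, best_score)
```

Keeping the proposer behind a plain callable means the loop itself does not depend on any particular model provider; only `mock_llm` would need to change.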

The ability of large language models (LLMs) to generate high-quality text and code has fuelled their rise in popularity. In this paper, we aim to demonstrate the potential of LLMs within the realm of optimization algorithms by integrating them into STNWeb. In a recent paper, Camilo Chacón Sartori and Christian Blum explore the intersection of LLMs and optimization algorithms, demonstrating that these models can not only understand but also improve upon complex, expert-designed code. Applying LLMs to diverse optimization tasks (Opt) is an emerging research area. This is a collection of references and papers on LLM4Opt. Optimization algorithms and LLMs enhance decision making in dynamic environments by integrating artificial intelligence with traditional techniques.

The paper by Camilo Chacón Sartori and Christian Blum is available at arxiv.org/pdf/2502.08298. Large language models (LLMs) have revolutionised natural language processing (NLP) with their impressive performance across a wide range of tasks. This paper presents a population-based optimization method based on LLMs called the Large Language Model-based Evolutionary Optimizer (LEO). We present a diverse set of benchmark test cases, spanning from elementary examples to multi-objective and high-dimensional numerical optimization problems. Optimizing the efficiency and scalability of LLMs is crucial for advancing NLP applications. This paper explores various optimization techniques for enhancing LLMs, focusing on strategies that improve computational efficiency and model scalability.

Discovering Preference Optimization Algorithms With And For Large

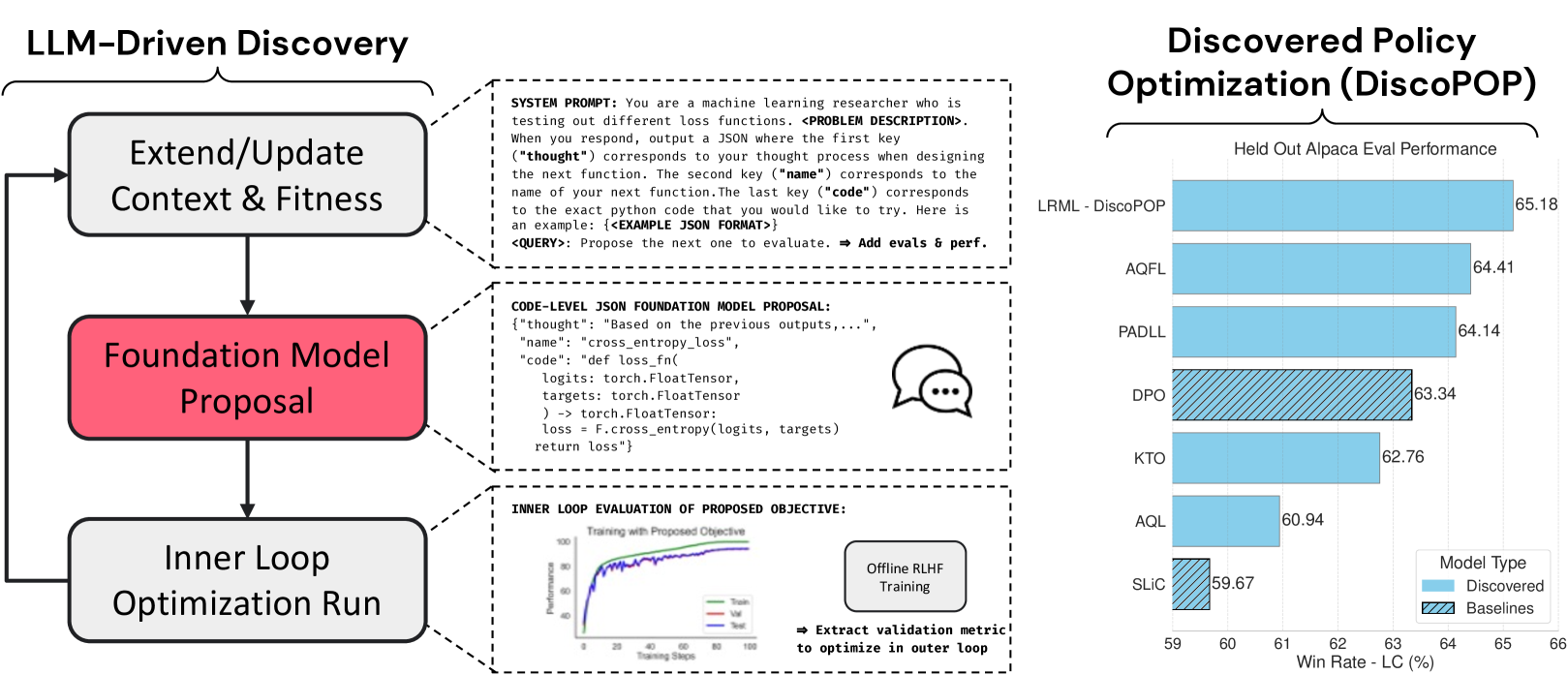

... outputs. Typically, preference optimization is approached as an offline supervised learning task using manually crafted convex loss functions. While these methods are based on theoretical insights, they are inherently constrained by human creativity, so the large search space of possible loss functions remains underexplored. We address this by performing LLM-driven objective discovery to automatically discover new state-of-the-art preference optimization algorithms without (expert) human intervention. Specifically, we iteratively prompt an LLM to propose and implement new preference optimization loss functions based on previously evaluated performance metrics. This process leads to the discovery of previously unknown and performant preference optimization algorithms. The best performing of these we call Discovered Preference Optimization (DiscoPOP), a novel algorithm that adaptively blends logistic and exponential losses. Experiments demonstrate the state-of-the-art performance of DiscoPOP and its successful transfer to held-out tasks.
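To make "adaptively blends logistic and exponential losses" concrete, here is one possible shape such a blend could take. It is a sketch under assumptions, not the published DiscoPOP definition: the sigmoid gate, the temperature `tau`, the scalar `margin` input (standing in for a preference margin between a chosen and a rejected response), and the function names are all chosen for illustration, and the paper's exact formulation may differ.

```python
import math


def sigmoid(z: float) -> float:
    """Numerically stable logistic function."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def logistic_loss(margin: float) -> float:
    """-log(sigmoid(margin)), computed as a stable softplus of -margin."""
    return max(-margin, 0.0) + math.log1p(math.exp(-abs(margin)))


def exponential_loss(margin: float) -> float:
    """exp(-margin); note this can overflow for very negative margins."""
    return math.exp(-margin)


def blended_loss(margin: float, tau: float = 0.05) -> float:
    """Blend the two losses with a margin-dependent gate (assumed form).

    The gate moves from 0 to 1 as the margin grows, so the logistic term
    dominates for negative margins and the exponential term for positive ones.
    """
    gate = sigmoid(margin / tau)
    return (1.0 - gate) * logistic_loss(margin) + gate * exponential_loss(margin)


if __name__ == "__main__":
    for m in (-1.0, 0.0, 1.0):
        print(m, blended_loss(m))
```

Because the gate depends on the margin itself, the mix between the two losses changes per example rather than being fixed by a single global weight, which is one reading of "adaptively" here.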


