Kafka Avro Console Consumer: Schema Registry URL

Lydtech Consulting: Kafka Schema Registry Avro Introduction

To produce your first record into Kafka, open another terminal window and open a second shell on the broker container. From inside that second terminal, start a console producer with the options `--topic orders-avro` and `--bootstrap-server broker:9092`, followed by further options.

This is a general property of the regular console consumer. These are the only extra options in the Avro console consumer script: apart from what is already defined in `kafka-console-consumer`, you can only provide `--formatter` or `--property schema.registry.url`, and no other Schema Registry-specific parameters (whatever those may be).
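As a rough illustration of the point above, the Python sketch below only assembles the argument list for `kafka-avro-console-consumer`; it does not contact Kafka. It uses standard console-consumer options (`--bootstrap-server`, `--topic`, `--from-beginning`) and passes the Schema Registry URL through `--property schema.registry.url=...`. The host names `broker:9092` and `http://schema-registry:8081` are assumptions for a local Docker setup, and the helper name `avro_consumer_command` is hypothetical.

```python
import shlex


def avro_consumer_command(bootstrap_server: str, topic: str, registry_url: str,
                          from_beginning: bool = True) -> list[str]:
    """Build an argv list for kafka-avro-console-consumer (hypothetical helper).

    Only regular console-consumer options are used; the Schema Registry URL
    is passed like any other formatter property, via --property.
    """
    cmd = [
        "kafka-avro-console-consumer",
        "--bootstrap-server", bootstrap_server,
        "--topic", topic,
    ]
    if from_beginning:
        # Read the topic from the earliest offset instead of only new messages
        cmd.append("--from-beginning")
    # The Schema Registry setting the Avro script accepts
    cmd += ["--property", f"schema.registry.url={registry_url}"]
    return cmd


if __name__ == "__main__":
    # Assumed endpoints for a local Docker setup
    cmd = avro_consumer_command("broker:9092", "orders-avro",
                                "http://schema-registry:8081")
    # Print a shell-safe version of the command for copy/paste
    print(shlex.join(cmd))
```

Passing the list to `subprocess.run(cmd, check=True)` would start the consumer, assuming the Confluent command-line tools are on your `PATH`. Building a list rather than a single string avoids shell quoting problems with the URL.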

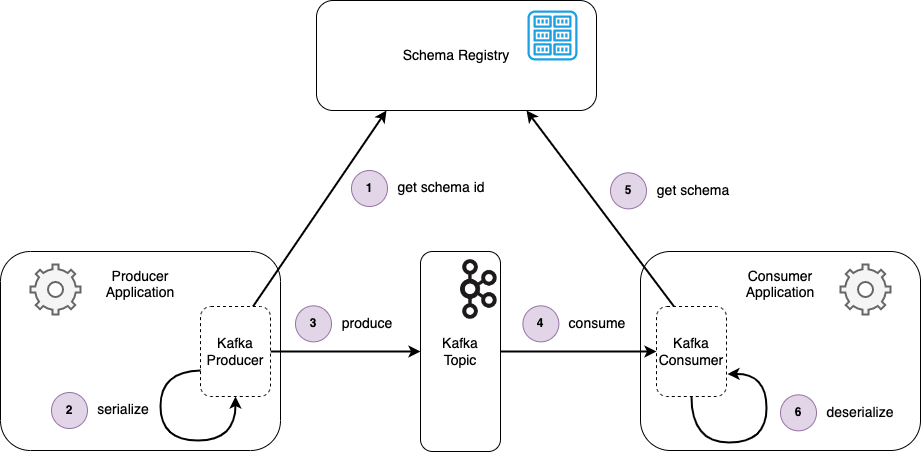

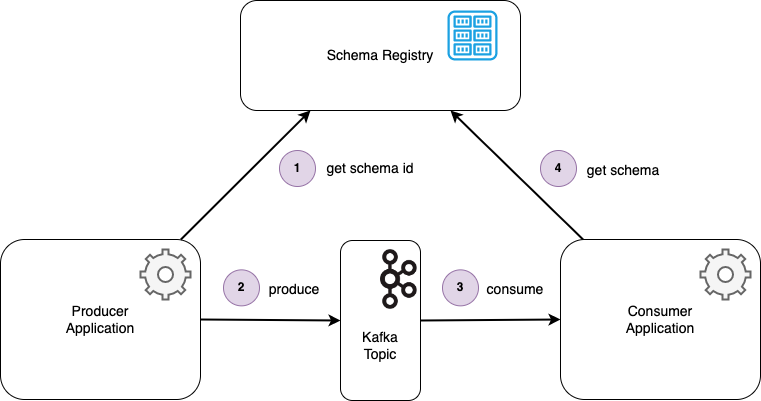

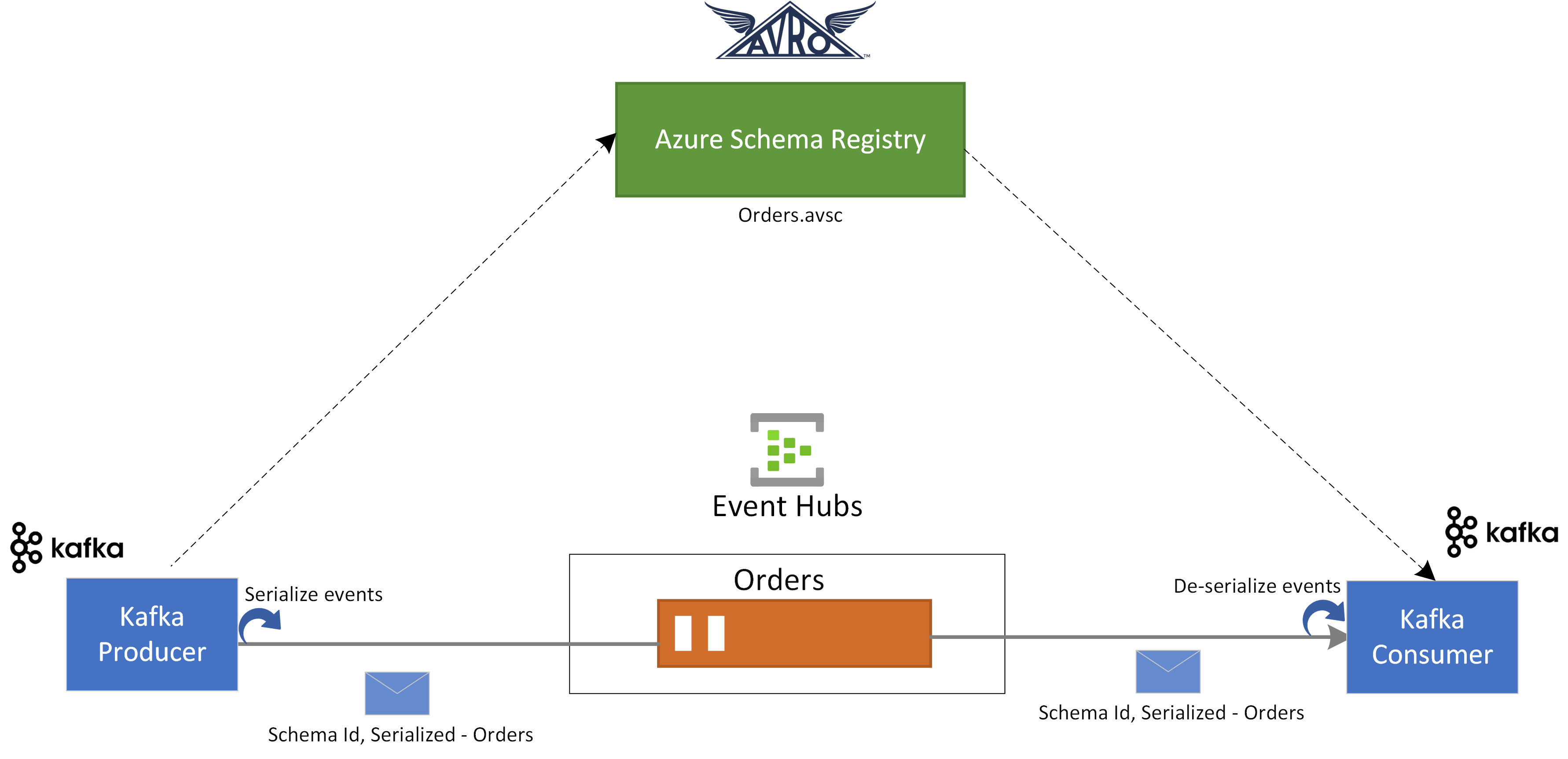

Avro serializer

You can plug `KafkaAvroSerializer` into `KafkaProducer` to send messages of Avro type to Kafka. Currently supported primitive types are null, Boolean, Integer, Long, Float, Double, String, and byte[], plus the complex type `IndexedRecord`.

You can use the `kafka-avro-console-consumer`, `kafka-protobuf-console-consumer`, and `kafka-json-schema-console-consumer` utilities to get the schema IDs for all messages on a topic, or for a specified subset of messages. This can be useful for exploring or troubleshooting schemas.

Schema Registry is the answer to this problem: it is a server that runs in your infrastructure (close to your Kafka brokers) and stores your schemas, including all their versions. When you send Avro messages to Kafka, each message contains an identifier of a schema stored in Schema Registry, and a library lets you serialize and deserialize those messages.

To produce records using Schema Registry, this tutorial assumes a local installation of Schema Registry or a Docker setup. Producing records is very similar to using the console producer that ships with Kafka, except that you use the console producer that comes with Schema Registry, starting with `kafka-avro-console-producer --topic <topic>` followed by further options.
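The schema identifier mentioned above travels inside each message. In Confluent's wire format, a serialized message starts with a magic byte `0`, followed by the schema ID as a 4-byte big-endian integer, followed by the Avro-encoded payload. The sketch below (hypothetical helper names `frame` and `unframe`) shows that layout with Python's `struct` module; it is a simplified illustration, not the serializer's own code.

```python
import struct

MAGIC_BYTE = 0  # first byte of every Schema Registry-framed message
HEADER = struct.Struct(">bi")  # big-endian: 1-byte magic, 4-byte schema ID (5 bytes total)


def frame(schema_id: int, avro_payload: bytes) -> bytes:
    """Prefix an Avro-encoded payload with the magic byte and schema ID."""
    return HEADER.pack(MAGIC_BYTE, schema_id) + avro_payload


def unframe(message: bytes) -> tuple[int, bytes]:
    """Split a framed message into (schema_id, avro_payload)."""
    if len(message) < HEADER.size:
        raise ValueError("message shorter than the 5-byte header")
    magic, schema_id = HEADER.unpack(message[:HEADER.size])
    if magic != MAGIC_BYTE:
        raise ValueError(f"unknown magic byte: {magic}")
    return schema_id, message[HEADER.size:]


if __name__ == "__main__":
    # b"\x06foo" is the Avro encoding of the string "foo" (length 3, zig-zag encoded as 6)
    msg = frame(42, b"\x06foo")
    print(msg.hex())     # 000000002a06666f6f
    print(unframe(msg))  # (42, b'\x06foo')
```

Because the header has a fixed size, reading the first five bytes is enough to recover the schema ID from a raw message without decoding the payload.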

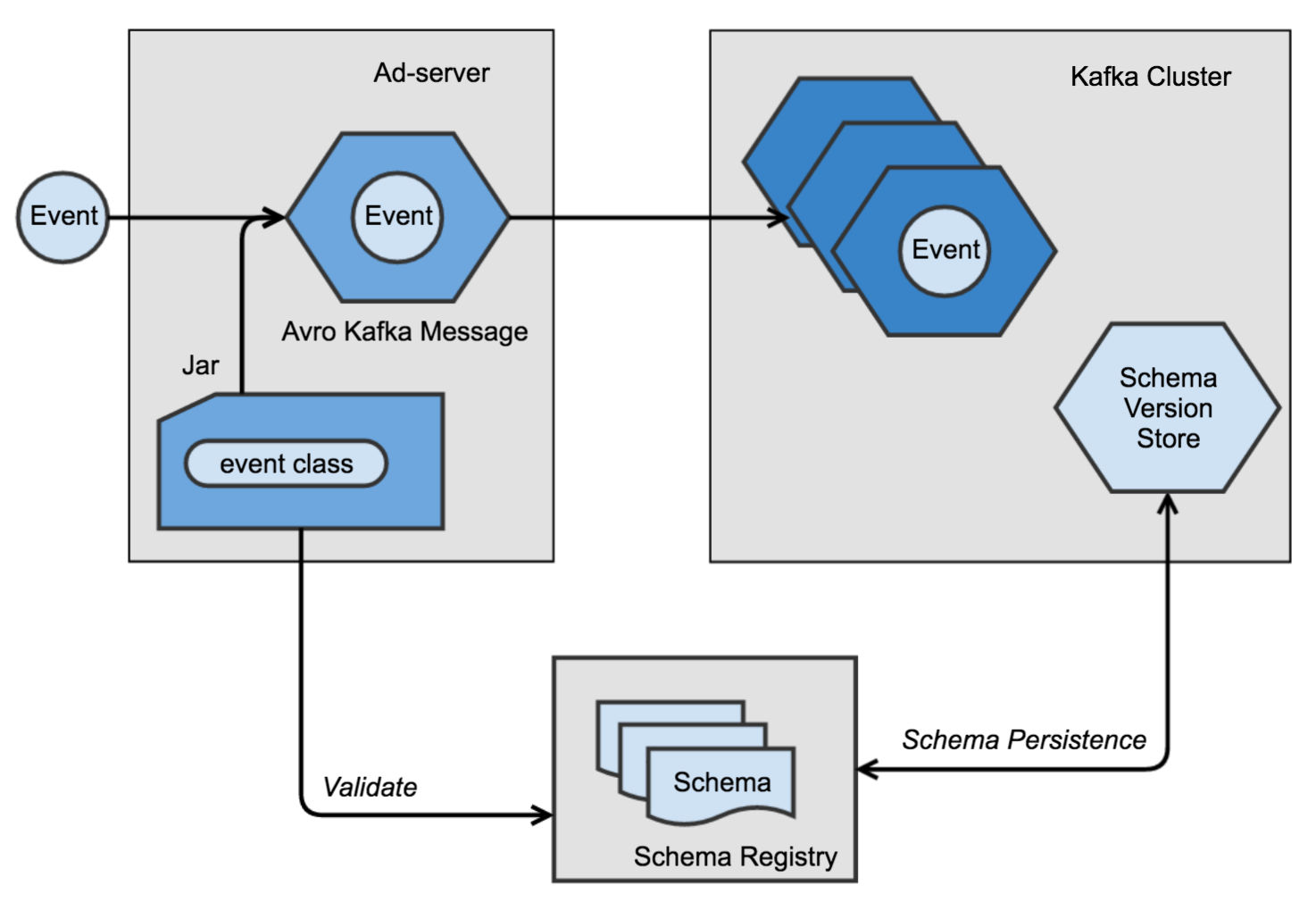
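The record body that an Avro serializer writes is ordinary Avro binary encoding. Per the Avro specification, `int` and `long` values are written with zig-zag encoding followed by a variable-length base-128 encoding, and a `string` is written as its UTF-8 byte length (encoded as a `long`) followed by those bytes. The Python sketch below covers only these two cases, so treat it as an illustration rather than a serializer; the function names are hypothetical.

```python
LONG_MIN, LONG_MAX = -(1 << 63), (1 << 63) - 1


def zigzag(n: int) -> int:
    """Map signed 64-bit ints to unsigned: 0, -1, 1, -2, 2 -> 0, 1, 2, 3, 4."""
    return (n << 1) ^ (n >> 63)


def encode_long(n: int) -> bytes:
    """Avro int/long encoding: zig-zag, then base-128 varint (low 7 bits first)."""
    if not LONG_MIN <= n <= LONG_MAX:
        raise ValueError("value outside the 64-bit long range")
    z = zigzag(n)
    out = bytearray()
    while z > 0x7F:
        out.append((z & 0x7F) | 0x80)  # high bit set: more bytes follow
        z >>= 7
    out.append(z)  # last byte has the high bit clear
    return bytes(out)


def encode_string(s: str) -> bytes:
    """Avro string encoding: UTF-8 byte length as a long, then the bytes."""
    data = s.encode("utf-8")
    return encode_long(len(data)) + data


if __name__ == "__main__":
    print(encode_long(64).hex())  # 8001
    print(encode_string("foo"))   # b'\x06foo'
```

A record is encoded as its fields concatenated in schema order, so a hypothetical `Order` record with a `long` id and a `string` product would be `encode_long(order_id) + encode_string(product)`. No field names or type tags are written, which is why the reader needs the schema to decode the bytes.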

Validate Apache Kafka Application Events with Avro (Java)

Avro is a popular data serialization format for working with Kafka. In this article, you will learn how to use the Avro serializer with Kafka consumers and producers in Java. You will also see how to use Schema Registry to manage Avro schemas and validate messages, a useful skill for building data pipelines and streaming applications with Kafka.

The Kafka Avro Serialization project provides serializers. Kafka producers and consumers that use Kafka Avro serialization handle schema management and the serialization of records using Avro and Schema Registry.
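To make "validate messages" more concrete, the sketch below checks a Python `dict` against a small Avro record schema before it would be sent. This is a hypothetical, deliberately limited validator (primitive field types only; no unions, defaults, or nested types); it is not the Avro library's or Schema Registry's own validation logic, and the `Order` schema is an invented example.

```python
import json

# Python types accepted for each Avro primitive (subset of the Avro spec)
_PRIMITIVES = {
    "null": (type(None),),
    "boolean": (bool,),
    "int": (int,),
    "long": (int,),
    "float": (int, float),
    "double": (int, float),
    "bytes": (bytes,),
    "string": (str,),
}
# Value ranges for Avro's 32-bit int and 64-bit long
_RANGES = {"int": (-2**31, 2**31 - 1), "long": (-2**63, 2**63 - 1)}

# Hypothetical schema; Avro schemas are written as JSON
ORDER_SCHEMA = json.loads("""
{
  "type": "record",
  "name": "Order",
  "fields": [
    {"name": "id", "type": "long"},
    {"name": "product", "type": "string"},
    {"name": "quantity", "type": "int"}
  ]
}
""")


def validate(record: dict, schema: dict) -> list[str]:
    """Return a list of problems; an empty list means the record matches."""
    if schema.get("type") != "record":
        raise ValueError("this sketch only handles record schemas")
    errors = []
    for field in schema["fields"]:
        name, ftype = field["name"], field["type"]
        # Complex types (dicts/lists) are unhashable and unsupported here
        if not isinstance(ftype, str) or ftype not in _PRIMITIVES:
            raise ValueError(f"unsupported field type: {ftype!r}")
        if name not in record:
            errors.append(f"missing field: {name}")
            continue
        value = record[name]
        # bool is a subclass of int in Python, so reject it explicitly
        if isinstance(value, bool) and ftype != "boolean":
            errors.append(f"{name}: expected {ftype}, got bool")
        elif not isinstance(value, _PRIMITIVES[ftype]):
            errors.append(f"{name}: expected {ftype}, got {type(value).__name__}")
        elif ftype in _RANGES and not _RANGES[ftype][0] <= value <= _RANGES[ftype][1]:
            errors.append(f"{name}: {value} out of range for {ftype}")
    known = {f["name"] for f in schema["fields"]}
    errors.extend(f"unexpected field: {n}" for n in sorted(set(record) - known))
    return errors


if __name__ == "__main__":
    print(validate({"id": 1, "product": "pen", "quantity": 3}, ORDER_SCHEMA))
    print(validate({"id": "1", "product": "pen"}, ORDER_SCHEMA))
```

Collecting every problem instead of stopping at the first one makes the result more useful when troubleshooting a rejected message.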

Creating a Data Pipeline with the Kafka Connect API (Confluent)
