Kafka And Zookeeper Multi Node Cluster Setup Using Docker

Kafka Single And Multi Node Clusters Using Docker Compose

Apache Kafka is an open-source distributed event streaming platform used by thousands of companies for high-performance data pipelines, streaming analytics, data integration, and mission-critical applications. Kafka 4.0.0 includes a significant number of new features and fixes. For more information, see the release blog post, the detailed upgrade notes, and the release notes.

Kafka abstracts away the details of files and gives a cleaner abstraction of log or event data as a stream of messages. This allows for lower-latency processing and easier support for multiple data sources and distributed data consumption. The Kafka Connect quickstart shows how to run Kafka Connect with simple connectors that import data from a file to a Kafka topic and export data from a Kafka topic to a file.

One user testimonial reads: "We use Kafka, Kafka Connect, and Kafka Streams to enable our developers to access data freely in the company. Kafka Streams powers parts of our analytics pipeline and delivers endless options to explore and operate on the data sources we have at hand."
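The file-to-topic-to-file flow described above can be pictured with a small toy model. This is only a sketch in plain Python: it does not use Kafka or Kafka Connect, and the names `file_source`, `file_sink`, and `run_pipeline` are hypothetical helpers invented for illustration. The "topic" here is just an in-memory list standing in for a stream of messages.

```python
import os
import tempfile


def file_source(path):
    """Yield each line of a text file as one message (newline stripped)."""
    with open(path, encoding="utf-8") as handle:
        for line in handle:
            yield line.rstrip("\n")


def file_sink(messages, path):
    """Write each message to a text file, one per line; return the count."""
    count = 0
    with open(path, "w", encoding="utf-8") as handle:
        for message in messages:
            handle.write(message + "\n")
            count += 1
    return count


def run_pipeline(source_path, sink_path):
    """Copy lines from a source file to a sink file via an in-memory 'topic'."""
    topic = []  # hypothetical stand-in for a Kafka topic: an append-only list
    for message in file_source(source_path):
        topic.append(message)
    return file_sink(topic, sink_path)


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as workdir:
        src = os.path.join(workdir, "input.txt")
        dst = os.path.join(workdir, "output.txt")
        with open(src, "w", encoding="utf-8") as handle:
            handle.write("first event\nsecond event\n")
        copied = run_pipeline(src, dst)
        print(f"copied {copied} messages")
```

In a real deployment the source and sink would be Kafka Connect connectors configured against a running broker, and the topic would be durable and shared between many producers and consumers rather than a local list.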

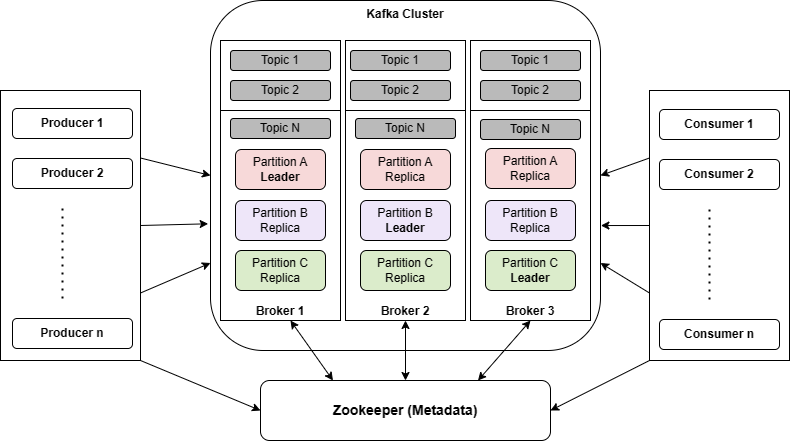

To understand how Kafka does these things, it helps to start with a few concepts. Kafka runs as a cluster on one or more servers that can span multiple datacenters. The Kafka cluster stores streams of records in categories called topics. Each record consists of a key, a value, and a timestamp.

Apache Kafka is Nussknacker's primary input and output interface in streaming use cases: Nussknacker reads events from Kafka, applies decision algorithms, and outputs actions to Kafka.

Notably, in Kafka 4.0, Kafka clients and Kafka Streams require Java 11, while Kafka brokers, Connect, and tools now require Java 17. This release also updates the minimum supported client and broker versions (KIP-896) and defines new baseline requirements for supported upgrade paths.

Kafka Streams is a client library for real-time stream processing and analysis of data stored in Kafka brokers. The Kafka Streams quickstart demonstrates how to run a streaming application written with this library.
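The idea that a topic stores a stream of records, each with a key, a value, and a timestamp, can be made concrete with a minimal in-memory model. This is a sketch under simplifying assumptions, not Kafka's client API: the `Record` and `Topic` classes are hypothetical, partitions and replication are omitted, and the offset is simply the position of a record in a Python list.

```python
import time
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass(frozen=True)
class Record:
    """One record: a key, a value, and a timestamp in milliseconds."""
    key: Optional[str]
    value: str
    timestamp_ms: int


@dataclass
class Topic:
    """A named, append-only sequence of records (single partition only)."""
    name: str
    records: List[Record] = field(default_factory=list)

    def append(self, key, value, timestamp_ms=None):
        """Append a record and return its offset (its position in the log)."""
        if timestamp_ms is None:
            # Default to the current wall-clock time in milliseconds.
            timestamp_ms = int(time.time() * 1000)
        self.records.append(Record(key, value, timestamp_ms))
        return len(self.records) - 1

    def read_from(self, offset):
        """Return all records at or after the given offset."""
        return self.records[offset:]


if __name__ == "__main__":
    views = Topic("page-views")
    views.append("user-1", "home", timestamp_ms=1000)
    views.append("user-2", "cart", timestamp_ms=2000)
    for record in views.read_from(0):
        print(record.key, record.value, record.timestamp_ms)
```

Because records are only ever appended, a consumer can resume reading by remembering the last offset it processed, which is the property the toy `read_from` method is meant to illustrate.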

