How DeepSeek Rewrote the Transformer (MLA)

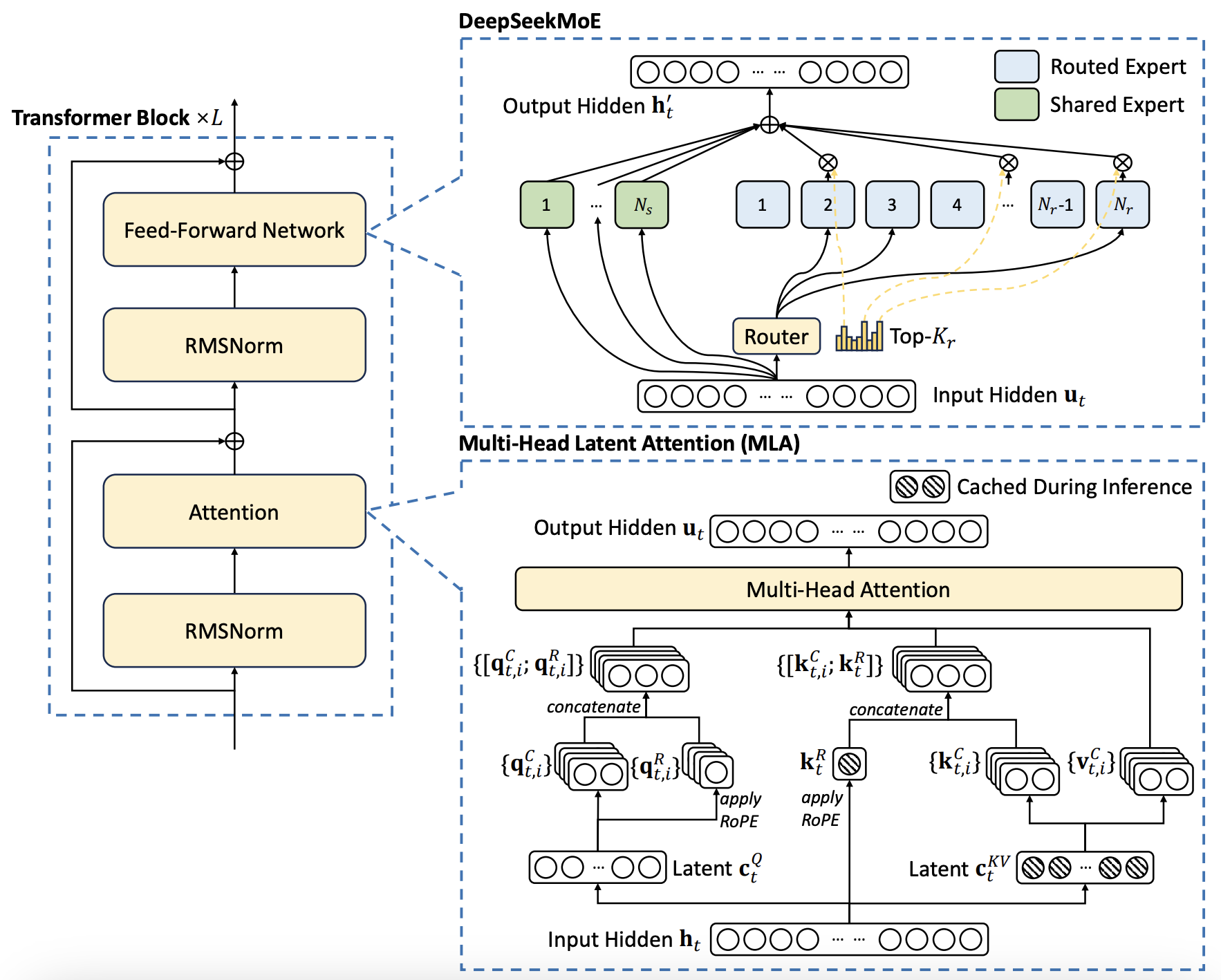

How DeepSeek Rewrote the Transformer (MLA), by Matija Grcic

Multi-head latent attention (MLA) is the most important architectural innovation in DeepSeek's models for long-context inference. The technique was first introduced in DeepSeek-V2 and is a better way to shrink the key-value (KV) cache than traditional methods such as grouped-query attention (GQA) and multi-query attention (MQA). For the DeepSeek-V3/R1 architecture, MLA reduces the per-token KV cache size by a factor of 3,997,696 / 70,272 ≈ 56.9x. The author also claims, more than once, that MLA allows DeepSeek to generate tokens more than 6x faster.
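The 56.9x figure can be reproduced with simple arithmetic. The sketch below assumes a configuration that is not stated in the source but yields exactly the two byte counts quoted above: 61 layers, a standard multi-head attention baseline with 128 heads of dimension 128, an MLA cache holding a 512-dimensional latent plus a 64-dimensional decoupled RoPE key per layer, and 2 bytes per cached value (e.g. BF16).

```python
# Per-token KV-cache size in bytes: standard multi-head attention vs. MLA.
# Assumed configuration (an assumption chosen to match the quoted figures):
# 61 layers; MHA baseline with 128 heads of dimension 128; MLA caching a
# 512-dim latent plus a 64-dim decoupled RoPE key per layer; 2 bytes per value.
BYTES_PER_VALUE = 2
N_LAYERS = 61


def mha_cache_bytes(n_heads: int, head_dim: int) -> int:
    # Each layer stores one key and one value vector per head.
    return 2 * n_heads * head_dim * N_LAYERS * BYTES_PER_VALUE


def mla_cache_bytes(latent_dim: int, rope_dim: int) -> int:
    # Each layer stores one shared latent plus one shared RoPE key.
    return (latent_dim + rope_dim) * N_LAYERS * BYTES_PER_VALUE


mha = mha_cache_bytes(n_heads=128, head_dim=128)
mla = mla_cache_bytes(latent_dim=512, rope_dim=64)
print(mha, mla, round(mha / mla, 1))  # 3997696 70272 56.9
```

The saving comes from caching one small vector per layer that is shared by all heads, instead of a separate key and value for every head.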

How DeepSeek Rewrote the Rules of AI, by Nick Potkalitsky

In the ever-evolving landscape of generative AI, one breakthrough is making waves: multi-head latent attention (MLA), a game changer that dramatically slashes the computational cost of … One of the standout innovations behind DeepSeek-R1 is MLA, a technique that enhances the core transformer architecture. MLA addresses a critical bottleneck, the size of the key-value (KV) cache, and achieves a 57-fold reduction in it. Proposed by DeepSeek, a Chinese AI lab, MLA is designed for efficient and economical inference: it compresses the bulky KV cache into a much smaller latent vector. The result is a leaner model that does not sacrifice performance.
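To make "compressing the KV cache into a latent vector" concrete, here is a minimal NumPy sketch of the idea: each token's hidden state is down-projected to a small latent vector, only that latent is cached, and per-head keys and values are reconstructed from it by up-projections. The dimensions and weight names (`W_dkv`, `W_uk`, `W_uv`) are hypothetical, and the sketch leaves out the decoupled RoPE key and other details of DeepSeek's actual implementation.

```python
import numpy as np

# Minimal sketch of MLA-style low-rank KV compression for one layer (no RoPE).
# Sizes and weight names are hypothetical, chosen only for illustration.
rng = np.random.default_rng(0)
d_model, n_heads, head_dim, d_latent = 64, 4, 16, 8

# Down-projection: hidden state -> shared latent (the only thing cached).
W_dkv = rng.standard_normal((d_model, d_latent)) / np.sqrt(d_model)
# Up-projections: latent -> keys and values for all heads.
W_uk = rng.standard_normal((d_latent, n_heads * head_dim)) / np.sqrt(d_latent)
W_uv = rng.standard_normal((d_latent, n_heads * head_dim)) / np.sqrt(d_latent)


def compress(h: np.ndarray) -> np.ndarray:
    """Map hidden states (seq, d_model) to cached latents (seq, d_latent)."""
    return h @ W_dkv


def expand(c_kv: np.ndarray) -> tuple[np.ndarray, np.ndarray]:
    """Rebuild per-head keys and values (seq, n_heads, head_dim) from latents."""
    seq = c_kv.shape[0]
    k = (c_kv @ W_uk).reshape(seq, n_heads, head_dim)
    v = (c_kv @ W_uv).reshape(seq, n_heads, head_dim)
    return k, v


h = rng.standard_normal((10, d_model))  # hidden states of 10 past tokens
cache = compress(h)                     # stored: 10 x 8 values
k, v = expand(cache)                    # recomputed on demand
print(cache.shape, k.shape, v.shape)    # (10, 8) (10, 4, 16) (10, 4, 16)
print(f"{2 * n_heads * head_dim // d_latent}x fewer cached values per token")
```

In this toy setting each token caches 8 values instead of 2 × 4 × 16 = 128, the same kind of trade the real model makes at a much larger scale.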

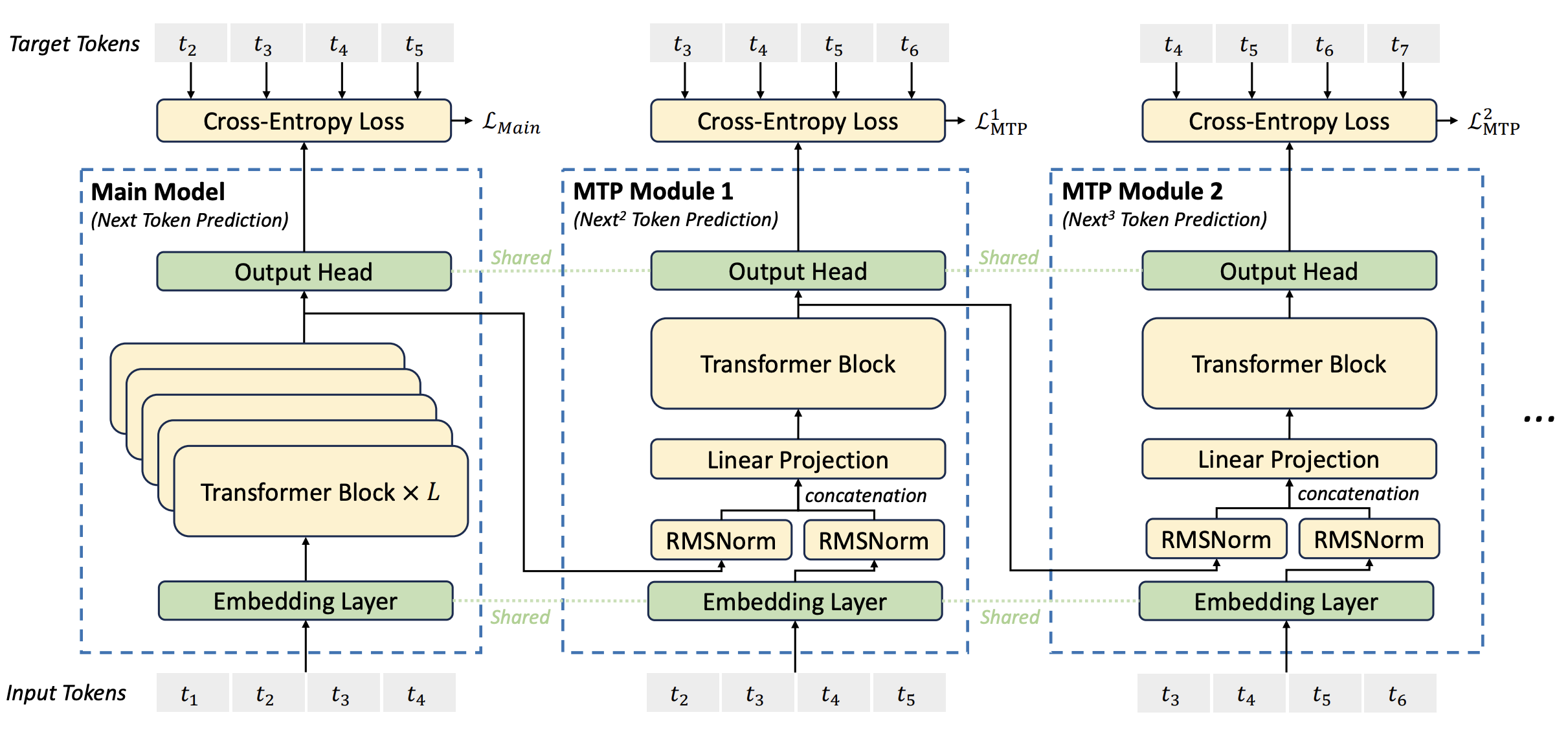

How Has DeepSeek Improved the Transformer Architecture? (Epoch AI)

The key architectural improvement highlighted in DeepSeek's technical report is MLA, which reduces the size of the KV cache compared to traditional methods. The KV cache is what makes sequential token generation efficient during inference: the key and value vectors of past tokens are cached so they do not have to be recomputed at every step. At the heart of DeepSeek-R1's efficiency, MLA is a novel modification to the transformer architecture that relieves the memory bottleneck of the KV cache and reduces computational requirements. It is a key architectural innovation in the DeepSeek-V3 model, improving inference efficiency while maintaining model quality.
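The sketch below shows why a smaller cache matters during sequential generation: at each decoding step only one new latent vector is appended, and the new token attends over all cached latents. The DeepSeek-V2 paper notes that the key up-projection can be absorbed into the query projection so keys never need to be materialized; the loop checks that equivalence numerically. It is a single-head toy with hypothetical random weights and no RoPE, not DeepSeek's implementation.

```python
import numpy as np

# Toy single-head decoding loop with an MLA-style latent KV cache (no RoPE).
# Sizes and random weights are hypothetical; this only illustrates the idea.
rng = np.random.default_rng(1)
d_model, head_dim, d_latent = 32, 16, 8
W_q = rng.standard_normal((d_model, head_dim)) / np.sqrt(d_model)
W_dkv = rng.standard_normal((d_model, d_latent)) / np.sqrt(d_model)   # hidden -> latent
W_uk = rng.standard_normal((d_latent, head_dim)) / np.sqrt(d_latent)  # latent -> key
W_uv = rng.standard_normal((d_latent, head_dim)) / np.sqrt(d_latent)  # latent -> value


def softmax(x: np.ndarray) -> np.ndarray:
    e = np.exp(x - x.max())  # subtract max for numerical stability
    return e / e.sum()


latent_cache = []  # grows by one d_latent vector per generated token
for _ in range(5):
    h = rng.standard_normal(d_model)  # stand-in for the current token's hidden state
    latent_cache.append(h @ W_dkv)    # cache the latent instead of full K and V
    C = np.stack(latent_cache)        # (tokens_so_far, d_latent)
    q = h @ W_q                       # query for the current token

    # Naive path: materialize keys from the latents, then score them.
    scores_naive = (C @ W_uk) @ q / np.sqrt(head_dim)
    # Absorbed path: map the query into latent space, then score latents directly.
    scores_absorbed = C @ (W_uk @ q) / np.sqrt(head_dim)
    assert np.allclose(scores_naive, scores_absorbed)

    # Attention output for the current token: weighted sum of values.
    out = softmax(scores_absorbed) @ (C @ W_uv)

print(len(latent_cache), C.shape, out.shape)  # 5 (5, 8) (16,)
```

The paper describes a similar rearrangement on the value side, folding the value up-projection into the output projection.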
