Hardware And Communication Video 5 Parallel Computing

This video takes students through parallel computing and how to calculate runtime using Amdahl's law, as covered in the Eduqas computer science specification. This post discusses parallel computing and the hardware architecture behind it. Note that there are two types of computing, but only parallel computing is covered here.
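As a rough illustration of the runtime calculation mentioned above, here is a minimal Python sketch of Amdahl's law. It assumes the usual textbook form, speedup = 1 / ((1 - p) + p / n), where p is the fraction of the work that can be parallelised and n is the number of processors. The function names and the sample figures (a 100-second job, 80% parallelisable, 4 processors) are hypothetical and chosen only for this example.

```python
def amdahl_speedup(parallel_fraction: float, processors: int) -> float:
    """Theoretical speedup from Amdahl's law: 1 / ((1 - p) + p / n)."""
    if not 0.0 <= parallel_fraction <= 1.0:
        raise ValueError("parallel_fraction must be between 0 and 1")
    if processors < 1:
        raise ValueError("processors must be at least 1")
    serial_fraction = 1.0 - parallel_fraction
    return 1.0 / (serial_fraction + parallel_fraction / processors)


def amdahl_runtime(serial_runtime: float, parallel_fraction: float,
                   processors: int) -> float:
    """Estimated runtime on `processors` processors, given the one-processor runtime."""
    return serial_runtime / amdahl_speedup(parallel_fraction, processors)


if __name__ == "__main__":
    # Hypothetical figures: a 100 s job, 80% parallelisable, run on 4 processors.
    speedup = amdahl_speedup(0.8, 4)
    runtime = amdahl_runtime(100.0, 0.8, 4)
    print(f"speedup: {speedup:.2f}x, runtime: {runtime:.1f} s")
```

With these sample figures the serial 20% still takes 20 seconds and the parallel 80% shrinks from 80 to 20 seconds, so the estimate is about 40 seconds (a 2.5x speedup). However many processors are added, the speedup for this job can never exceed 1 / (1 - 0.8) = 5.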

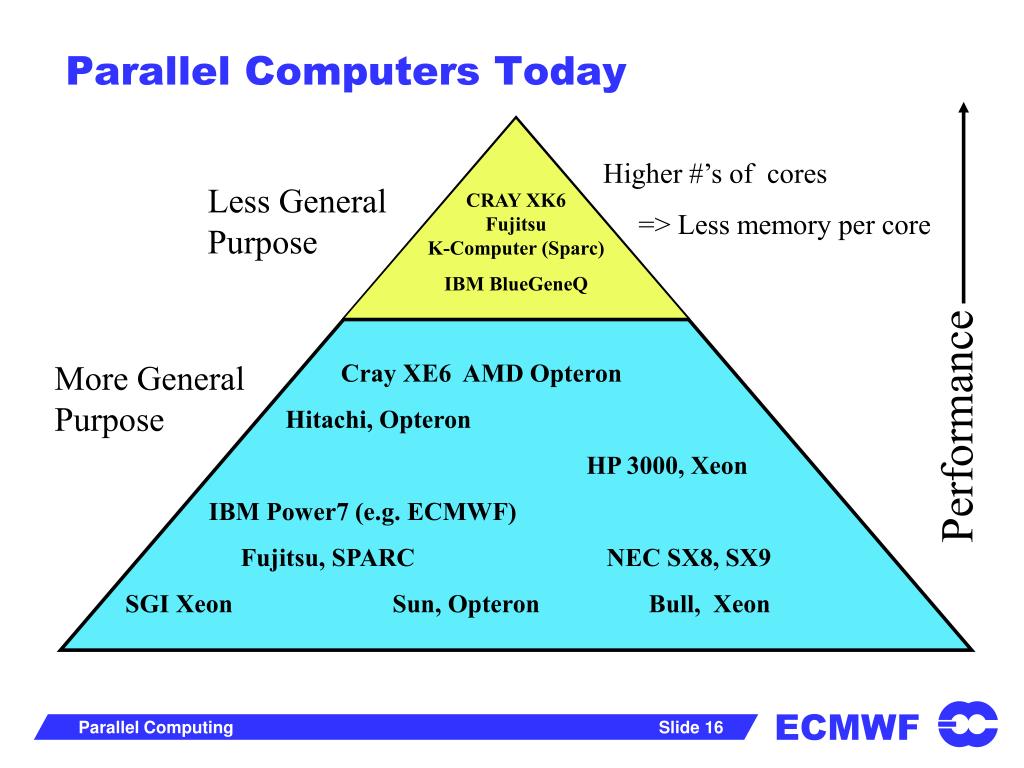

Parallel computing is a type of computation in which many calculations or processes are carried out simultaneously [1]. Large problems can often be divided into smaller ones, which can then be solved at the same time. There are several different forms of parallel computing: bit-level, instruction-level, data, and task parallelism.

This quick guide breaks down how CPUs, GPUs, and cloud services work together to process tasks. Parallel computing provides concurrency and saves time and money. Complex, large datasets and their management are often practical to handle only with a parallel approach.

Parallel languages include Coarray Fortran, UPC, and Chapel, among others. Higher-level programming languages (Python, R, MATLAB) use a combination of these approaches under the hood.
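The idea of dividing a large problem into smaller ones that are solved at the same time can be sketched in Python as a small data-parallel example. This is only an illustration under stated assumptions: the helper names `split_into_chunks` and `parallel_sum_of_squares` are hypothetical, and the standard-library `ThreadPoolExecutor` is used for simplicity. In CPython, threads do not speed up CPU-bound work because of the global interpreter lock, so a real workload would more likely use `ProcessPoolExecutor`, which offers the same `map` interface.

```python
from concurrent.futures import ThreadPoolExecutor


def split_into_chunks(values, parts):
    """Split `values` into `parts` contiguous chunks of nearly equal size."""
    size, extra = divmod(len(values), parts)
    chunks = []
    start = 0
    for i in range(parts):
        # The first `extra` chunks each take one additional element.
        end = start + size + (1 if i < extra else 0)
        chunks.append(values[start:end])
        start = end
    return chunks


def sum_of_squares(chunk):
    """The small sub-problem each worker solves independently."""
    return sum(x * x for x in chunk)


def parallel_sum_of_squares(values, workers=4):
    """Data parallelism: same operation on each chunk, then combine the partial results."""
    chunks = split_into_chunks(values, workers)
    with ThreadPoolExecutor(max_workers=workers) as pool:
        partial_sums = list(pool.map(sum_of_squares, chunks))
    return sum(partial_sums)


if __name__ == "__main__":
    print(parallel_sum_of_squares(list(range(10)), workers=3))
```

The pattern has three steps: split the data, apply the same function to every chunk concurrently, and combine the partial results. Task parallelism differs in that the workers would run different functions rather than the same one on different data.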

Parallel computing is the process of breaking a task into smaller parts that can be processed simultaneously by multiple processors. These notes explore the different ways of achieving parallelism in hardware and their impact on parallel computing performance. MPI is a standard for parallelizing C, C++, and Fortran code to run on distributed-memory (multiple compute node) systems.

Related lecture topics include why parallelism and efficiency matter, the motivation for transactions, the design space of transactional memory implementations, and implementations of STM and HTM. Parallel computing architecture involves the simultaneous execution of multiple computational tasks to enhance performance and efficiency.
