Hadoop MapReduce: Modern Big Data Processing With Hadoop

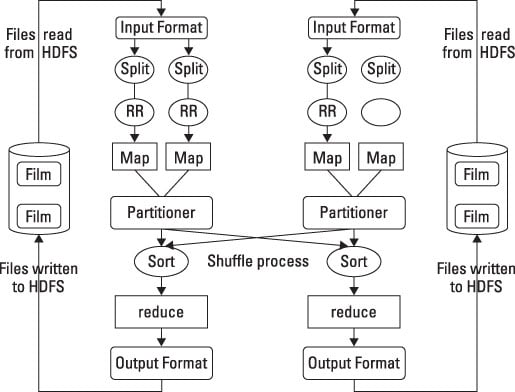

Hadoop MapReduce is a framework that makes it easier to run MapReduce operations on very large, distributed datasets. One of the advantages of Hadoop is its distributed file system, which is rack-aware and scalable. Hadoop was inspired by Google's MapReduce and Google File System (GFS) papers. It is an open source platform for storing, processing, and analyzing enormous volumes of data across distributed computing clusters.

Hadoop MapReduce is a widely used framework for big data processing, offering scalability, cost efficiency, and flexibility across industries. MapReduce is a distributed execution framework that simplifies data processing on large clusters by breaking tasks into steps that run in parallel, making it a key component of Apache Hadoop.

This book will give you a complete understanding of data lifecycle management with Hadoop, followed by modeling of structured and unstructured data in Hadoop. It will also show you how to design real-time streaming pipelines by leveraging tools such as Apache Spark, and build efficient enterprise search solutions using Elasticsearch.

You will learn to build enterprise-grade analytics solutions on Hadoop, and how to visualize your data using tools such as Apache Superset. This book also covers deployment techniques.

The Apache Hadoop platform, with the Hadoop Distributed File System (HDFS) and the MapReduce (M/R) framework at its core, allows for distributed processing of large data sets across clusters of computers using the map and reduce programming model.

The project involves ingesting data into HDFS and processing it using different components of the Hadoop ecosystem, including MapReduce, Hive, Spark, Pig, and Sqoop. We also explore data export capabilities and performance optimizations using Tez, enhancing the overall efficiency of our data processing workflows.

MapReduce is a programming paradigm that simplifies parallel processing of big data by expressing the work in terms of key-value pairs. We'll discuss everything in detail with examples in this post.
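To make the key-value idea concrete, here is a minimal sketch of a word count written in plain Python. It does not use Hadoop or any Hadoop API; the function names (`map_phase`, `shuffle_phase`, `reduce_phase`, `word_count`) are hypothetical and chosen only for illustration, and everything runs in one process rather than across a cluster. It is meant only to show the three conceptual steps: map emits key-value pairs, shuffle groups the values by key, and reduce combines each group.

```python
from collections import defaultdict
from typing import Dict, Iterable, Iterator, List, Tuple


def map_phase(line: str) -> Iterator[Tuple[str, int]]:
    """Map step: emit a (word, 1) pair for every word in one input line."""
    for word in line.lower().split():
        yield word, 1


def shuffle_phase(pairs: Iterable[Tuple[str, int]]) -> Dict[str, List[int]]:
    """Shuffle step: group all emitted values under their key."""
    grouped: Dict[str, List[int]] = defaultdict(list)
    for key, value in pairs:
        grouped[key].append(value)
    return dict(grouped)


def reduce_phase(key: str, values: List[int]) -> Tuple[str, int]:
    """Reduce step: collapse the values of one key into a single count."""
    return key, sum(values)


def word_count(lines: Iterable[str]) -> Dict[str, int]:
    """Run map, shuffle, and reduce in sequence (single process, illustrative only)."""
    mapped = (pair for line in lines for pair in map_phase(line))
    grouped = shuffle_phase(mapped)
    # Keys are sorted before reducing, loosely mirroring sorted reducer input.
    return dict(reduce_phase(key, values) for key, values in sorted(grouped.items()))


if __name__ == "__main__":
    sample = ["big data on hadoop", "hadoop runs mapreduce", "big clusters"]
    print(word_count(sample))
```

In a real cluster, the map and reduce steps would run as many parallel tasks on different machines, and the framework would handle the shuffle between them; this sketch only mirrors the data flow.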
