Hadoop MapReduce For Big Data Dummies

Hadoop MapReduce is the heart of the Hadoop system. It provides the capabilities you need to break big data into manageable chunks, process the data in parallel on your distributed cluster, and then make the processed data available for user consumption or additional processing. If you need to develop or manage big data solutions, you'll appreciate how the four authors of Big Data For Dummies define, explain, and guide you through this new and often confusing concept. You'll learn what it is, why it matters, and how to choose and implement solutions that work.
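
As a rough illustration of the chunk-and-parallelize idea, here is a minimal single-machine sketch in Python. It is not Hadoop code. The function names (`split_into_chunks`, `process_chunk`, `process_in_parallel`), the chunk size, and the per-chunk work (counting words) are all hypothetical, and a thread pool stands in for the machines of a cluster.

```python
from concurrent.futures import ThreadPoolExecutor


def split_into_chunks(records, chunk_size):
    """Yield consecutive slices of at most chunk_size records."""
    for start in range(0, len(records), chunk_size):
        yield records[start:start + chunk_size]


def process_chunk(chunk):
    """Hypothetical per-chunk work: count the words in every line of the chunk."""
    return sum(len(line.split()) for line in chunk)


def process_in_parallel(records, chunk_size=2, workers=2):
    """Split records into chunks, process them concurrently, then combine."""
    chunks = list(split_into_chunks(records, chunk_size))
    # At most `workers` chunks run at once; the rest wait in the pool's queue.
    with ThreadPoolExecutor(max_workers=workers) as pool:
        partial_results = list(pool.map(process_chunk, chunks))
    # Combine the independent partial results into a single answer.
    return len(chunks), partial_results, sum(partial_results)


lines = [
    "big data needs big tools",
    "hadoop splits the work",
    "mapreduce runs it in parallel",
    "results are combined",
    "at the end",
]
print(process_in_parallel(lines))  # (3, [9, 8, 3], 20)
```

Because each chunk is processed independently, any free worker can take the next one. `pool.map` still returns the partial results in input order, so combining them is a simple sum. Threads keep the sketch short. For CPU-heavy work in Python, `ProcessPoolExecutor` is the usual choice, and on a real cluster each chunk would typically be an HDFS block handled by a separate machine.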

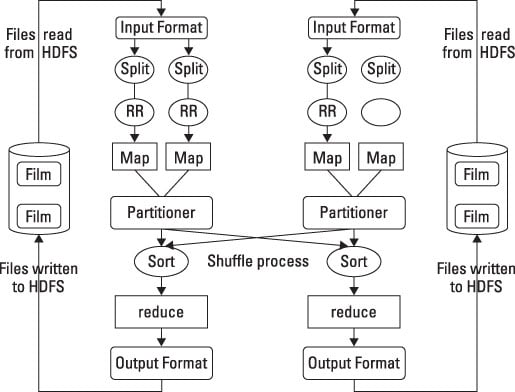

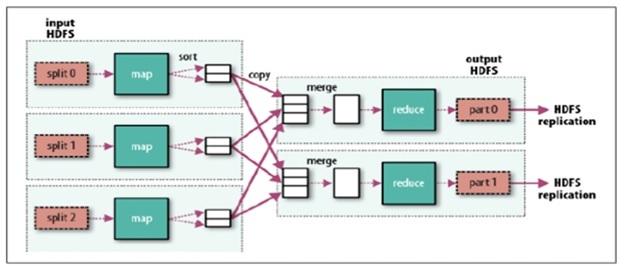

You'll learn how to use Hadoop, Hive, Pig, HBase and Spark, as well as the theoretical aspects of big data architectures. The other modules in this course cover Python programming, databases, big data vitesse and, finally, automation and deployment.

MapReduce is a programming model that allows massive scalability across hundreds or thousands of servers in a Hadoop cluster. It is the heart of Hadoop, the component that performs the processing tasks. One of the most common mistakes beginners make is not sizing the Hadoop cluster properly.

The map phase is mostly for parsing, transforming, and filtering data. Think record by record: each record is processed independently, in a shared-nothing fashion. In word count, the map phase parses each line and splits out the words. Between the two phases, the framework shuffles and sorts the map output so that all values for the same key reach the same reducer. The reduce phase is all about aggregation: counting, averaging, finding the minimum and maximum, and so on. In word count, it adds up the occurrences of each word.

The material also gives examples of how much data is created every minute from sources such as emails, social media posts, and videos, and introduces common tools for analyzing big data, such as MapReduce and Hadoop.
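
The map, shuffle, and reduce steps can be sketched on a single machine in plain Python. This is not the Hadoop API: `run_mapreduce`, the mapper and reducer functions, and the sample records are hypothetical. They show a mapper that parses records and reducers that count, average, and find the minimum and maximum. Lowercasing words in the mapper is also an assumption, since the word count described in the text does not specify it.

```python
from collections import defaultdict


def run_mapreduce(records, mapper, reducer):
    """Run map -> shuffle/sort -> reduce on one machine (illustration only)."""
    # Map phase: every record is handled on its own (shared nothing).
    intermediate = []
    for record in records:
        intermediate.extend(mapper(record))

    # Shuffle: group all values emitted for the same key.
    groups = defaultdict(list)
    for key, value in intermediate:
        groups[key].append(value)

    # Sort keys, then reduce each key's list of values to one result.
    return {key: reducer(key, groups[key]) for key in sorted(groups)}


# Word count: parse a line, split out the words, emit (word, 1) for each.
def word_count_mapper(line):
    return [(word.lower(), 1) for word in line.split()]


def word_count_reducer(word, counts):
    return sum(counts)


# Aggregation: parse hypothetical "city,temperature" records.
def temperature_mapper(line):
    city, reading = line.split(",")
    return [(city, float(reading))]


def stats_reducer(city, readings):
    return {
        "count": len(readings),
        "min": min(readings),
        "max": max(readings),
        "avg": sum(readings) / len(readings),
    }


lines = ["the quick brown fox", "the lazy dog", "The end"]
print(run_mapreduce(lines, word_count_mapper, word_count_reducer))
# 'the' is counted 3 times; every other word once

readings = ["oslo,4", "cairo,31", "oslo,-2", "cairo,35"]
print(run_mapreduce(readings, temperature_mapper, stats_reducer))
```

In Hadoop itself, the framework performs the shuffle and sort between your map and reduce functions and spreads both phases across the cluster. The sketch runs everything in one process so the data flow is easy to follow.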

MapReduce is one of those new technologies, but on its own it is just an algorithm: a recipe for making sense of all the data. To get the most from MapReduce, you need more than an algorithm; you need a collection of products and technologies designed to handle the challenges that big data presents.

### Understanding Hadoop MapReduce

Hadoop is an open source framework for the distributed processing of large data sets across clusters of computers using simple programming models. One of its key components is MapReduce, a programming model and processing engine for processing and generating large data sets with a parallel, distributed algorithm on a cluster.

Hadoop is a top-level Apache open source project. It contains several individual subprojects and has a large number of related projects, and it is implemented mostly in Java. Running work on a cluster raises practical questions: How do we assign work units to workers? What if we have more work units than workers? What if workers need to share partial results?

Hadoop, an open source software framework, uses HDFS (the Hadoop Distributed File System) and MapReduce to analyze big data on clusters of commodity hardware, that is, in a distributed computing environment.
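
One way to see how a framework can answer those three questions is a small in-process simulation. This sketch is an illustration under stated assumptions, not Hadoop's implementation. A thread pool with fewer workers than input splits hands out splits as workers free up, and map tasks share no state. Partial results are shared only by partitioning each key to exactly one reducer. `partition`, `map_task`, `reduce_task`, and `NUM_REDUCERS` are hypothetical names. Hadoop's default `HashPartitioner` follows the same idea using the key's `hashCode()` modulo the number of reduce tasks. The sketch uses `zlib.crc32` instead because Python's built-in string hash is randomized between runs.

```python
import zlib
from collections import defaultdict
from concurrent.futures import ThreadPoolExecutor

NUM_REDUCERS = 2  # hypothetical number of reduce tasks


def partition(key, num_reducers=NUM_REDUCERS):
    """Send a key to a reducer; the same key always lands on the same reducer."""
    # Stable CRC-32 hash, so the choice does not depend on which mapper emitted the key.
    return zlib.crc32(key.encode("utf-8")) % num_reducers


def map_task(split):
    """Count words in one split, bucketed by the reducer that will own each word."""
    buckets = defaultdict(lambda: defaultdict(int))
    for line in split:
        for word in line.split():
            buckets[partition(word)][word] += 1
    return buckets


def reduce_task(reducer_id, map_outputs):
    """Merge the partial counts that every map task produced for this reducer."""
    totals = defaultdict(int)
    for buckets in map_outputs:
        for word, count in buckets.get(reducer_id, {}).items():
            totals[word] += count
    return dict(totals)


# Three hypothetical input splits (work units) but only two workers.
splits = [["a b a"], ["b c"], ["a c c"]]
with ThreadPoolExecutor(max_workers=2) as pool:
    # The pool hands the next split to whichever worker becomes free.
    map_outputs = list(pool.map(map_task, splits))

results = [reduce_task(r, map_outputs) for r in range(NUM_REDUCERS)]
print(results)
```

Each map task counts words locally before anything is sent to a reducer. This plays a role similar to a Hadoop combiner: it shrinks the partial results that have to move between workers.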
