GPU vs CPU Comparison Over the Last Years CUDA Programming And

We prepared a comparison sheet of theoretical performance (number of transistors, peak performance, memory bandwidth) of CPUs and GPUs over the last 6 years. Since it was quite an effort to collect all the data, we woul…

GPUs and CPUs are intended for fundamentally different types of workloads. CPUs are typically designed for multitasking and fast serial processing, while GPUs are designed to produce high computational throughput using their massively parallel architectures.
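Theoretical peak figures of the kind collected in such a comparison sheet are usually derived from a handful of hardware parameters rather than measured. The sketch below shows the common back-of-the-envelope formulas; the function names and all device numbers are hypothetical and chosen only for illustration, not taken from the comparison sheet.

```python
def peak_gflops(cores: int, clock_ghz: float, flops_per_cycle: int) -> float:
    """Theoretical peak in GFLOP/s: cores x clock (GHz) x FLOPs per core per cycle."""
    return cores * clock_ghz * flops_per_cycle


def peak_bandwidth_gbs(bus_width_bits: int, transfer_rate_gtps: float) -> float:
    """Theoretical peak memory bandwidth in GB/s: bus width in bytes x transfer rate (GT/s)."""
    return (bus_width_bits / 8) * transfer_rate_gtps


# Hypothetical devices; these numbers are made up and describe no real product.
cpu = peak_gflops(cores=8, clock_ghz=3.0, flops_per_cycle=16)
gpu = peak_gflops(cores=2048, clock_ghz=1.5, flops_per_cycle=2)
print(f"CPU peak: {cpu:.0f} GFLOP/s, GPU peak: {gpu:.0f} GFLOP/s, ratio: {gpu / cpu:.1f}x")
```

These are upper bounds: real workloads rarely reach them, which is why theoretical comparisons are typically paired with measured benchmarks.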

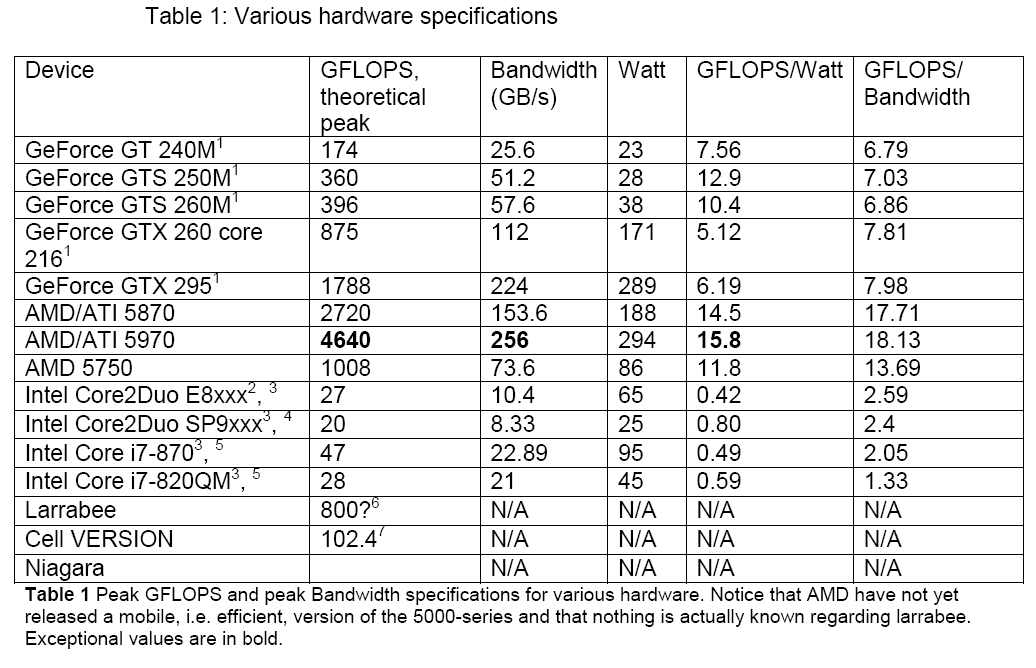

In my previous post, "CUDA 0: From OS to GPUs," I provided a brief overview of key terms in parallel computing and discussed the rise of GPUs. This article dives deeper into the technical.

CUDA serves as the connecting bridge between NVIDIA GPUs and GPU-based applications, enabling popular deep learning libraries like TensorFlow and PyTorch to leverage GPU acceleration.

Comparison of peak theoretical GFLOPS and memory bandwidth for NVIDIA GPUs and Intel CPUs over the past few years. Graphs from the NVIDIA CUDA C Programming Guide 4.0. The GPU chips are massively multithreaded, manycore SIMD processors. SIMD stands for single instruction, multiple data.

One of the key differences between CPU and GPU programming in CUDA lies in their architectural design. CPUs are designed with a few powerful cores optimized for sequential processing, while GPUs are designed with hundreds or thousands of smaller cores optimized for parallel processing.
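To make the "many small cores, one instruction, multiple data" idea more concrete, here is a minimal CPU-side emulation of how a CUDA-style kernel assigns one array element to each thread. It is only a sketch: `vector_add_kernel` and `launch` are hypothetical names, and the loops run sequentially here, whereas a GPU would execute the threads in parallel.

```python
def vector_add_kernel(block_idx, block_dim, thread_idx, a, b, out):
    """Body run by one simulated thread; it handles at most one element."""
    # Global index, mirroring CUDA's blockIdx.x * blockDim.x + threadIdx.x.
    i = block_idx * block_dim + thread_idx
    if i < len(out):  # guard: the last block may contain surplus threads
        out[i] = a[i] + b[i]


def launch(kernel, grid_dim, block_dim, *args):
    """Sequential stand-in for a kernel launch over grid_dim blocks of block_dim threads."""
    for block_idx in range(grid_dim):
        for thread_idx in range(block_dim):
            kernel(block_idx, block_dim, thread_idx, *args)


n = 10
a = list(range(n))
b = [10 * x for x in a]
out = [0] * n

block_dim = 4
grid_dim = (n + block_dim - 1) // block_dim  # ceiling division so every element is covered
launch(vector_add_kernel, grid_dim, block_dim, a, b, out)
print(out)
```

Every simulated thread executes the same function body on a different index, which is the essence of the data-parallel model; the bounds check is needed because the thread count (12 here) is rounded up past the element count (10).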

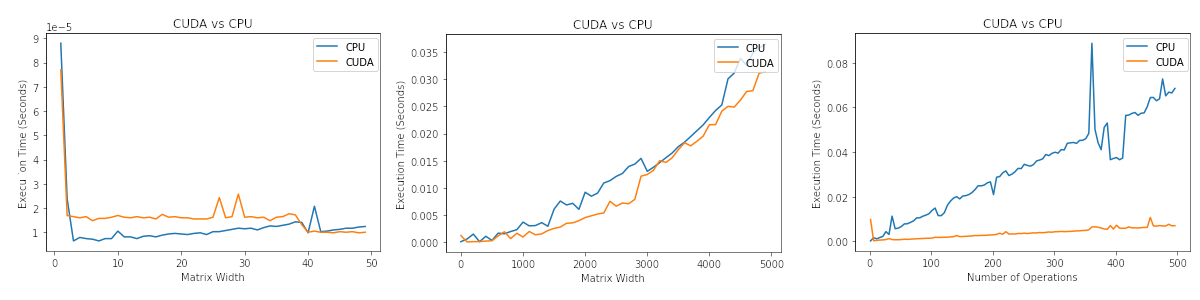

Jrtechs CUDA vs CPU Performance

It is up to date with current CPU and GPU releases, and splits the data into four curves, showing single and double precision performance separately. Actually, the beta CUDA 3.1 programming guide has a much improved version of this figure.

CPU and GPU are very different and have different programming models and programming languages. You could use C on the CPU and OpenCL (or CUDA, if you restrict yourself to NVIDIA products) on the GPU. You generally won't be able to run arbitrary C code on the GPU.

Each implementation includes a comparison with a CPU-based version of the same algorithm. The tests track metrics such as execution time for both CPU and GPU implementations, the speedup achieved by leveraging GPU parallelism, and the accuracy and correctness of the results.

Comparison of CPU and GPU single-precision floating-point performance through the years. Image taken from NVIDIA's CUDA C Programming Guide [93]. Parallel computing has become.
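The three metrics mentioned above (execution time, speedup, and correctness) can be gathered with a small harness like the following. This is a sketch under stated assumptions, not the test code of any project referenced here: `benchmark`, `compare`, and the two `saxpy_*` functions are hypothetical, and both implementations run on the CPU as stand-ins for a CPU reference and a GPU candidate.

```python
import math
import time


def benchmark(fn, *args, repeats=5):
    """Return (best wall-clock seconds, last result) over several runs."""
    best = math.inf
    result = None
    for _ in range(repeats):
        start = time.perf_counter()
        result = fn(*args)
        best = min(best, time.perf_counter() - start)
    return best, result


def compare(reference_fn, candidate_fn, *args, rel_tol=1e-6):
    """Time both implementations, compute the speedup, and check the results agree."""
    ref_time, ref_out = benchmark(reference_fn, *args)
    cand_time, cand_out = benchmark(candidate_fn, *args)
    # Floating-point results are compared with a tolerance, not exact equality.
    correct = len(ref_out) == len(cand_out) and all(
        math.isclose(r, c, rel_tol=rel_tol, abs_tol=1e-9)
        for r, c in zip(ref_out, cand_out)
    )
    speedup = ref_time / cand_time if cand_time > 0 else math.inf
    return {"reference_s": ref_time, "candidate_s": cand_time,
            "speedup": speedup, "correct": correct}


# Hypothetical stand-ins: a plain loop as the "CPU reference" and a
# comprehension as the "candidate" (a real test would call GPU code here).
def saxpy_loop(alpha, x, y):
    out = []
    for xi, yi in zip(x, y):
        out.append(alpha * xi + yi)
    return out


def saxpy_comprehension(alpha, x, y):
    return [alpha * xi + yi for xi, yi in zip(x, y)]


x = [float(i) for i in range(10_000)]
y = [2.0 * v for v in x]
report = compare(saxpy_loop, saxpy_comprehension, 0.5, x, y)
print(report)
```

When the candidate is an actual CUDA kernel, keep in mind that kernel launches are asynchronous, so the timer should only be stopped after the device has been synchronized; whether host-device transfer time is included also changes the reported speedup considerably.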

