GitHub: mahaitao999 CUDA Programming: Code for the book 《CUDA编程基础与实践》 (CUDA Programming: Fundamentals and Practice)

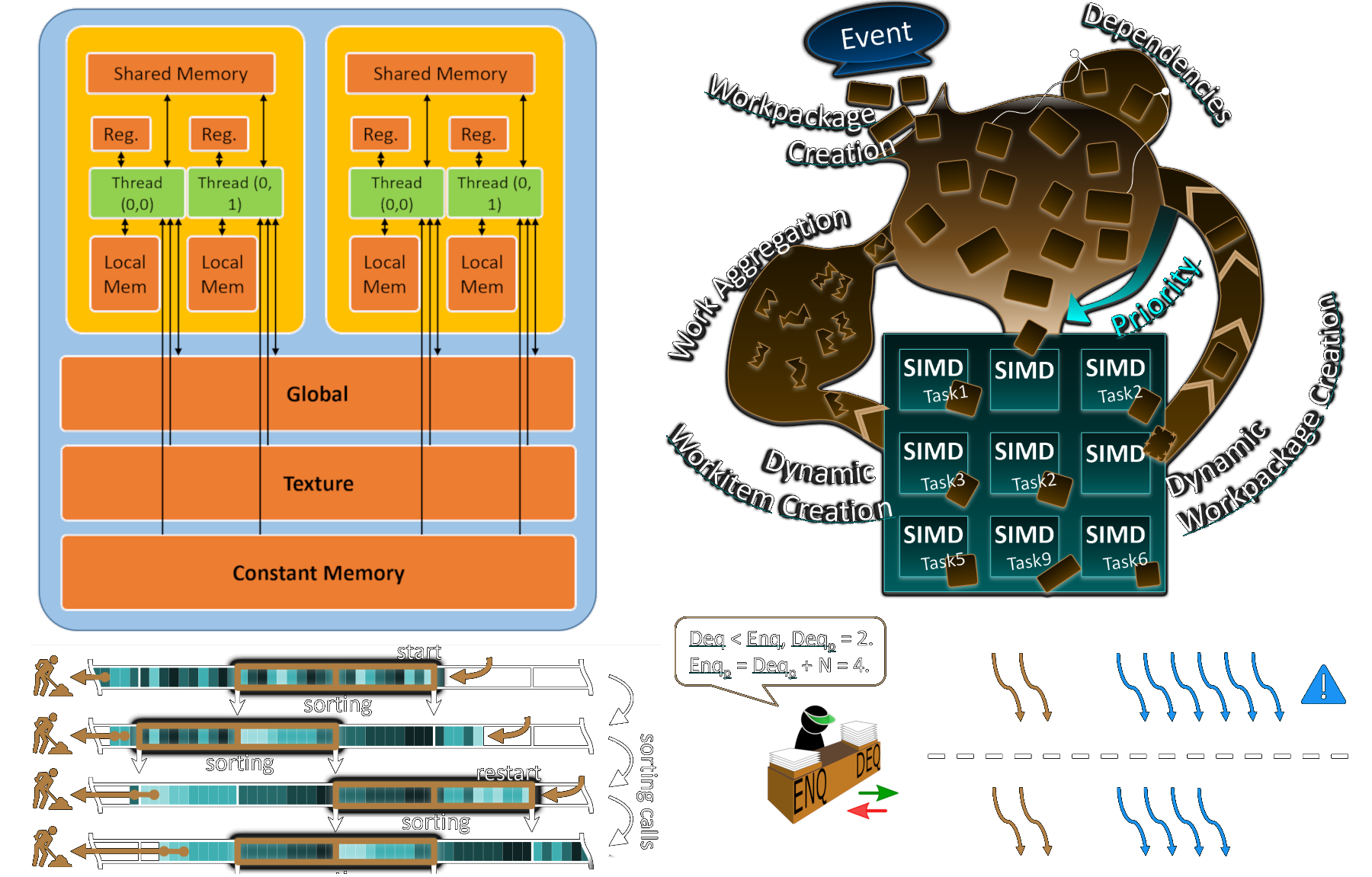

CUDA and Applications to Task-Based Programming

To give a thorough understanding of how CUDA applications can reach peak performance, the first two parts of this tutorial outline the modern CUDA architecture. After a basic introduction, they show how language features are linked to, and constrained by, the underlying physical hardware components.
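One concrete way to see those hardware constraints is to query them at runtime. The sketch below uses the CUDA runtime call `cudaGetDeviceProperties` to print a few limits that bound how kernels can be launched; the helper name `printDeviceLimits` is hypothetical, and the `assert`-based error handling is a simplification for illustration only.

```cuda
#include <cassert>
#include <cstdio>
#include <cuda_runtime.h>

// Hypothetical helper: queries device `device` and prints some of the hardware
// limits that constrain kernel launch configurations. Returns the properties.
cudaDeviceProp printDeviceLimits(int device) {
    cudaDeviceProp prop{};
    cudaError_t err = cudaGetDeviceProperties(&prop, device);
    // Sketch-level error handling; real code should report cudaGetErrorString(err).
    assert(err == cudaSuccess);
    (void)err;  // silence "unused variable" warnings when NDEBUG disables assert

    std::printf("Device %d: %s (compute capability %d.%d)\n",
                device, prop.name, prop.major, prop.minor);
    std::printf("  Streaming multiprocessors:  %d\n", prop.multiProcessorCount);
    std::printf("  Warp size:                  %d threads\n", prop.warpSize);
    std::printf("  Max threads per block:      %d\n", prop.maxThreadsPerBlock);
    std::printf("  Shared memory per block:    %zu bytes\n", prop.sharedMemPerBlock);
    std::printf("  32-bit registers per block: %d\n", prop.regsPerBlock);
    return prop;
}
```

For example, a launch whose thread block exceeds `maxThreadsPerBlock`, or whose static shared memory exceeds `sharedMemPerBlock`, fails instead of running.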

A quick and easy introduction to CUDA programming for GPUs: this post dives into CUDA C with a simple, step-by-step parallel programming example.

This page serves as a web presence for hosting up-to-date materials for the four-part tutorial "CUDA and Applications to Task-Based Programming". Here you can find code samples that complement the presented topics, as well as extended course notes, helpful links and references.
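As a minimal sketch of such a first parallel program (not the post's own code), the kernel below adds two arrays element-wise. It assumes unified memory via `cudaMallocManaged` and uses a grid-stride loop so that it stays correct for any array length; the names `add` and `runAdd` are illustrative.

```cuda
#include <cassert>
#include <cmath>
#include <cuda_runtime.h>

// Each thread handles elements index, index + stride, index + 2*stride, ...
// (grid-stride loop), so every element is covered for any launch configuration.
__global__ void add(int n, const float* x, float* y) {
    int index = blockIdx.x * blockDim.x + threadIdx.x;
    int stride = blockDim.x * gridDim.x;
    for (int i = index; i < n; i += stride) {
        y[i] = x[i] + y[i];
    }
}

// Hypothetical host helper: fills x with xVal and y with yVal, runs the kernel,
// and returns the maximum absolute error against the expected xVal + yVal.
float runAdd(int n, float xVal, float yVal) {
    assert(n > 0);  // a launch with zero blocks is an invalid configuration
    float* x = nullptr;
    float* y = nullptr;
    // Unified memory is accessible from both the host and the device.
    cudaError_t err = cudaMallocManaged(&x, n * sizeof(float));
    assert(err == cudaSuccess);
    err = cudaMallocManaged(&y, n * sizeof(float));
    assert(err == cudaSuccess);
    for (int i = 0; i < n; ++i) { x[i] = xVal; y[i] = yVal; }

    int blockSize = 256;                                 // threads per block
    int numBlocks = (n + blockSize - 1) / blockSize;     // round up to cover n
    add<<<numBlocks, blockSize>>>(n, x, y);
    err = cudaDeviceSynchronize();  // wait for the GPU before reading y on the host
    assert(err == cudaSuccess);
    (void)err;

    float maxError = 0.0f;
    for (int i = 0; i < n; ++i) {
        maxError = std::fmax(maxError, std::fabs(y[i] - (xVal + yVal)));
    }
    cudaFree(x);
    cudaFree(y);
    return maxError;
}
```

Calling `runAdd(1 << 20, 1.0f, 2.0f)` should return `0.0f`, since 1.0 + 2.0 is exact in single precision.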

GitHub: misterramon CUDA

Install the latest version of the CUDA Toolkit if allowed, since newer versions usually bring more features and bug fixes. For example, CUDA 12.5 introduces the new primitive `cub::DeviceFor` for device-side parallel for loops (related PR).

Next, the kernel is optimized with matrix tiling and shared memory. The idea: each element of the result needs one row of matrix A and one column of matrix B, so A is divided into nBlock = N / BLOCK_SIZE blocks, and B likewise. The multiplication then becomes a product of corresponding blocks, and once those are computed the block products are summed.

The key quantities in this computation are `aStep` and `bStep`. `aStep` is the offset to the next block in A; since we move along a row, it is `BLOCK_SIZE`. `bStep` is the offset to the next block in B; since we move down a column and the next block lies `BLOCK_SIZE` rows below the current one, it is `BLOCK_SIZE * N`. The thread and block indices are read as `int tx = threadIdx.x; int ty = threadIdx.y; int bx = blockIdx.x; int by = blockIdx.y;`.

In the end I managed to implement it in several ways, although there is still plenty of room for optimization. For reference, resources: GitHub 1keven1/simpledigitalrecognitionneuralnetwork (master branch).

Taichi performance test: right after writing it, a first test showed that performance had dropped rather than improved, which gave me quite a scare. Fortunately, testing the complexity of the neural network pointed to the cause. Analysis:
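To illustrate the `cub::DeviceFor` primitive mentioned above, here is a sketch using `cub::DeviceFor::Bulk`, which invokes a functor once per index in `[0, n)` without a hand-written kernel. It assumes CUDA 12.5 or newer (a CCCL release that ships `cub/device/device_for.cuh`); `SquareOp` and `squareOnDevice` are hypothetical names, and the error checks are sketch-level only.

```cuda
#include <cassert>
#include <cub/device/device_for.cuh>
#include <cuda_runtime.h>

// Functor applied to every index i in [0, n): squares the element in place.
struct SquareOp {
    int* data;
    __device__ void operator()(int i) const { data[i] *= data[i]; }
};

// Hypothetical helper: copies h_in to the device, squares each element with
// cub::DeviceFor::Bulk, and copies the result back into h_out.
void squareOnDevice(const int* h_in, int* h_out, int n) {
    int* d = nullptr;
    cudaError_t err = cudaMalloc(&d, n * sizeof(int));
    assert(err == cudaSuccess);
    err = cudaMemcpy(d, h_in, n * sizeof(int), cudaMemcpyHostToDevice);
    assert(err == cudaSuccess);

    // Device-side parallel for: SquareOp{d}(i) runs once for each i in [0, n).
    // CUB chooses the launch configuration; it runs on the default stream here.
    err = cub::DeviceFor::Bulk(n, SquareOp{d});
    assert(err == cudaSuccess);

    // This cudaMemcpy on the default stream waits for the Bulk work to finish.
    err = cudaMemcpy(h_out, d, n * sizeof(int), cudaMemcpyDeviceToHost);
    assert(err == cudaSuccess);
    (void)err;
    cudaFree(d);
}
```

Squaring `{1, 2, 3, -4}` this way should yield `{1, 4, 9, 16}`.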
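The tiled scheme described above, with `aStep = BLOCK_SIZE` and `bStep = BLOCK_SIZE * N`, can be sketched as follows. This assumes square row-major N × N matrices with N a multiple of `BLOCK_SIZE` (16 here); the names `aBegin`, `aEnd`, `bBegin`, `matMulTiled` and `matMulOnDevice` are illustrative, laid out in the style of the classic CUDA Samples `matrixMul` kernel rather than taken from the original post.

```cuda
#include <cassert>
#include <cuda_runtime.h>
#include <vector>

constexpr int BLOCK_SIZE = 16;  // tile width; assumed to divide N

// C = A * B for N x N row-major matrices. Each thread block computes one
// BLOCK_SIZE x BLOCK_SIZE tile of C; each thread computes one element.
__global__ void matMulTiled(const float* A, const float* B, float* C, int N) {
    int tx = threadIdx.x;
    int ty = threadIdx.y;
    int bx = blockIdx.x;
    int by = blockIdx.y;

    int aBegin = N * BLOCK_SIZE * by;  // first element of the first A tile for this block row
    int aEnd   = aBegin + N - 1;       // last element of that row of tiles
    int aStep  = BLOCK_SIZE;           // next A tile: move right along the row
    int bBegin = BLOCK_SIZE * bx;      // first element of the first B tile for this block column
    int bStep  = BLOCK_SIZE * N;       // next B tile: move down BLOCK_SIZE rows

    float sum = 0.0f;
    for (int a = aBegin, b = bBegin; a <= aEnd; a += aStep, b += bStep) {
        __shared__ float As[BLOCK_SIZE][BLOCK_SIZE];
        __shared__ float Bs[BLOCK_SIZE][BLOCK_SIZE];
        // Each thread loads one element of the A tile and one of the B tile.
        As[ty][tx] = A[a + N * ty + tx];
        Bs[ty][tx] = B[b + N * ty + tx];
        __syncthreads();  // both tiles fully loaded before anyone reads them
        for (int k = 0; k < BLOCK_SIZE; ++k) {
            sum += As[ty][k] * Bs[k][tx];  // partial dot product for this tile pair
        }
        __syncthreads();  // all reads done before the next tiles overwrite shared memory
    }
    C[(by * BLOCK_SIZE + ty) * N + (bx * BLOCK_SIZE + tx)] = sum;
}

// Hypothetical host helper: multiplies h_A by h_B on the GPU and returns C.
std::vector<float> matMulOnDevice(const std::vector<float>& h_A,
                                  const std::vector<float>& h_B, int N) {
    assert(N > 0 && N % BLOCK_SIZE == 0);
    assert(h_A.size() == size_t(N) * N && h_B.size() == size_t(N) * N);
    size_t bytes = size_t(N) * N * sizeof(float);
    float *dA = nullptr, *dB = nullptr, *dC = nullptr;
    cudaMalloc(&dA, bytes);
    cudaMalloc(&dB, bytes);
    cudaMalloc(&dC, bytes);
    cudaMemcpy(dA, h_A.data(), bytes, cudaMemcpyHostToDevice);
    cudaMemcpy(dB, h_B.data(), bytes, cudaMemcpyHostToDevice);

    dim3 threads(BLOCK_SIZE, BLOCK_SIZE);
    dim3 grid(N / BLOCK_SIZE, N / BLOCK_SIZE);  // nBlock = N / BLOCK_SIZE tiles per dimension
    matMulTiled<<<grid, threads>>>(dA, dB, dC, N);

    std::vector<float> h_C(size_t(N) * N);
    cudaError_t err = cudaMemcpy(h_C.data(), dC, bytes, cudaMemcpyDeviceToHost);
    assert(err == cudaSuccess);
    (void)err;
    cudaFree(dA);
    cudaFree(dB);
    cudaFree(dC);
    return h_C;
}
```

Each pass of the outer loop consumes one tile pair, so a block makes N / BLOCK_SIZE passes, while each element of A and B is fetched from global memory only once per tile instead of once per multiply-add.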
