Getting File Key Size In Boto S3 Using Python 3 Dnmtechs Sharing

Getting the size of a file (key) in S3 with Python 3 is a straightforward process. By calling the boto3 client's `head_object` method and reading the `ContentLength` field from the response, you can retrieve the size of an object in bytes without downloading it.

The question usually comes up like this: there must be an easy way to get the file size (key size) without pulling over the whole file. I can see it in the properties in the AWS S3 browser, and I think I can get it from the "Content-Length" header of a "HEAD" request, but I'm not connecting the dots about how to do this with boto.
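As a minimal sketch of that approach, the helper below wraps `head_object` and returns `ContentLength`. The function name `get_object_size` and the optional `client` parameter are illustrative assumptions, not part of boto3; the parameter only exists so a stub client can be swapped in.

```python
def get_object_size(bucket, key, client=None):
    """Return the size in bytes of an S3 object without downloading it."""
    if client is None:
        # Imported lazily so a stub client can be passed in instead.
        import boto3
        client = boto3.client("s3")
    # head_object issues a HEAD request: metadata only, no object body.
    response = client.head_object(Bucket=bucket, Key=key)
    # ContentLength is the object size in bytes.
    return response["ContentLength"]
```

With AWS credentials configured, a call such as `get_object_size("example-bucket", "path/to/file.txt")` would return the size in bytes; both names here are placeholders.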

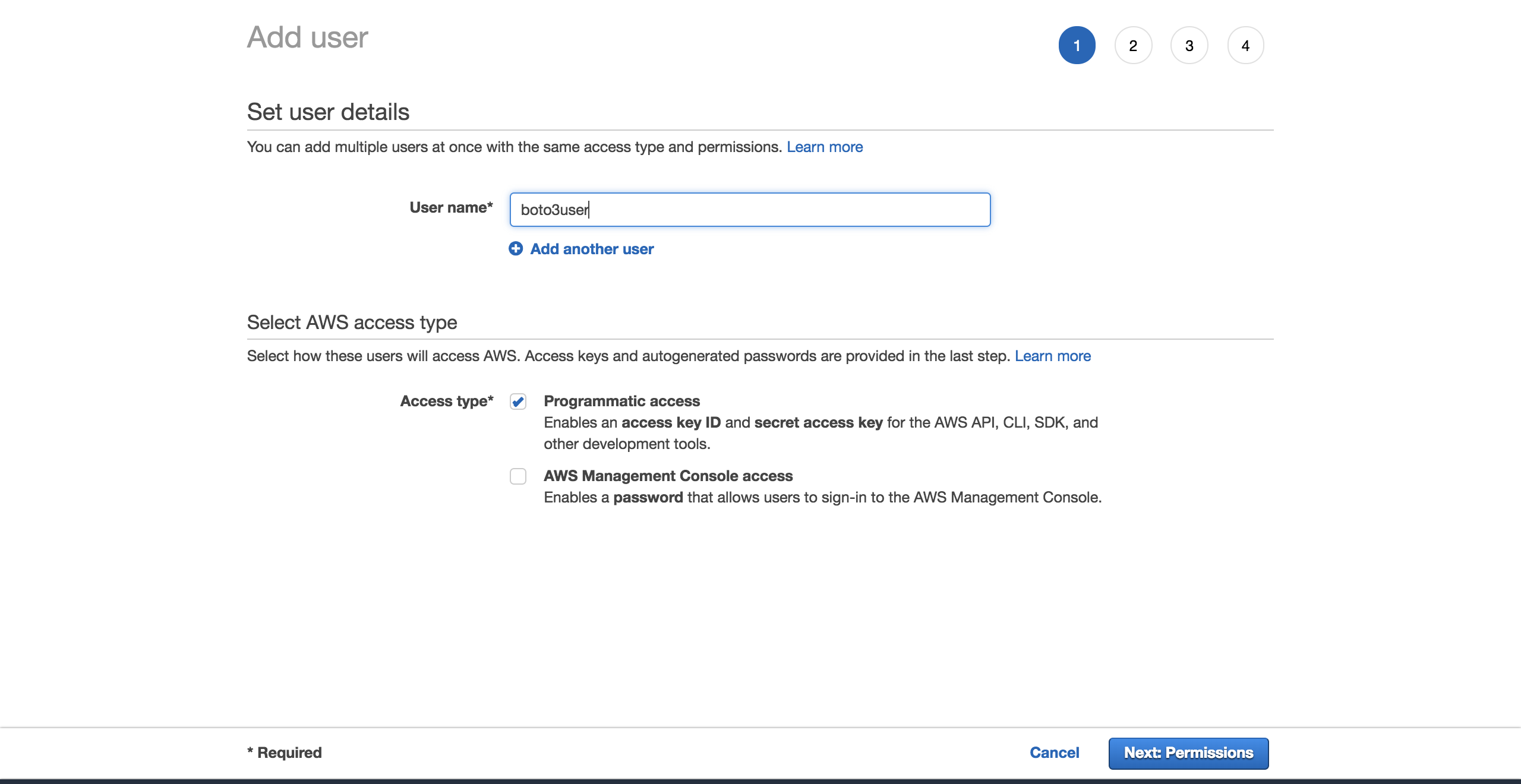

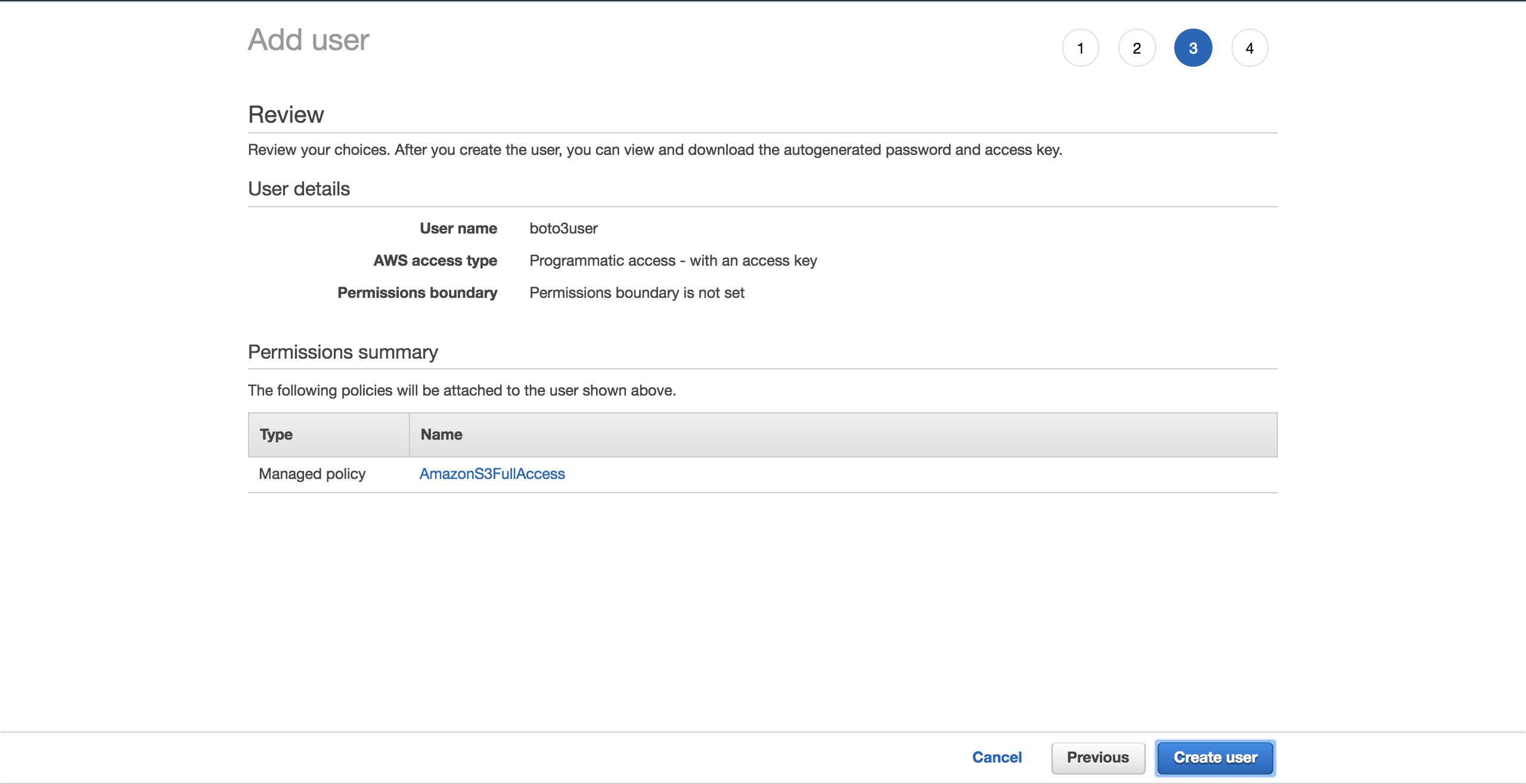

When working with Amazon Simple Storage Service (S3) from Python 3, it is often necessary to retrieve the size of a file or key stored in a bucket. The most efficient way to get the size of an object is the `head_object` method from the boto3 library. It sends a HEAD request to S3 and fetches the metadata for the specified object without downloading its contents.

Reading files from an AWS S3 bucket using Python and boto3 is also straightforward. With just a few lines of code, you can retrieve and work with data stored in S3, which makes it a useful tool for data scientists working with large datasets.
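For contrast with the metadata-only HEAD request, here is a hedged sketch of reading an object's contents with `get_object`. The helper name `read_object_text` and its `client` and `encoding` parameters are assumptions made for illustration.

```python
def read_object_text(bucket, key, client=None, encoding="utf-8"):
    """Download an S3 object and return its contents as a string."""
    if client is None:
        # Imported lazily so a stub client can be passed in instead.
        import boto3
        client = boto3.client("s3")
    response = client.get_object(Bucket=bucket, Key=key)
    # Body is a streaming object; read() pulls the whole payload into memory.
    return response["Body"].read().decode(encoding)
```

Unlike `head_object`, this transfers the full object, so for very large files it is usually worth checking the size first or reading the body in chunks.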

Python Boto3 And Aws S3 Demystified Real Python

In the original boto library (boto 2), the bucket's `lookup` method simply does a HEAD request for the key name, so it returns all of the headers (including Content-Length) for the key but does not transfer any of the key's actual content. The returned key object exposes the size as `key.size`.

To find the size of a whole S3 bucket using CloudWatch and boto3, you can use the CloudWatch metrics for S3 bucket storage. Specifically, the `BucketSizeBytes` metric reports the size of the bucket.

Listing the objects in an S3 bucket folder works along similar lines. The example described here gets all the objects inside the S3 bucket named radishlogic-bucket within the folder named s3-folder and adds their keys to a Python list (`s3_object_key_list`).
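One way to sketch the folder listing is with the `list_objects_v2` paginator, which also returns each object's size, so no per-object HEAD request is needed. The helper name `list_keys_and_sizes` and the `client` parameter are assumptions, and the hyphenated bucket and folder names in the usage note are a guess at the original spelling.

```python
def list_keys_and_sizes(bucket, prefix, client=None):
    """Return a dict mapping each key under `prefix` to its size in bytes."""
    if client is None:
        # Imported lazily so a stub client can be passed in instead.
        import boto3
        client = boto3.client("s3")
    paginator = client.get_paginator("list_objects_v2")
    sizes = {}
    # Each page holds at most 1000 objects; the paginator follows the
    # continuation tokens for us.
    for page in paginator.paginate(Bucket=bucket, Prefix=prefix):
        # "Contents" is absent when a page has no matching objects.
        for obj in page.get("Contents", []):
            sizes[obj["Key"]] = obj["Size"]
    return sizes
```

A key list like the one described could then be built with `s3_object_key_list = list(list_keys_and_sizes("radishlogic-bucket", "s3-folder/"))`.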

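The CloudWatch approach to bucket size can be sketched as follows. This assumes the bucket's storage metrics are published in the `AWS/S3` namespace with `BucketName` and `StorageType` dimensions, and that the objects of interest are in the standard storage class; the helper name `get_bucket_size_bytes`, the three-day lookback window, and the `client` parameter are illustrative choices.

```python
from datetime import datetime, timedelta, timezone


def get_bucket_size_bytes(bucket, storage_type="StandardStorage", client=None):
    """Return the latest BucketSizeBytes datapoint, or None if there is none."""
    if client is None:
        # Imported lazily so a stub client can be passed in instead.
        import boto3
        client = boto3.client("cloudwatch")
    now = datetime.now(timezone.utc)
    response = client.get_metric_statistics(
        Namespace="AWS/S3",
        MetricName="BucketSizeBytes",
        Dimensions=[
            {"Name": "BucketName", "Value": bucket},
            {"Name": "StorageType", "Value": storage_type},
        ],
        # Storage metrics are reported daily, so look back a few days.
        StartTime=now - timedelta(days=3),
        EndTime=now,
        Period=86400,  # one day, in seconds
        Statistics=["Average"],
    )
    datapoints = response.get("Datapoints", [])
    if not datapoints:
        return None
    # Datapoints are not guaranteed to be ordered; pick the most recent.
    latest = max(datapoints, key=lambda d: d["Timestamp"])
    return latest["Average"]
```

Because the metric is only reported about once a day, the value can lag behind recent uploads; for an exact figure, summing the sizes from a full object listing is the alternative.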


