Generate Images Faster in Stable Diffusion with NVIDIA TensorRT | iRender

When applied to image generation in the Stable Diffusion web UI, TensorRT substantially accelerated performance: it doubled the number of images generated per minute compared with the PyTorch optimizations used previously. NVIDIA has also shared a Stable Diffusion TensorRT demo. The best way to enable these optimizations is with the NVIDIA TensorRT SDK, a high-performance deep learning inference optimizer. TensorRT provides layer fusion, precision calibration, kernel auto-tuning, and other capabilities that significantly boost the efficiency and speed of deep learning models.
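To make "layer fusion" concrete, here is a minimal plain-Python sketch of one classic fusion: folding an inference-time batch-norm layer into the linear layer before it, so two layers become one with identical output. This is a conceptual illustration only, not TensorRT's implementation; the weights, statistics, and function names are hypothetical.

```python
"""Conceptual sketch of layer fusion: fold batch norm into the preceding
linear layer. Plain Python; not how TensorRT implements it internally."""
import math


def linear(weights, bias, x):
    # y_i = sum_j W[i][j] * x[j] + b[i]
    return [sum(w * xj for w, xj in zip(row, x)) + b for row, b in zip(weights, bias)]


def batch_norm(y, gamma, beta, mean, var, eps=1e-5):
    # Inference-time batch norm with fixed running statistics.
    return [g * (yi - m) / math.sqrt(v + eps) + bt
            for yi, g, bt, m, v in zip(y, gamma, beta, mean, var)]


def fold_batch_norm(weights, bias, gamma, beta, mean, var, eps=1e-5):
    # Per output channel i: scale_i = gamma_i / sqrt(var_i + eps),
    # W'_i = scale_i * W_i and b'_i = scale_i * (b_i - mean_i) + beta_i.
    fused_w, fused_b = [], []
    for row, b, g, bt, m, v in zip(weights, bias, gamma, beta, mean, var):
        scale = g / math.sqrt(v + eps)
        fused_w.append([scale * w for w in row])
        fused_b.append(scale * (b - m) + bt)
    return fused_w, fused_b


if __name__ == "__main__":
    W, b = [[0.5, -1.0], [2.0, 0.25]], [0.1, -0.2]
    gamma, beta = [1.5, 0.8], [0.0, 0.3]
    mean, var = [0.2, -0.1], [0.9, 1.1]
    x = [1.0, 2.0]

    two_layers = batch_norm(linear(W, b, x), gamma, beta, mean, var)
    fw, fb = fold_batch_norm(W, b, gamma, beta, mean, var)
    one_layer = linear(fw, fb, x)  # same result, one pass over the weights
    print(two_layers, one_layer)
```

The fused layer does the same arithmetic in a single pass, which is the kind of saving (fewer kernels, fewer memory round-trips) that fusion aims for on a GPU.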

With NVIDIA TensorRT acceleration and quantization, users can now generate and edit images faster and more efficiently on NVIDIA RTX GPUs. Stable Diffusion 3.5 quantized to FP8 (right) generates images in half the time, with quality similar to FP16 (left). ComfyUI offers a streamlined interface for Stable Diffusion, accelerated on NVIDIA RTX GPUs with NVIDIA TensorRT. Fast image generation combined with one-step RTX-enhanced…

In today's Game Ready Driver, NVIDIA added TensorRT acceleration for the Stable Diffusion web UI, which boosts GeForce RTX performance by up to 2x. In this tutorial video I will show you everything about this new speed-up, covering extension installation and TensorRT SD UNet generation.

Key takeaways: We've collaborated with NVIDIA to deliver TensorRT-optimized versions of Stable Diffusion 3.5 (SD3.5), making enterprise-grade image generation available on a wider range of NVIDIA RTX GPUs. The SD3.5 TensorRT-optimized models deliver up to 2.3x faster generation on SD3.5 Large and 1.7x faster on SD3.5 Medium, while reducing VRAM requirements by 40%. The optimized models…
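The precision and speedup claims above reduce to simple arithmetic. The sketch below assumes a hypothetical model with 4 × 2^30 parameters and a hypothetical baseline of 6 seconds per image; neither number comes from the article. It counts weight memory only, so it does not predict total VRAM use: activations, other model components, and framework overhead also occupy memory, which is why halving bytes per weight need not halve overall VRAM.

```python
"""Back-of-the-envelope arithmetic for precision and speedup claims.
The parameter count and baseline latency are hypothetical placeholders."""

BYTES_PER_ELEMENT = {"fp32": 4, "fp16": 2, "fp8": 1}


def weight_memory_gib(num_params: int, precision: str) -> float:
    # Memory for the weights alone; activations, other models and
    # framework overhead are deliberately ignored.
    return num_params * BYTES_PER_ELEMENT[precision] / 2**30


def images_per_minute(seconds_per_image: float, speedup: float = 1.0) -> float:
    # A speedup of k divides the per-image latency by k.
    return 60.0 / (seconds_per_image / speedup)


if __name__ == "__main__":
    params = 4 * 2**30  # hypothetical parameter count, chosen for round numbers
    for p in ("fp16", "fp8"):
        print(p, weight_memory_gib(params, p), "GiB")  # fp16 8.0 GiB, then fp8 4.0 GiB
    # Hypothetical 6 s/image baseline versus a 2x speedup.
    print(images_per_minute(6.0), images_per_minute(6.0, speedup=2.0))  # 10.0 20.0
```

A "2x" speedup therefore means doubling images per minute (halving per-image time), and moving from FP16 to FP8 halves the bytes stored per weight.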

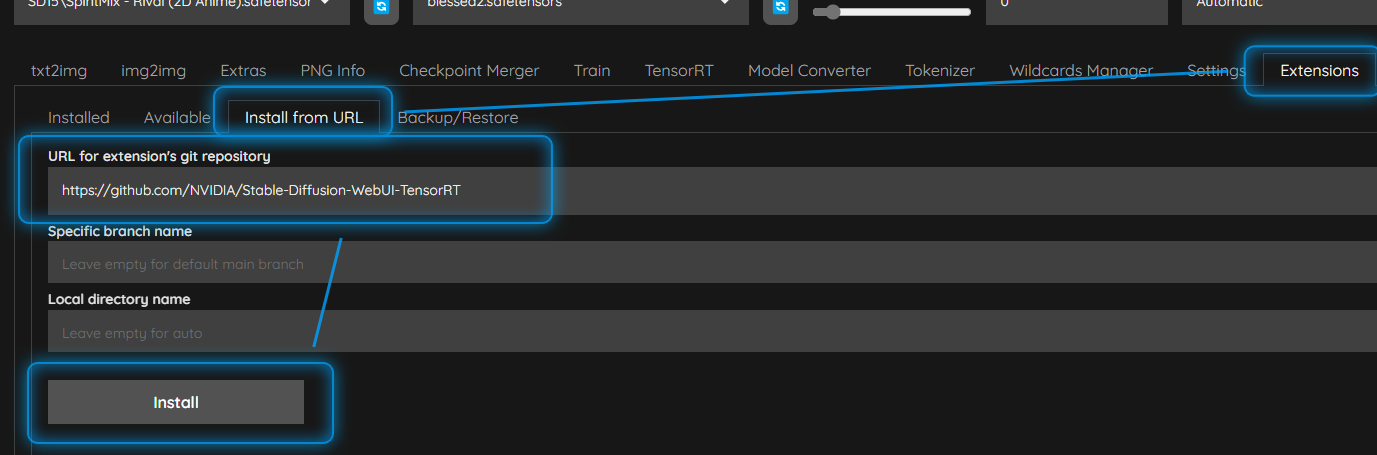

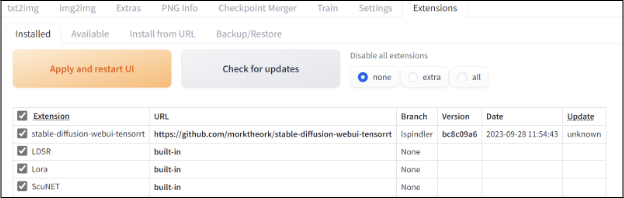

TL;DR: In this tutorial, Carter, a founding engineer at Brev, demonstrates how to use ComfyUI and NVIDIA TensorRT for rapid image generation with Stable Diffusion. He guides viewers through setting up the environment on Brev, deploying a Launchable, and optimizing the model for faster inference.

Stable Diffusion is an open-source generative AI model that creates images from simple text descriptions. It has gained traction among developers and has powered popular applications such as Wombo and Lensa.

Starting with NVIDIA TensorRT 9.2.0, we've developed a best-in-class quantization toolkit with improved 8-bit (FP8 or INT8) post-training quantization (PTQ) to significantly speed up diffusion deployment on NVIDIA hardware while preserving image quality.

The acceleration has limitations. Generating an image of the same size takes more VRAM overall. The original models still need to stay available, which makes memory management difficult: they must be loaded into and unloaded from VRAM to make the most of the gains. In addition, each engine must fit within a single protobuf message.
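The load/unload juggling described above can be pictured as keeping models inside a fixed VRAM budget and evicting the least recently used one when space runs out. This is a hypothetical sketch, not how the web UI extension actually manages memory; the class, model names, sizes, and the 12 GiB budget are all invented for illustration, and "loading" is only simulated.

```python
"""Hypothetical sketch: keep models within a fixed VRAM budget by unloading
the least recently used one. Sizes are in GiB; loading is simulated."""
from collections import OrderedDict
from typing import List


class VramBudget:
    def __init__(self, budget_gib: float):
        self.budget = budget_gib
        self.resident = OrderedDict()  # name -> size in GiB, oldest first

    def used(self) -> float:
        return sum(self.resident.values())

    def require(self, name: str, size_gib: float) -> List[str]:
        """Make `name` resident and return the names evicted to make room."""
        if size_gib > self.budget:
            raise ValueError(f"{name} ({size_gib} GiB) exceeds the budget")
        if name in self.resident:
            self.resident.move_to_end(name)  # mark as most recently used
            return []
        evicted = []
        while self.used() + size_gib > self.budget:
            old, _ = self.resident.popitem(last=False)  # least recently used
            evicted.append(old)
        self.resident[name] = size_gib
        return evicted


if __name__ == "__main__":
    vram = VramBudget(12.0)               # hypothetical 12 GiB card
    vram.require("pytorch_unet", 5.0)     # hypothetical original model
    vram.require("trt_engine", 6.0)       # hypothetical TensorRT engine
    print(vram.require("vae", 2.0))       # ['pytorch_unet']
```

LRU is just one simple eviction policy; any real tool may choose differently, for example by pinning the engine that is currently in use.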

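The 8-bit post-training quantization mentioned above works on an already trained model: a small calibration set fixes a scale, then weights or activations are rounded to 8-bit integers. The sketch below shows only the simplest variant, symmetric per-tensor INT8 with max calibration, in plain Python. It is not NVIDIA's toolkit, whose calibration algorithms the article does not describe, and the calibration data and function names are hypothetical.

```python
"""Minimal sketch of symmetric per-tensor INT8 post-training quantization
with max calibration. Illustrative only; not NVIDIA's quantization toolkit."""
from typing import List, Sequence


def calibrate_scale(calibration_batches: Sequence[Sequence[float]]) -> float:
    # Max calibration: the largest absolute value seen in the calibration
    # data maps to the edge of the int8 range [-127, 127].
    amax = max(abs(v) for batch in calibration_batches for v in batch)
    return amax / 127.0 if amax > 0 else 1.0


def quantize(values: Sequence[float], scale: float) -> List[int]:
    # Round to nearest (Python's round() sends exact ties to even), then
    # clamp values outside the calibrated range.
    return [max(-127, min(127, round(v / scale))) for v in values]


def dequantize(q: Sequence[int], scale: float) -> List[float]:
    # Map int8 codes back to approximate real values.
    return [qi * scale for qi in q]


if __name__ == "__main__":
    calib = [[0.1, -0.4, 0.9], [1.27, -0.3, 0.05]]  # hypothetical calibration data
    scale = calibrate_scale(calib)                   # about 1.27 / 127 = 0.01
    x = [0.5, -1.0, 2.0]                             # 2.0 lies outside the range
    q = quantize(x, scale)
    print(q, dequantize(q, scale))
```

Values inside the calibrated range come back with a small rounding error, while outliers such as 2.0 are clipped. That trade-off is why the choice of calibration method matters for preserving image quality.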
