Flatten Arrays and Structs with explode(), inline(), and struct(): PySpark Tutorial

This tutorial covers some of PySpark's most useful transformations and explores how to flatten complex nested data structures in Spark DataFrames. You'll learn how to use explode(), inline(), and struct(), with examples, to flatten arrays and work with nested structs.

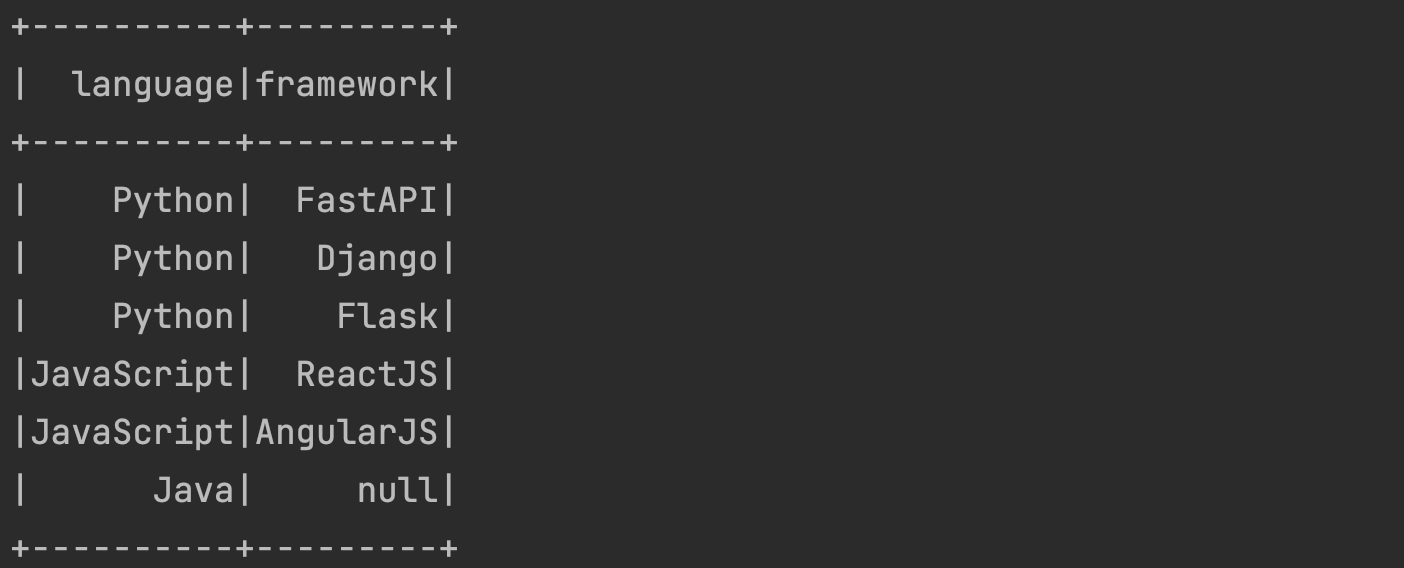

Learn how to work with complex nested data in Apache Spark using explode functions to flatten arrays and structs, with beginner-friendly examples.

The explode function in Spark flattens an array of elements into multiple rows, copying all the other columns into each new row. For each input row, it creates as many output rows as there are elements in the provided array.

To turn an array of structs into flat columns, use a combination of explode and the * selector: explode the array into a struct column such as `device_exploded`, then call `.select('id', 'device_exploded.*')`. The resulting schema has four top-level columns, each a nullable string: `id`, `device_vendor`, `device_name`, and `device_manufacturer`.

The same explode mechanism can be wrapped in a reusable function, for example one written in Spark Scala, which simplifies the task of flattening a structure.
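
The row-level behaviour of explode and the * selector can be sketched in plain Python. This is a minimal model of the semantics and not Spark code: rows are dictionaries, and the helper names `explode_rows` and `expand_struct`, the `devices` column, and the sample values are all hypothetical. The last function shows how the same two steps might look in PySpark, assuming pyspark is installed; it is defined but not called here.

```python
from typing import Any, Dict, List

Row = Dict[str, Any]

# Hypothetical sample data: one row with two devices, one row with none.
SAMPLE_ROWS: List[Row] = [
    {"id": "r1", "devices": [
        {"device_vendor": "v1", "device_name": "n1", "device_manufacturer": "m1"},
        {"device_vendor": "v2", "device_name": "n2", "device_manufacturer": "m2"},
    ]},
    {"id": "r2", "devices": []},
]


def explode_rows(rows: List[Row], array_col: str, out_col: str) -> List[Row]:
    """Model of explode(): one output row per array element.

    All other columns are copied into each new row. As with Spark's
    explode(), a row whose array is None or empty produces no output.
    """
    out: List[Row] = []
    for row in rows:
        others = {k: v for k, v in row.items() if k != array_col}
        for element in row.get(array_col) or []:
            out.append({**others, out_col: element})
    return out


def expand_struct(rows: List[Row], struct_col: str) -> List[Row]:
    """Model of select(..., 'struct_col.*'): promote struct fields to columns."""
    out: List[Row] = []
    for row in rows:
        others = {k: v for k, v in row.items() if k != struct_col}
        out.append({**others, **row[struct_col]})
    return out


def explode_devices_with_spark(spark):
    """The same two steps in PySpark (requires pyspark; not called here)."""
    from pyspark.sql.functions import explode

    df = spark.createDataFrame(
        [("r1", [("v1", "n1", "m1"), ("v2", "n2", "m2")])],
        "id string, devices array<struct<"
        "device_vendor:string,device_name:string,device_manufacturer:string>>",
    )
    # inline() performs both steps at once: df.selectExpr("id", "inline(devices)")
    return (
        df.select("id", explode("devices").alias("device_exploded"))
          .select("id", "device_exploded.*")
    )
```

Calling `expand_struct(explode_rows(SAMPLE_ROWS, "devices", "device_exploded"), "device_exploded")` yields two flat rows for `r1` and none for `r2`, which mirrors how explode drops rows with empty arrays.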

PySpark provides functions that transform complex nested data structures (arrays and maps) into more accessible formats. The explode() family of functions converts array elements or map entries into separate rows, while the flatten() function converts nested arrays into single-level arrays.

Because explode can be expensive, some approaches flatten complex nested data (especially an array of structs or an array of arrays) without it.

One explode-based helper, flatten_array(frame), takes a DataFrame and returns a DataFrame together with a boolean. It initializes have_array = False and an empty aliased_columns list, then iterates over frame.dtypes. For the first column whose type string starts with 'array<' (tracked with a counter i), it sets have_array = True, builds explode(frame[column]).alias(column), and increments i; all other columns are handled in the else branch.

Flattening rows in Apache Spark combines several fundamental steps: reading the nested data, exploding the array elements into rows, and then extracting the required fields. Applied together, these steps transform complex data structures into simpler, flat DataFrames.
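
The difference between flatten() and the read, explode, extract steps can be illustrated with a small plain-Python sketch. It models the semantics only and is not Spark code; the function names, the `orders` field, and the sample JSON are assumptions made for illustration.

```python
import json
from typing import Any, Dict, List

# Hypothetical nested input: each record carries an array of order structs.
SAMPLE_JSON = (
    '[{"id": 1, "orders": [{"sku": "A", "qty": 2}, {"sku": "B", "qty": 1}]},'
    ' {"id": 2, "orders": [{"sku": "C", "qty": 5}]}]'
)


def flatten_one_level(nested: List[List[Any]]) -> List[Any]:
    """Model of flatten(): merge an array of arrays into a single array.

    Only one level of nesting is removed, and the number of rows does not
    change, because the result is still one array per input value.
    """
    return [item for inner in nested for item in inner]


def flatten_records(json_text: str) -> List[Dict[str, Any]]:
    """Model of the three steps: read, explode, extract."""
    # Step 1: read the nested data.
    records = json.loads(json_text)
    flat: List[Dict[str, Any]] = []
    for record in records:
        # Step 2: explode the array, producing one output row per element.
        for order in record["orders"]:
            # Step 3: extract only the required fields into a flat row.
            flat.append({"id": record["id"], "sku": order["sku"], "qty": order["qty"]})
    return flat
```

Here `flatten_one_level` keeps everything inside a single value, while `flatten_records` increases the row count, which is the practical distinction between flatten() and the explode() family.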
