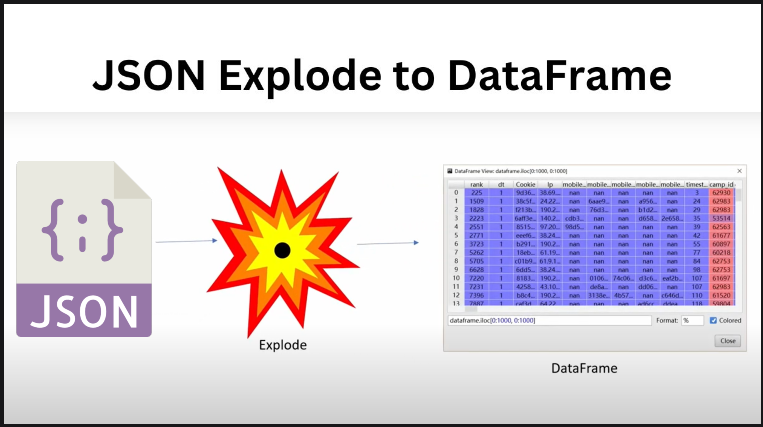

Exploding Nested JSON in PySpark: A Comprehensive Guide

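Reading Nested JSON Files in PySpark: A Guide (Saturn Cloud Blog)

Reading nested JSON files in PySpark can be a bit tricky, but with the right approach it becomes straightforward. By understanding the structure of your data and using PySpark's built-in functions, you can extract and analyze data from nested JSON files. For a file whose top-level `ads` field is a list, read it with the multiline option, explode `ads` into one row per element, promote the element's fields to top-level columns, and drop the original column: `df = spark.read.option("multiline", "true").json(ads_file_path).withColumn("ads", explode("ads")).select("ads.*", "*").drop("ads")` (with `explode` imported from `pyspark.sql.functions`). If the exploded elements contain further lists, reuse the same pattern on each of them.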

Python: Nested JSON Parsing in PySpark (Stack Overflow)

This guide explores how to use PySpark to explode a nested JSON file that contains two lists at different levels.

Generalizing to Deeper Nested Structures

For deeply nested JSON structures, you can apply this process recursively, continuing to use `select`, `alias`, and `explode` to flatten each additional layer.

If you are struggling to read complex nested JSON in Databricks with PySpark, this article should help you out and let you close those outstanding tasks. One accompanying pipeline, documented as "Exploding list columns into rows" (`:param df:` input PySpark DataFrame; `:return:` cleaned-up DataFrame), chains three helpers, `df = map_json_column(df, raw_response_col)`, `df = extract_json_keys_as_columns(df, raw_response_col)`, and `df = explode_all_list_columns(df)`, and then returns `df`. It continues with a section of single-line-item functions that begins with `def explode_array_of_maps(df: pyspark.sql.DataFrame, array_col: str, …`.

Exploding and Flattening JSON Data into a Structured Table Using PySpark

Learn how to handle and flatten nested JSON structures in Apache Spark using PySpark, work through real-world JSON examples, and extract useful data efficiently.

First, read the nested JSON data with PySpark: `df_json = spark.read.format("json").load("/mnt/path/file.json")`. Then import the functions required to explode the array values into rows.

To work with JSON data in PySpark, you can use the built-in functions in the `pyspark.sql.functions` module. These functions, such as `from_json` and `get_json_object`, parse JSON strings and extract specific fields from nested structures. Flattening multi-level nested JSON columns in Spark involves combining JSON parsing functions with others such as `regexp_extract`, `explode`, and possibly `struct`, depending on the specific JSON structure.
