ETL and Data Pipelines for Beginners: Extract, Transform, Load Explained

Learn how to extract data from various sources, transform it into a usable format, and load it into your target system for analysis. ETL stands for extract, transform, and load. It is the backbone of data engineering: data gathered from different sources is normalized and consolidated for analysis and reporting.

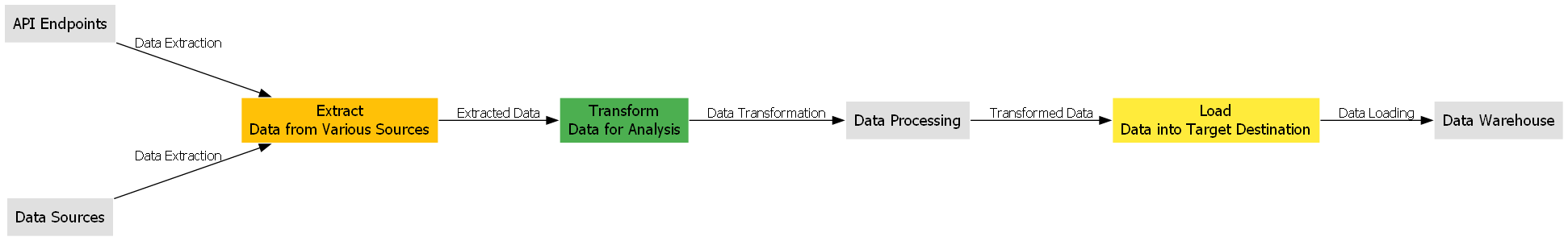

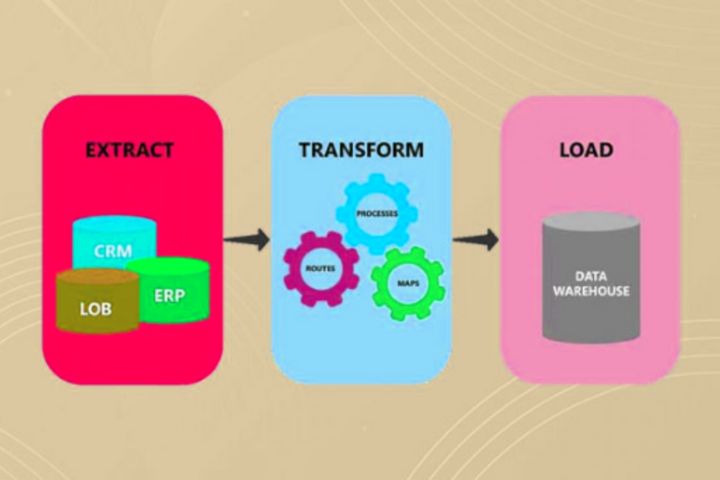

This guide breaks down the basics of ETL (extract, transform, load) pipelines, why they matter, and how they power modern data-driven businesses.

ETL is a fundamental process in data management and business intelligence. It involves extracting data from various sources, transforming it into a standardized, usable format, and loading it into a target system, such as a data warehouse or data lake. Put another way, an ETL pipeline collects data from various sources, transforms it according to business rules, and loads it into a destination data store.

An ETL pipeline comprises three foundational stages: extract, transform, and load. Each plays a distinct role in preparing data for analysis or operational use. By prioritizing ETL pipelines, businesses turn raw data into a strategic asset, which supports agility and competitive advantage.
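The three stages described above can be sketched in a few lines of Python. This is a minimal illustration, not a production pipeline: the sample CSV data, the `orders` table, and the business rules (drop rows without a customer, store amounts as integer cents, upper-case the currency code) are all assumptions made up for the example. It uses only the standard library, with an in-memory SQLite database standing in for the destination data store.

```python
import csv
import io
import sqlite3

# Hypothetical source data; in practice this could be a file, an API, or a database.
RAW_CSV = """order_id,customer,amount,currency
1, Alice ,19.99,usd
2,Bob,5.00,USD
3,,12.50,usd
"""


def extract(text):
    """Extract: read raw rows from a CSV source as dictionaries."""
    return list(csv.DictReader(io.StringIO(text)))


def transform(rows):
    """Transform: apply illustrative business rules to each row."""
    cleaned = []
    for row in rows:
        customer = row["customer"].strip()
        if not customer:
            # Assumed rule: rows without a customer are dropped.
            continue
        cleaned.append((
            int(row["order_id"]),
            customer,
            round(float(row["amount"]) * 100),  # store the amount as integer cents
            row["currency"].strip().upper(),    # standardize the currency code
        ))
    return cleaned


def load(rows, conn):
    """Load: write the transformed rows into the destination table."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS orders ("
        "order_id INTEGER PRIMARY KEY, customer TEXT, "
        "amount_cents INTEGER, currency TEXT)"
    )
    conn.executemany("INSERT OR REPLACE INTO orders VALUES (?, ?, ?, ?)", rows)
    conn.commit()


# In-memory SQLite stands in for a data warehouse in this sketch.
conn = sqlite3.connect(":memory:")
load(transform(extract(RAW_CSV)), conn)

result = conn.execute(
    "SELECT order_id, customer, amount_cents, currency FROM orders ORDER BY order_id"
).fetchall()
print(result)
```

Keeping each stage in its own function means a stage can be tested or replaced independently, for example swapping the CSV reader for an API client without touching the transform rules.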

ETL is a data integration process that combines, cleans, and organizes data from multiple sources into a single, consistent data set for storage in a data warehouse, data lake, or other target system. ETL data pipelines provide the foundation for data analytics and machine learning workstreams.

The process covers the steps between collecting data from various sources and the point where it can finally be stored in a data warehouse solution. When large amounts of data are to be visualized, the individual stages come into play.

ETL is a well-established pattern in data pipelines: collect data from various origins, reshape it, and then store it in a designated target system, often a data warehouse. Think of it like preparing ingredients (extracting and transforming) before you start cooking (loading them into the final dish).
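To make "combining, cleaning, and organizing data from multiple sources into a single, consistent data set" more concrete, here is a small Python sketch. The two sources (`crm_records` and `billing_records`), their field names, and the choice of email as the matching key are hypothetical and chosen only for illustration; real pipelines usually need more careful matching rules.

```python
# Two hypothetical sources that describe customers with different field names.
crm_records = [
    {"Email": "Ana@Example.com ", "FullName": "Ana Ruiz"},
    {"Email": "li@example.com", "FullName": "Li Wei"},
]
billing_records = [
    {"email_address": "ana@example.com", "name": "Ana Ruiz", "country": "ES"},
    {"email_address": "sam@example.com", "name": "Sam Okoro", "country": "NG"},
]


def normalize_crm(rec):
    """Map a CRM record onto the shared schema (email, name, country)."""
    return {
        "email": rec["Email"].strip().lower(),
        "name": rec["FullName"].strip(),
        "country": None,  # this source does not carry a country
    }


def normalize_billing(rec):
    """Map a billing record onto the shared schema (email, name, country)."""
    return {
        "email": rec["email_address"].strip().lower(),
        "name": rec["name"].strip(),
        "country": rec["country"],
    }


def consolidate(*sources):
    """Merge normalized records by email, filling gaps from later sources."""
    merged = {}
    for records in sources:
        for rec in records:
            current = merged.setdefault(rec["email"], dict(rec))
            for field, value in rec.items():
                # Only fill fields that are still missing; never overwrite.
                if current.get(field) is None and value is not None:
                    current[field] = value
    return sorted(merged.values(), key=lambda r: r["email"])


customers = consolidate(
    [normalize_crm(r) for r in crm_records],
    [normalize_billing(r) for r in billing_records],
)
for customer in customers:
    print(customer)
```

Normalizing each source onto one shared schema before merging keeps the source-specific quirks (casing, stray whitespace, differing field names) out of the consolidation step.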

