DuckDB Hash Join Doesn't Scale With Number of Threads (Issue 2653)

Hi, I wrote a small benchmark to see how a join scales with the number of threads. Changing the thread count does not seem to have any significant effect on speed. To reproduce, here is the source code of the benchmark: import duckdb import n.

Does DuckDB support multi-threaded joins? I've configured DuckDB to run on 48 threads, but when executing a simple join query, only one thread is actively working. Here is an example using the CLI.

DuckDB Webshop

DuckDB's hash join operator has supported larger-than-memory joins since version 0.6.1 in December 2022. However, the scale of this benchmark, coupled with the limited RAM of the benchmarking hardware, meant that it could still not complete successfully.

Furthermore, the query gets slower as the number of threads increases when a nested loop join is used, but scales with the number of threads when a hash join is used. Steps to reproduce the behavior (bonus points if those are only SQL queries): SELECT count(p.personid) AS vcount FROM person p. SELECT min(id) AS id FROM t.

Too many threads: note that in certain cases DuckDB may launch too many threads (e.g., due to hyperthreading), which can lead to slowdowns. In these cases, it's worth manually limiting the number of threads using SET threads = X. Larger-than-memory workloads (out-of-core processing).

Overall at least 12x faster than a tuned MySQL instance at SF10. Q1, for example, takes 176.5 seconds with MySQL and 1.26 with DuckDB. Change integers/decimals on some columns to DOUBLE to address temporary performance limitations with conservative handling of large aggregations.
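To make the nested loop join versus hash join distinction mentioned above concrete, here is a plain-Python sketch of the two textbook algorithms. It is not DuckDB's operator code and says nothing about how DuckDB parallelizes either join; the function names and sample rows are hypothetical. It only shows why the amount of work differs: the nested loop compares every pair of rows, while the hash join builds a table once and probes it once per row.

```python
from collections import defaultdict


def nested_loop_join(left, right):
    """Compare every left row with every right row: O(len(left) * len(right))."""
    return [(lrow, rrow) for lrow in left for rrow in right if lrow[0] == rrow[0]]


def hash_join(left, right):
    """Build a hash table on the right input, then probe it once per left row."""
    table = defaultdict(list)
    for rrow in right:  # build phase
        table[rrow[0]].append(rrow)
    # Probe phase: look up each left key instead of scanning the right input.
    return [(lrow, rrow) for lrow in left for rrow in table.get(lrow[0], [])]


# Hypothetical sample data: (id, payload) tuples joined on the first field.
person = [(1, "ann"), (2, "bob"), (3, "cy")]
orders = [(1, "book"), (1, "pen"), (3, "lamp")]

nl_result = nested_loop_join(person, orders)
hj_result = hash_join(person, orders)
print(nl_result == hj_result, len(hj_result))
```

Both functions return the same matches; only the cost of finding them differs.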

To force a particular join order, you can break up the query into multiple queries, each creating a temporary table: CREATE OR REPLACE TEMPORARY TABLE t1 AS …; then join on the result of the first query, t1: CREATE OR REPLACE TEMPORARY TABLE t2 AS SELECT * FROM t1 …; finally, compute the final result using t2: SELECT * FROM t2 …

Could you perhaps check the join order used by Postgres, disable the DuckDB optimizer (PRAGMA disable_optimizer) and manually alter the query so DuckDB uses the same join order as Postgres?

This page demonstrates how to simultaneously insert into and read from a DuckDB database across multiple Python threads. This could be useful in scenarios where new data is flowing in and an analysis should be periodically re-run.

DuckDB doesn't seem to use multi-threading. If I manually run that same query separately on the files pertaining to each customer (by splitting the third-party output manually) and take the union of the results afterwards, all 4 of my CPU cores are busy and I get the results 4x faster. for i in range (m):.

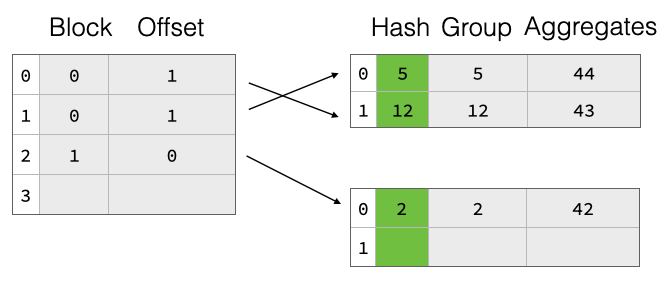

Parallel Grouped Aggregation in DuckDB

Enable Concurrent Connection (Issue 1343, duckdb/duckdb, GitHub)

Left Join Unnest Requires an ON Clause (Issue 7391, duckdb/duckdb)
