Data Tagging Tutorial: Supercomputing and Parallel Programming in Python and MPI (Part 7)

Gather Tutorial: Supercomputing and Parallel Programming in Python and MPI

Supercomputer playlist: watch?v=13x90stvknq&list=plqvvvaa0qudf9iw fe6no8scw avncfri&feature=share

Welcome to another mpi4py tutorial video. In this series, you will learn not only how to build the supercomputer, but also how to use it through parallel programming with MPI (Message Passing Interface) and the Python programming language.
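Since this part of the series is about gathering, here is a minimal sketch of mpi4py's `comm.gather`, assuming mpi4py and an MPI implementation are installed. Every process contributes one Python object and only the root process receives the full list, ordered by rank. The helper names `local_work` and `combine` and the file name `gather_demo.py` are hypothetical, chosen for illustration.

```python
# gather_demo.py: a minimal comm.gather sketch (assumes mpi4py is installed).
# Run with, e.g.:  mpirun -n 4 python gather_demo.py


def local_work(rank):
    # Hypothetical per-process computation: each rank squares its own rank.
    return rank ** 2


def combine(gathered):
    # Runs on the root only, where gather returns a list ordered by rank.
    return sum(gathered)


def main():
    try:
        from mpi4py import MPI  # imported here so the helpers work without MPI
    except ImportError:
        print("mpi4py is not installed; install it and an MPI implementation first")
        return

    comm = MPI.COMM_WORLD
    rank = comm.Get_rank()

    # Every rank sends one object; root=0 receives the list, others get None.
    gathered = comm.gather(local_work(rank), root=0)

    if rank == 0:
        print("gathered:", gathered, "total:", combine(gathered))


if __name__ == "__main__":
    main()
```

With four processes, rank 0 should print the list `[0, 1, 4, 9]` and a total of 14. Depending on your MPI implementation, the launcher may be called `mpiexec` instead of `mpirun`.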

Pythonic Supercomputing: Scaling Taichi Programs With mpi4py

MPI is a very powerful tool for parallel programming across a network of multi-core systems. MPI can be used from many programming languages, such as C, C++, Python, and Octave; this tutorial uses Python via the mpi4py module.

Setup

Before we get into writing any programs, let's focus on setting up our environment. This article demonstrates how to use MPI with Python to write code that can run in parallel on your laptop or on a supercomputer. You will need to install an MPI implementation for your …

Parallelization techniques: message passing is the most popular method. It explicitly passes data from one computer to another as they compute in parallel, and it assumes that each computer has its own memory, not shared with the others, so all data exchange has to happen through explicit procedures.

The tutorial demonstrated passing objects (dictionaries) between nodes with MPI in Python, showed how to use tags to send and receive multiple messages per node, and highlighted the importance of tags for controlling message order and specificity.
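To make the tagging idea concrete, here is a minimal sketch assuming mpi4py is installed and the program is launched with at least two processes. Rank 0 sends two dictionaries to rank 1 under different tags, and rank 1 receives them in the opposite order: because a receive only matches a message with the requested source and tag, each `recv` gets the dictionary it asked for. The tag values, the `build_messages` helper, and the dictionary contents are hypothetical.

```python
# tag_demo.py: sending several dictionaries, distinguished by tag (assumes mpi4py).
# Run with, e.g.:  mpirun -n 2 python tag_demo.py

# Hypothetical tag values for this example; any distinct non-negative ints work.
TAG_CONFIG = 11
TAG_RESULTS = 22


def build_messages(rank):
    # Two separate dictionaries that rank 0 will send to rank 1, keyed by tag.
    config = {"sender": rank, "kind": "config", "steps": 10}
    results = {"sender": rank, "kind": "results", "values": [1.5, 2.5]}
    return {TAG_CONFIG: config, TAG_RESULTS: results}


def main():
    try:
        from mpi4py import MPI
    except ImportError:
        print("mpi4py is not installed")
        return

    comm = MPI.COMM_WORLD
    rank = comm.Get_rank()
    if comm.Get_size() < 2:
        print("run with at least 2 processes, e.g. mpirun -n 2")
        return

    if rank == 0:
        messages = build_messages(rank)
        # Non-blocking sends, so rank 0 cannot deadlock if rank 1
        # asks for the messages in a different order than they were sent.
        requests = [comm.isend(messages[tag], dest=1, tag=tag)
                    for tag in (TAG_CONFIG, TAG_RESULTS)]
        for request in requests:
            request.wait()
    elif rank == 1:
        # Receive in the opposite order; the tag selects which message matches.
        results = comm.recv(source=0, tag=TAG_RESULTS)
        config = comm.recv(source=0, tag=TAG_CONFIG)
        print("config:", config)
        print("results:", results)


if __name__ == "__main__":
    main()
```

Using `isend` on the sender is a deliberate choice: with two blocking `send` calls, this reversed receive order only works if the MPI library happens to buffer the first message, which the standard does not guarantee.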

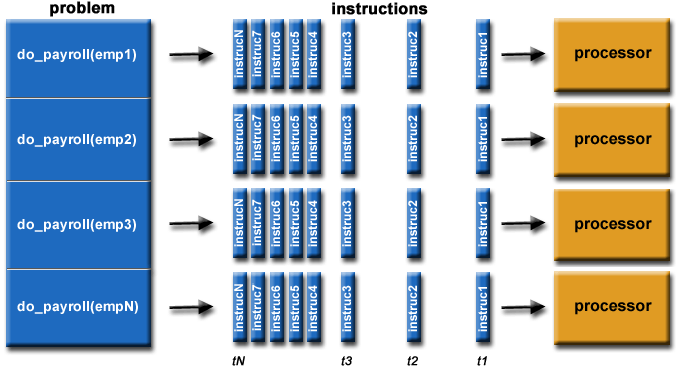

Python MPI Introduction

Large-scale computing projects done at CMU as a data science master's student. For this course's assignments, we mainly dealt with large datasets and worked on the Bridges-2 supercomputer at PSC. Languages and tools used: VS Code, Python (pandas, NumPy, scikit-learn), SQL, PySpark, PyTorch, TensorFlow.

There is a lot of confusion about the GIL (global interpreter lock), but essentially, in standard CPython it prevents multiple threads from executing Python bytecode at the same time, so threads cannot parallelize CPU-bound Python code. Instead, you need to use multiple Python interpreters executing in separate processes.

What is a supercomputer?

During this course you will learn to design parallel algorithms and write parallel programs using the MPI library. MPI stands for Message Passing Interface, and is a low-level, minimal, and extremely flexible set of commands for communicating between copies of a program.

Using MPI: running with mpirun.
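The following sketch ties the GIL point to MPI, assuming mpi4py is installed. `mpirun -n 4` starts four copies of the same program, each a separate process with its own interpreter (and its own GIL); each copy uses its rank to pick a share of a CPU-bound loop, and `comm.reduce`, which the text above does not mention, combines the partial results on rank 0. The `partial_sum` helper, the problem size, and the file name are hypothetical.

```python
# sum_demo.py: splitting a CPU-bound loop across MPI processes (assumes mpi4py).
# Run with, e.g.:  mpirun -n 4 python sum_demo.py


def partial_sum(rank, size, n):
    # Each copy handles every size-th integer starting at its rank,
    # so together the ranks cover range(n) exactly once.
    return sum(i * i for i in range(rank, n, size))


def main(n=1_000_000):
    try:
        from mpi4py import MPI
    except ImportError:
        print("mpi4py is not installed")
        return

    comm = MPI.COMM_WORLD
    rank, size = comm.Get_rank(), comm.Get_size()

    # Every process computes its share independently, in its own interpreter.
    local = partial_sum(rank, size, n)
    # Sum the per-process results on rank 0; other ranks receive None.
    total = comm.reduce(local, op=MPI.SUM, root=0)

    if rank == 0:
        print(f"{size} processes: sum of squares below {n} = {total}")


if __name__ == "__main__":
    main()
```

The total does not depend on the number of processes, which is an easy sanity check: running with `-n 1` and `-n 4` should print the same sum.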

