Custom Row (List of Custom Types) to PySpark DataFrame - GeeksforGeeks

In this article, we are going to learn how to convert a custom row (a list of custom types) to a PySpark DataFrame in Python. We will explore how to create a PySpark DataFrame from a list of custom objects, where each object represents one row in the DataFrame.

To achieve this, I created my custom `StructType`s for `myobject`, `myobject2`, and my result type:

```scala
import org.apache.spark.sql.types._

val myobjschema = StructType(List(
  StructField("name", StringType),
  StructField("age", IntegerType)
))
val myobjschema2 = StructType(List(
  StructField("xyz", StringType),
  StructField("abc", DoubleType)
))
val myrectype = StructType(
  List(... // the rest of this definition is cut off in the source
```

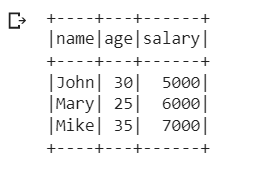

Convert PySpark Row List to Pandas DataFrame - GeeksforGeeks

The approach we are going to use is to create a list of structured Row types, using PySpark for the task. The steps are as follows:

1. Define the custom row class: `PersonRow = Row("name", "age")`.
2. Create an empty list to populate later: `community = []`.
3. Create Row objects with the specific data in them.

A related helper converts a column of a DataFrame to a Python list. Pass `unique=True` to get only the distinct values. Its code de-duplicates with `items = list(set(items))`, builds a pandas DataFrame with `df = pd.DataFrame(items, columns=[column])`, and obtains a session through a `get_spark_session()` helper whose definition is not shown.

A Row in PySpark is an immutable, dynamically typed object containing a set of key-value pairs, where the keys correspond to the names of the columns in the DataFrame. Rows can be created in several ways, including directly instantiating a Row object with a range of values, or converting an RDD of tuples to a DataFrame.

Here we create a DataFrame from a list of rows, where each row is represented as a Row object. This method is useful for small datasets that fit into memory.

Get Specific Row From PySpark DataFrame - GeeksforGeeks

In this blog post, we'll dive into the most essential DataFrame operations that data engineers must know, showcasing code examples and their outputs to better understand their functionality.

This tutorial explains how to list all columns and data types or print the schema of a DataFrame. It also explains how to create a new schema for reading files. This answer demonstrates how to create a PySpark DataFrame with `createDataFrame`, `create_df`, and `toDF`.

In this article, we are going to apply a custom schema to a data frame using PySpark in Python. A distributed collection of rows under named columns is known as a PySpark DataFrame.
