CUDA Out of Memory: Understanding the torch.cuda.OutOfMemoryError

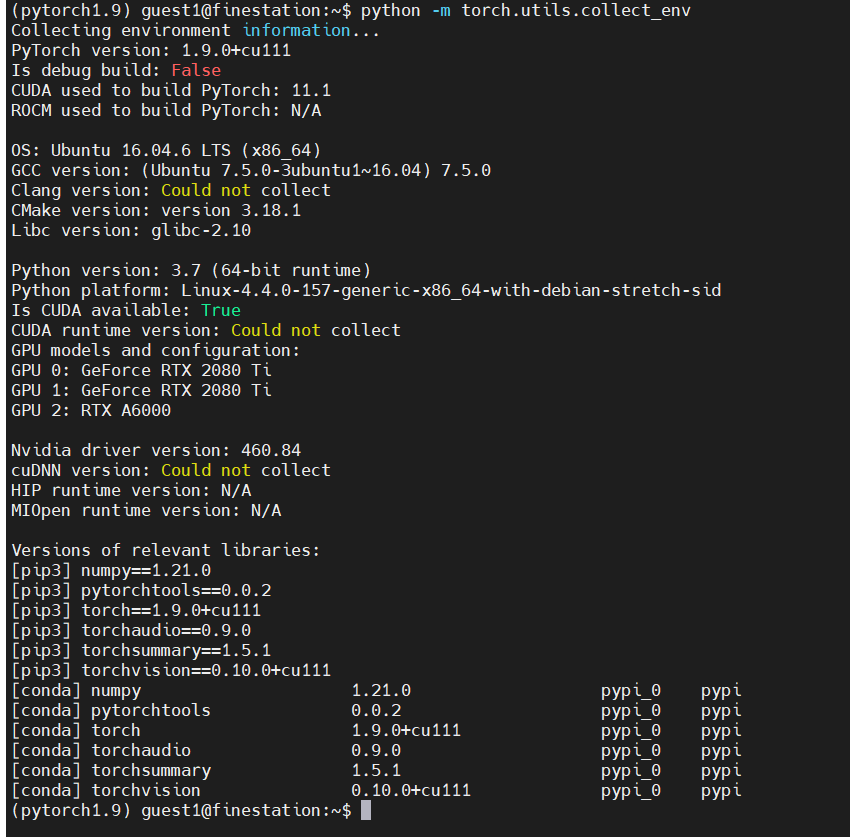

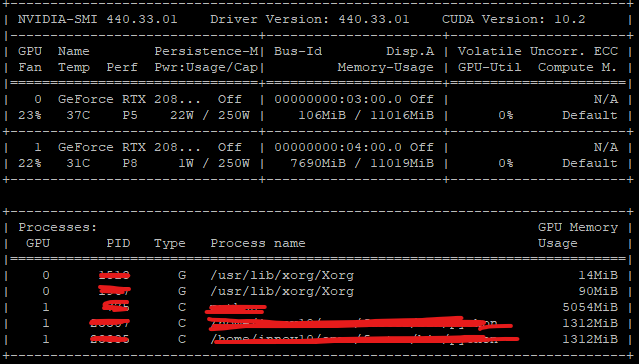

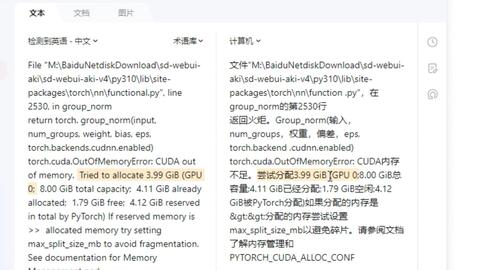

You can manually release unused cached GPU memory with `torch.cuda.empty_cache()`. This call does not free memory that live tensors still occupy; it only releases the blocks PyTorch's caching allocator is holding in reserve, so that other GPU applications can use them. Restarting the kernel, reducing the batch size, and then searching for the largest batch size that fits is usually the best option, although it is not always feasible. To get deeper insight into how GPU memory is allocated, use `torch.cuda.memory_summary(device=None, abbreviated=False)`; both arguments are optional.
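As a minimal sketch of the two calls mentioned above, the helper below reports allocated and reserved memory, releases the cache, and appends the allocator summary. The function name `gpu_memory_report` is hypothetical, and the sketch assumes a PyTorch build where `torch.cuda.memory_allocated` and `torch.cuda.memory_reserved` are available; on a machine without CUDA it simply returns a short notice.

```python
import torch


def gpu_memory_report(device=None, abbreviated=True):
    """Return a text report of PyTorch's GPU memory use (hypothetical helper)."""
    if not torch.cuda.is_available():
        # Nothing to inspect on a CPU-only machine.
        return "CUDA is not available; no GPU memory to report."

    mib = 1024 ** 2
    # Memory occupied by live tensors.
    allocated = torch.cuda.memory_allocated(device) / mib
    # Memory held by the caching allocator (live tensors plus cached blocks).
    reserved_before = torch.cuda.memory_reserved(device) / mib

    # Release cached blocks that no live tensor is using.
    torch.cuda.empty_cache()
    reserved_after = torch.cuda.memory_reserved(device) / mib

    header = (
        f"allocated: {allocated:.2f} MiB\n"
        f"reserved before empty_cache(): {reserved_before:.2f} MiB\n"
        f"reserved after empty_cache():  {reserved_after:.2f} MiB\n"
    )
    return header + torch.cuda.memory_summary(device=device, abbreviated=abbreviated)


if __name__ == "__main__":
    print(gpu_memory_report())
```

If the "allocated" figure stays high after the call, the memory is held by tensors that are still referenced, and clearing the cache will not help; dropping those references or reducing the batch size is the likelier fix.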

In this guide, we'll explore the PyTorch CUDA out of memory error in depth: why it happens, how to diagnose it, and, most importantly, how to prevent and resolve it using practical tips and best practices. If you have spent time with PyTorch on GPUs, you have probably seen this error message: `torch.cuda.OutOfMemoryError: CUDA out of memory. Tried to allocate 512.00 MiB. GPU 0 has a total capacity of 79.32 GiB of which 401.56 MiB is free.` Training deep learning models often requires significant GPU memory, so running out of CUDA memory is a common issue, and the error can appear even when the GPU seems to have plenty of free memory. It is frustrating to deal with, but once you understand its common causes and apply the fixes discussed here, you can overcome it and train your models successfully.
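The numbers in that message already say why the allocation failed: the request (512.00 MiB) is larger than what is free (401.56 MiB). The sketch below pulls those figures out of the message text and computes the shortfall. The function name `parse_oom_message` and the regular expressions are assumptions made for illustration; the exact wording of the message varies between PyTorch versions, so treat this as a reading aid rather than a stable parser.

```python
import re

# Conversion factors to MiB, keyed by lowercase unit name.
UNIT_TO_MIB = {"kib": 1 / 1024, "mib": 1.0, "gib": 1024.0}
SIZE = r"([\d.]+) (KiB|MiB|GiB)"


def _to_mib(match):
    """Convert a (value, unit) regex match to a float number of MiB."""
    value, unit = match.groups()
    return float(value) * UNIT_TO_MIB[unit.lower()]


def parse_oom_message(message):
    """Extract requested, total, and free memory (in MiB) from an OOM message."""
    requested = re.search(rf"tried to allocate {SIZE}", message, re.IGNORECASE)
    capacity = re.search(rf"total capacity of {SIZE}", message, re.IGNORECASE)
    free = re.search(rf"of which {SIZE} is free", message, re.IGNORECASE)
    if not (requested and capacity and free):
        raise ValueError("message does not match the expected wording")

    info = {
        "requested_mib": _to_mib(requested),
        "capacity_mib": _to_mib(capacity),
        "free_mib": _to_mib(free),
    }
    # A positive shortfall means the request could not fit in free memory.
    info["shortfall_mib"] = info["requested_mib"] - info["free_mib"]
    return info


if __name__ == "__main__":
    msg = (
        "torch.cuda.OutOfMemoryError: CUDA out of memory. "
        "Tried to allocate 512.00 MiB. GPU 0 has a total capacity of "
        "79.32 GiB of which 401.56 MiB is free."
    )
    print(parse_oom_message(msg))
```

For the message quoted above, the shortfall is about 110 MiB on a GPU with roughly 79 GiB in total, which shows that nearly all of the card's memory is already in use rather than that this one allocation is unusually large.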

When training deep learning models with PyTorch on GPUs, you may encounter the dreaded "CUDA out of memory" error. Depending on the PyTorch version, it is reported as `RuntimeError: CUDA out of memory` or as `torch.cuda.OutOfMemoryError`. It arises when your program tries to allocate more GPU memory than is available, typically because the model and data together exceed the GPU's capacity, and it can occur during either training or inference.

One effective way to prevent it is gradient accumulation: process multiple smaller batches before updating the model's parameters. Other ways to avoid "CUDA out of memory" in PyTorch:

1. Reduce the batch size.
2. Transfer data to CUDA iteratively.
3. Minimize gradient retention.
4. Understand the real memory requirement.
5. Clean up memory.
6. Test with smaller data.
7. Use automatic mixed precision (AMP).
8. Use gradient accumulation.
9. Resize the input data.
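Gradient accumulation, as described above, can be sketched as follows. This is a minimal illustration, not a complete training loop: the function name `train_with_accumulation`, its parameters, and the assumption that all micro-batches have the same size and that the loss averages over the batch are all choices made for the example. It also moves one micro-batch at a time to the device and records the loss with `.item()`, so no computation graph is kept alive between steps.

```python
import torch
from torch import nn


def train_with_accumulation(model, optimizer, loss_fn, batches,
                            accumulation_steps, device="cpu"):
    """One pass over `batches`, updating weights every `accumulation_steps`."""
    model.train()
    optimizer.zero_grad(set_to_none=True)
    total_loss, seen, updates = 0.0, 0, 0

    for inputs, targets in batches:
        # Move only the current micro-batch to the device.
        inputs, targets = inputs.to(device), targets.to(device)
        loss = loss_fn(model(inputs), targets)

        # Scale so the accumulated gradient matches the average over
        # the larger effective batch (assumes a mean-reduced loss).
        (loss / accumulation_steps).backward()

        # .item() yields a Python float, detached from the autograd graph.
        total_loss += loss.item()
        seen += 1

        if seen % accumulation_steps == 0:
            optimizer.step()
            optimizer.zero_grad(set_to_none=True)
            updates += 1

    # Leftover micro-batches: step anyway; their gradient is scaled
    # slightly too small, which this sketch accepts for simplicity.
    if seen % accumulation_steps != 0:
        optimizer.step()
        optimizer.zero_grad(set_to_none=True)
        updates += 1

    return total_loss / max(seen, 1), updates


if __name__ == "__main__":
    torch.manual_seed(0)
    model = nn.Linear(3, 1)
    optimizer = torch.optim.SGD(model.parameters(), lr=0.1)
    data = [(torch.randn(2, 3), torch.randn(2, 1)) for _ in range(8)]
    print(train_with_accumulation(model, optimizer, nn.MSELoss(), data, 4))
```

With four micro-batches of two samples each, the weight update equals that of a single batch of eight, but only two samples' worth of activations need to fit in GPU memory at any one time.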


