Create a DataFrame Using PySpark

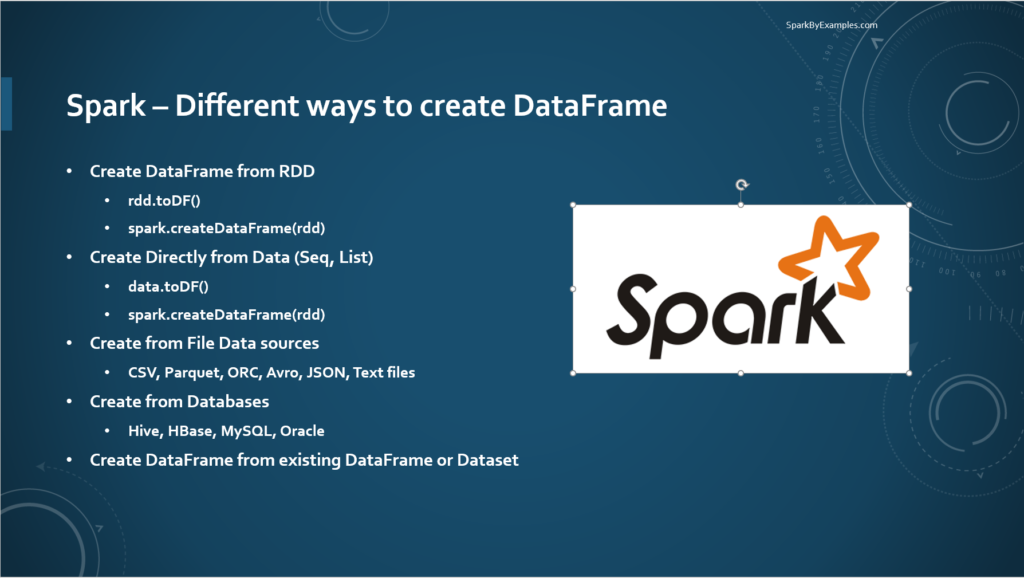

Spark Create DataFrame With Examples (Spark By Examples)

Use the `csv()` method of the `DataFrameReader` object (available as `spark.read`) to create a DataFrame from a CSV file. You can also provide options such as the delimiter to use, whether the data is quoted, date formats, schema inference, and many more. `SparkSession.createDataFrame()` creates a DataFrame from an RDD, a list, a `pandas.DataFrame`, a `numpy.ndarray`, or a `pyarrow.Table`. It is new in version 2.0.0. Version 3.4.0 added support for Spark Connect, and version 4.0.0 added support for `pyarrow.Table`.

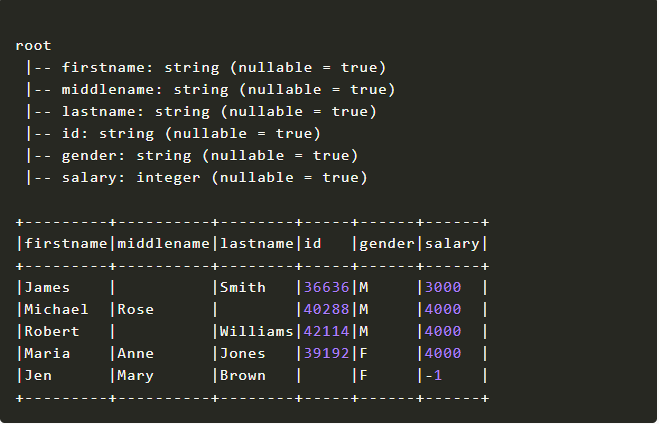

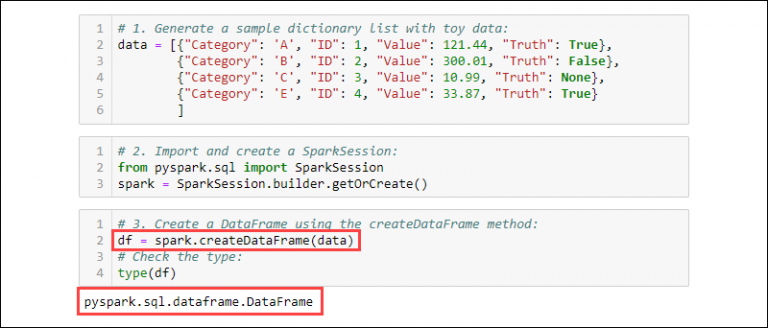

PySpark Create DataFrame From List (Spark By Examples)

`df = spark.createDataFrame([...], schema='a long, b double, c string, d date, e timestamp')` creates a PySpark DataFrame from a list of tuples and an explicit schema that defines the column names and data types. DataFrames can be created in several ways. In Scala, you can create a DataFrame from an existing RDD of case class instances with the `toDF` method; in PySpark, `toDF()` works on an RDD of tuples or `Row` objects. You can also create DataFrames from structured data files such as CSV, JSON, and Parquet using `spark.read`. There are three ways to create a DataFrame in Spark by hand:

1. Create a list and parse it as a DataFrame using the `createDataFrame()` method of the SparkSession.
2. Convert an RDD to a DataFrame using the `toDF()` method.
3. Import a file into a SparkSession as a DataFrame directly.

This section shows you how to create a Spark DataFrame and run simple operations. The examples use a small DataFrame, so you can easily see the functionality. Let's start by creating a Spark session. Some Spark runtime environments, such as the `pyspark` shell, come with a pre-instantiated session named `spark`.

PySpark Create DataFrame With Examples (Spark QAs)

A common question reads: "I am trying to manually create a PySpark DataFrame given certain data: `StructField("time epocs", DecimalType(), True)`, `StructField("lat", DecimalType(), True)`, `StructField("long", DecimalType(), True)`. This gives an error when I try to display the DataFrame, so I am not sure how to do this." Two likely culprits: `DecimalType()` with no arguments defaults to precision 10 and scale 0, so it cannot hold fractional digits such as those in latitudes, and a `DecimalType` field expects Python `decimal.Decimal` values rather than floats.

There are two common ways to create a PySpark DataFrame from an existing DataFrame:

1. Specify the columns to keep from the existing DataFrame: `df_new = df.select('team', 'points')`
2. Specify the columns to drop from the existing DataFrame: `df_new = df.drop('conference')`

PySpark offers multiple methods for generating a DataFrame, a distributed collection of data arranged into named columns. Whether you're new to Spark or looking to sharpen your skills, knowing how to create DataFrames is the first step toward manipulating data effectively with PySpark.
