CPUs vs. GPUs for Larger Machine Learning Datasets

Most machine learning tasks on large datasets call for powerful processors, but many modern CPUs are sufficient for smaller-scale machine learning applications. GPUs are more popular for machine learning projects, although high demand for them can drive up their cost.

Key differences between CPUs and GPUs

Architecture:
- CPUs have fewer cores (usually 2 to 16 on desktop and laptop parts, more on servers) optimized for sequential processing. Each core is powerful and can handle complex tasks.
- GPUs have thousands of smaller, less powerful cores designed for parallel processing. This makes GPUs suitable for work that can be divided into many smaller, independent tasks.
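To make "divided into many smaller, independent tasks" concrete, here is a minimal Python sketch of data-parallel decomposition: a sum of squares is split into independent chunks, each chunk is processed on its own, and the partial results are combined. The function names and the default chunk count are illustrative assumptions, not part of any library. Python threads are used only to show the structure; in CPython they will not speed up this pure-Python arithmetic, whereas a GPU runs thousands of such independent pieces at the same time.

```python
from concurrent.futures import ThreadPoolExecutor


def sum_of_squares_sequential(values):
    """One core walks through every element in order (serial, CPU-style loop)."""
    total = 0
    for v in values:
        total += v * v
    return total


def split_into_chunks(values, num_chunks):
    """Split a list into num_chunks contiguous slices of nearly equal length."""
    size, remainder = divmod(len(values), num_chunks)
    chunks, start = [], 0
    for i in range(num_chunks):
        # The first `remainder` chunks get one extra element.
        end = start + size + (1 if i < remainder else 0)
        chunks.append(values[start:end])
        start = end
    return chunks


def sum_of_squares_chunked(values, num_chunks=8):
    """Map each independent chunk to a partial sum, then reduce the partial sums.

    Because no chunk depends on another, the chunks can be processed
    concurrently; this is the pattern parallel hardware exploits.
    """
    chunks = split_into_chunks(values, num_chunks)
    with ThreadPoolExecutor(max_workers=num_chunks) as pool:
        partial_sums = list(pool.map(sum_of_squares_sequential, chunks))
    return sum(partial_sums)


if __name__ == "__main__":
    data = list(range(1_000))
    print(sum_of_squares_sequential(data))  # 332833500
    print(sum_of_squares_chunked(data))     # 332833500
```

Both functions return the same answer; the chunked version only changes how the work is organized, which is the property that lets a GPU spread it across many cores.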

CPU cores still matter for machine learning, especially for data preprocessing, model selection, and handling large datasets. GPUs, designed for parallel processing, excel at training large and complex models, while CPUs efficiently manage the stages before training: their fast individual cores handle sequential logic, and their multiple cores can run several independent jobs at once.

Whether to use a CPU, GPU, or TPU for your machine learning models depends on the specific requirements of your project, including the complexity of the model, the size of your data, and your computational budget.
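As a small illustration of the pre-training work described above, the sketch below standardizes a numeric feature (z-score scaling) in plain Python, the kind of per-record preprocessing that often runs on the CPU before batches are handed to a training device. Z-score scaling is just one common step chosen for illustration, and the function name `standardize` is hypothetical.

```python
import statistics


def standardize(column):
    """Rescale a feature column to mean 0 and population standard deviation 1.

    Hypothetical helper for illustration: simple per-record transforms like
    this are typical preprocessing done before data reaches a GPU.
    """
    mean = statistics.fmean(column)
    std = statistics.pstdev(column)
    if std == 0:
        # A constant column carries no information; map it to all zeros.
        return [0.0 for _ in column]
    return [(x - mean) / std for x in column]


if __name__ == "__main__":
    print(standardize([2, 4, 4, 4, 5, 5, 7, 9]))
    # [-1.5, -0.5, -0.5, -0.5, 0.0, 0.0, 1.0, 2.0]
```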

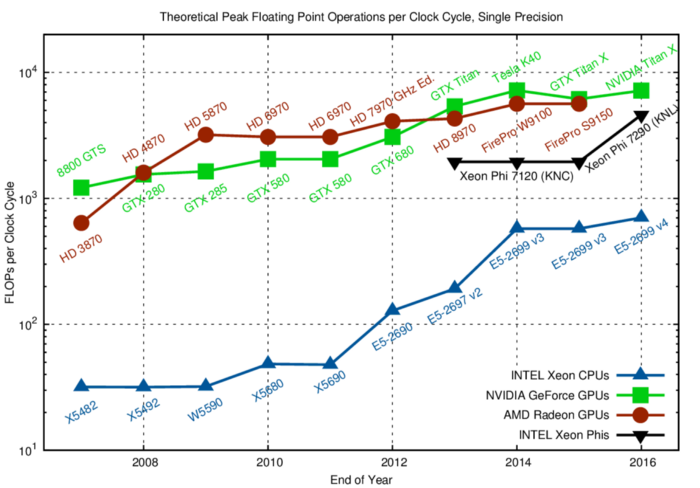

Key differences between the two include:

1. Parallelism vs. serial processing: GPUs are highly parallel, which makes them superior for deep learning, while CPUs are better for sequential tasks.
2. Memory bandwidth: GPUs have higher memory bandwidth, allowing faster data transfer during training.

The fundamental difference is that CPUs are built to perform sequential tasks quickly, while GPUs use parallel processing to compute many operations simultaneously, which gives greater speed and efficiency on workloads that can be split up. CPUs are general-purpose processors that can handle almost any type of calculation, which makes them versatile across diverse data tasks; GPUs excel at training large neural networks. In a nutshell, GPUs are well suited to compute-heavy, parallelizable workloads such as machine learning, deep learning, data analytics, and other artificial intelligence applications.
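The memory-bandwidth point can be checked with simple arithmetic: the time to stream data is roughly its size divided by the bandwidth. The sketch below assumes one billion 32-bit floats (about 4 GB) and two illustrative bandwidth figures, 50 GB/s and 1,000 GB/s. These are placeholder numbers chosen to make the ratio easy to read, not measurements of any specific CPU or GPU, and real transfers also depend on latency, caching, and access patterns.

```python
BYTES_PER_FLOAT32 = 4


def transfer_time_seconds(num_values, bandwidth_gb_per_s, bytes_per_value=BYTES_PER_FLOAT32):
    """Lower-bound time to read num_values once at the given bandwidth.

    Back-of-the-envelope only: ignores latency, caching, and overlap with
    compute. 1 GB is taken as 1e9 bytes.
    """
    total_bytes = num_values * bytes_per_value
    return total_bytes / (bandwidth_gb_per_s * 1e9)


if __name__ == "__main__":
    n = 1_000_000_000  # one billion float32 values, about 4 GB
    # Both bandwidth figures are illustrative assumptions, not hardware specs.
    for label, bw in [("illustrative CPU memory", 50), ("illustrative GPU memory", 1000)]:
        print(f"{label}: {transfer_time_seconds(n, bw):.3f} s per pass")
```

Under these assumed figures, one pass over the data takes 0.08 s versus 0.004 s, a 20x gap that repeats on every pass over the data during training.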
