Build a RAG-Based LLM App in 20 Minutes: Full Langflow Tutorial

Free Video: Build a RAG-Based LLM App in 20 Minutes (Langflow Tutorial)

Haystack is an easy-to-use open-source framework for building RAG pipelines and LLM-powered applications, and the foundation for a SaaS platform for managing their life cycle.

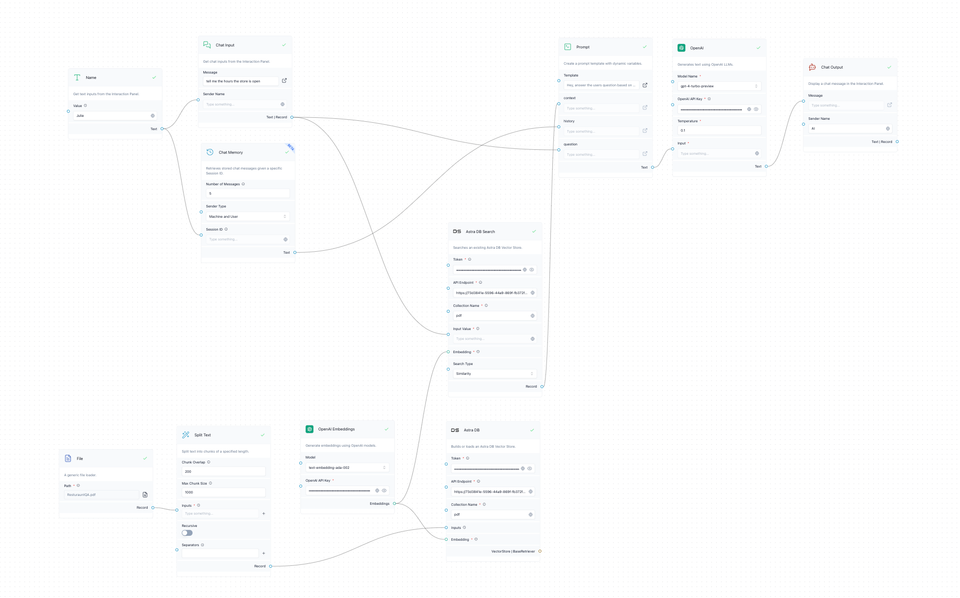

Building a RAG-Based LLM App with Langflow

Google has heated up the app-building space by rolling out a generative AI-powered, end-to-end app platform that allows users to create custom apps in minutes. Langflow's founders self-funded the company, and by 2023 the founding team had launched Langflow, which quickly gained traction as an early open-source low-code/no-code tool for creating GenAI apps. Snowflake, the Montana-based data-as-a-service and cloud storage company, announced Cortex, a fully managed service that brings the power of large language models (LLMs) into its data cloud.

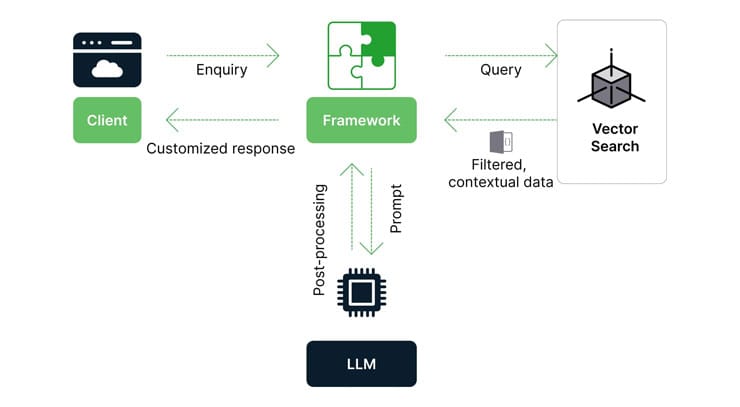

Langflow Tutorial 1: Build AI-Powered Apps Without Coding

Enter retrieval-augmented generation, or RAG. RAG is a technique used to augment an LLM with external data, such as your company documents, that provide the model with knowledge and context. Because the model answers from that retrieved data, RAG makes a generative AI model's output more customized and accurate, and it can greatly reduce anomalies such as hallucinations.
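
To make the RAG idea concrete, here is a minimal sketch in plain Python. It is not Langflow's or Haystack's API. The sample documents, the function names (`tokenize`, `retrieve`, `build_prompt`), and the bag-of-words cosine similarity used for retrieval are all assumptions chosen for illustration. Real RAG apps typically use embedding models and a vector store instead, and would send the resulting prompt to an LLM, a step left out here.

```python
import math
import re
from collections import Counter

# Hypothetical stand-in for "your company documents".
DOCUMENTS = [
    "Refunds are processed within 14 days of receiving the returned item.",
    "Support is available by email on weekdays from 9am to 5pm.",
    "Orders over 50 dollars ship free within the country.",
]


def tokenize(text):
    """Lowercase the text and split it into alphanumeric word tokens."""
    return re.findall(r"[a-z0-9]+", text.lower())


def cosine_similarity(a, b):
    """Cosine similarity between two bag-of-words Counters."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def retrieve(question, documents, top_k=1):
    """Retrieval step: return the top_k documents most similar to the question."""
    query = Counter(tokenize(question))
    ranked = sorted(
        documents,
        key=lambda doc: cosine_similarity(query, Counter(tokenize(doc))),
        reverse=True,
    )
    return ranked[:top_k]


def build_prompt(question, documents, top_k=1):
    """Augmentation step: place the retrieved text in front of the question."""
    context = "\n".join(f"- {doc}" for doc in retrieve(question, documents, top_k))
    return (
        "Answer the question using only the context below.\n"
        f"Context:\n{context}\n"
        f"Question: {question}\n"
        "Answer:"
    )


# The generation step (sending this prompt to an LLM) is intentionally omitted.
prompt = build_prompt("How long do refunds take?", DOCUMENTS)
print(prompt)
```

The design point is that the model itself is unchanged. Only the prompt is enriched with retrieved text, which is why RAG can ground answers in documents the model was never trained on.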

How to Build Scalable RAG-Based LLM Applications

Build an LLM RAG Chatbot with LangChain
