Barycentrics Solid Wireframe Shader Opengl Khronos Forums

Etching Shader - OpenGL - Khronos Forums

`vec3 remap = smoothstep(vec3(0.0), dbarycoord, barycentrics); float closestedge = min(min(remap.x, remap.y), remap.z); albedo = vec3(closestedge);` When I apply the shader to an object, I see something weird: some of the surfaces (mostly top and bottom) appear black. What could be the reason? Is it because the wrong normals are set on those surfaces?

I'm working on drawing a terrain in WebGL. The problem is that I'm using only 4 vertices to draw a single quad, with indices to share vertices. So I can't upload unique barycentric coordinates for each vertex, because the vertices are shared. Here's a picture that shows the problem more clearly.
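To make the quoted fragment code easier to reason about, here is a minimal CPU-side sketch of the same arithmetic in Python. It assumes `dbarycoord` holds a positive per-component edge width (for example something derived from screen-space derivatives); the post does not say how it is computed, so that is an assumption, and the helper names `smoothstep` and `closest_edge` are only illustrative.

```python
def smoothstep(edge0, edge1, x):
    """Scalar version of GLSL smoothstep; assumes edge0 < edge1."""
    t = min(max((x - edge0) / (edge1 - edge0), 0.0), 1.0)
    return t * t * (3.0 - 2.0 * t)


def closest_edge(barycentrics, dbarycoord):
    """Mirror of the quoted snippet: remap each component, then take the minimum.

    barycentrics: the three interpolated weights at a fragment.
    dbarycoord:   assumed per-component edge width (must be > 0).
    Returns 0.0 on an edge (dark) and 1.0 well inside the triangle (bright).
    """
    remap = [smoothstep(0.0, d, b) for b, d in zip(barycentrics, dbarycoord)]
    return min(remap)


width = (0.05, 0.05, 0.05)
center = closest_edge((1.0 / 3.0, 1.0 / 3.0, 1.0 / 3.0), width)  # interior fragment
on_edge = closest_edge((0.0, 0.5, 0.5), width)                   # fragment on an edge
no_data = closest_edge((0.0, 0.0, 0.0), width)                   # attribute never set
```

Note that a fragment whose barycentrics are all zero evaluates to 0, the same value as an edge. If some faces never receive valid barycentric data, they would therefore render black. That is one possible explanation for the symptom described, not a confirmed diagnosis.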

Barycentrics Solid Wireframe Shader - OpenGL - Khronos Forums

This module uses barycentric coordinates to draw a wireframe on a solid triangular mesh. Alternatively, it will simply draw grid lines at integer component values of a float- or vector-valued variable that you give it.

We have a wireframe shader that calculates the barycentric coordinates in the geometry program and outputs them into the triangle stream. In that shader, `inversew` counteracts the effect of perspective-correct interpolation, so that the lines look the same thickness regardless of their depth in the scene.

I’d be interested to see evidence that barycentric coordinates are “sitting around anyways” in fragment-shader-accessible memory, across multiple iterations of various hardware. Interpolation is handled by the rasterizer, not by the fragment shader.

Some time ago I learned about a cool trick that uses barycentric coordinates to draw wireframes. Here's what it looks like in practice. What does it take to draw them? Some modifications to the vertex and fragment shaders, another vertex attribute, and a corresponding barycentric coordinate generated for each point.
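The "another vertex attribute" step can be sketched on the CPU side. The Python below expands an indexed triangle list into unshared vertices so that every triangle corner gets its own barycentric value. This is one common workaround for the shared-vertex problem and not necessarily what any of the posters used. The function name `unindex_with_barycentrics` and the data layout are hypothetical.

```python
# One barycentric basis vector per triangle corner.
BARY = ((1.0, 0.0, 0.0), (0.0, 1.0, 0.0), (0.0, 0.0, 1.0))


def unindex_with_barycentrics(positions, indices):
    """Expand an indexed triangle list so no vertex is shared.

    positions: list of vertex positions (any tuple type).
    indices:   flat list of vertex indices, three per triangle.
    Returns (expanded_positions, barycentric_attribute), both with
    len(indices) entries, ready to upload as two vertex attributes.
    """
    if len(indices) % 3 != 0:
        raise ValueError("index count must be a multiple of 3")
    out_positions, out_bary = [], []
    for corner, vertex_index in enumerate(indices):
        out_positions.append(positions[vertex_index])
        out_bary.append(BARY[corner % 3])  # corner 0, 1 or 2 of its triangle
    return out_positions, out_bary


# A quad drawn from 4 shared vertices and 6 indices becomes 6 unique vertices.
quad_positions = [(0.0, 0.0), (1.0, 0.0), (0.0, 1.0), (1.0, 1.0)]
quad_indices = [0, 1, 2, 2, 1, 3]
flat_positions, flat_bary = unindex_with_barycentrics(quad_positions, quad_indices)
```

The trade-off is memory: the expanded mesh is drawn without an index buffer and stores each shared position once per triangle that uses it.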

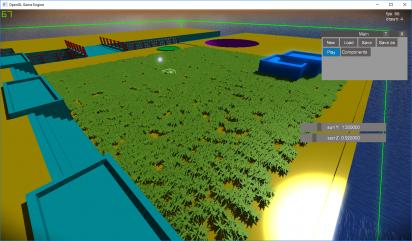

Grass Shader - OpenGL: Advanced Coding - Khronos Forums

The pixel shader accesses those parameters through flat and `__explicitInterpAMD` interpolators to establish the order of the native barycentrics available through `gl_BaryCoordSmoothAMD`. Performance matches the "speed of light": there is no measurable overhead from accessing barycentrics with this method.

`GL_EXT_fragment_shader_barycentric` is a more vendor-neutral version of both NVIDIA’s `GL_NV_fragment_shader_barycentric` extension and AMD’s `GL_AMD_shader_explicit_vertex_parameter` extension; it allows fragment shaders to directly access the barycentric weights of the current sample.

You could generate this data in a geometry shader: take your 3 vertices and emit (1,0,0), (0,1,0) and (0,0,1) as barycentric coordinates, then emit all 3 colors to each generated vertex.

It appears to be the “perfect” way of doing wireframe-over-solid rendering. It uses both a vertex and a fragment shader. Optionally, a geometry shader can be used to speed things up and handle a few nasty exceptional cases. NVIDIA has a demo that demonstrates the geometry shader approach.
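The geometry-shader suggestion can be illustrated with a small Python emulation of what such a shader would emit and what the fragment stage could then do with it. This is only a sketch of the data flow, not shader code; `emit_triangle` and `shade` are hypothetical names, and passing the same color triple to every vertex stands in for what would presumably be non-interpolated (flat) outputs in GLSL.

```python
def emit_triangle(colors):
    """Emulate the geometry stage for one triangle.

    colors: the three per-vertex RGB colors of the input triangle.
    Each emitted vertex carries its own barycentric basis vector
    plus all three colors, unchanged.
    """
    basis = ((1.0, 0.0, 0.0), (0.0, 1.0, 0.0), (0.0, 0.0, 1.0))
    return [{"bary": b, "colors": tuple(colors)} for b in basis]


def shade(bary, colors):
    """Emulate the fragment stage: blend the three colors by barycentric weight."""
    return tuple(
        sum(weight * color[channel] for weight, color in zip(bary, colors))
        for channel in range(3)
    )


tri_colors = [(1.0, 0.0, 0.0), (0.0, 1.0, 0.0), (0.0, 0.0, 1.0)]
emitted = emit_triangle(tri_colors)

# At a vertex the blend returns that vertex's color; halfway along the
# edge between vertices 0 and 1 it returns their average.
at_vertex0 = shade(emitted[0]["bary"], emitted[0]["colors"])
mid_edge = shade((0.5, 0.5, 0.0), emitted[0]["colors"])
```

Because every fragment sees all three colors and its own weights, it is free to replace the plain weighted sum in `shade` with any other interpolation rule.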

