Append PySpark DataFrame Without Column Names (Append DataFrame Without Header)

Learn easy steps to append a PySpark DataFrame without column names, explained step by step with an example of transactional data.

A common related question: "I want to read a CSV file that has no column names in its first row. How can I read it and assign my own column names at the same time? For now, I just rename the default columns after reading: `df = spark.read.csv("user_click_seq.csv", header=False)` followed by `df = df.withColumnRenamed("_c0", "member_srl")`."

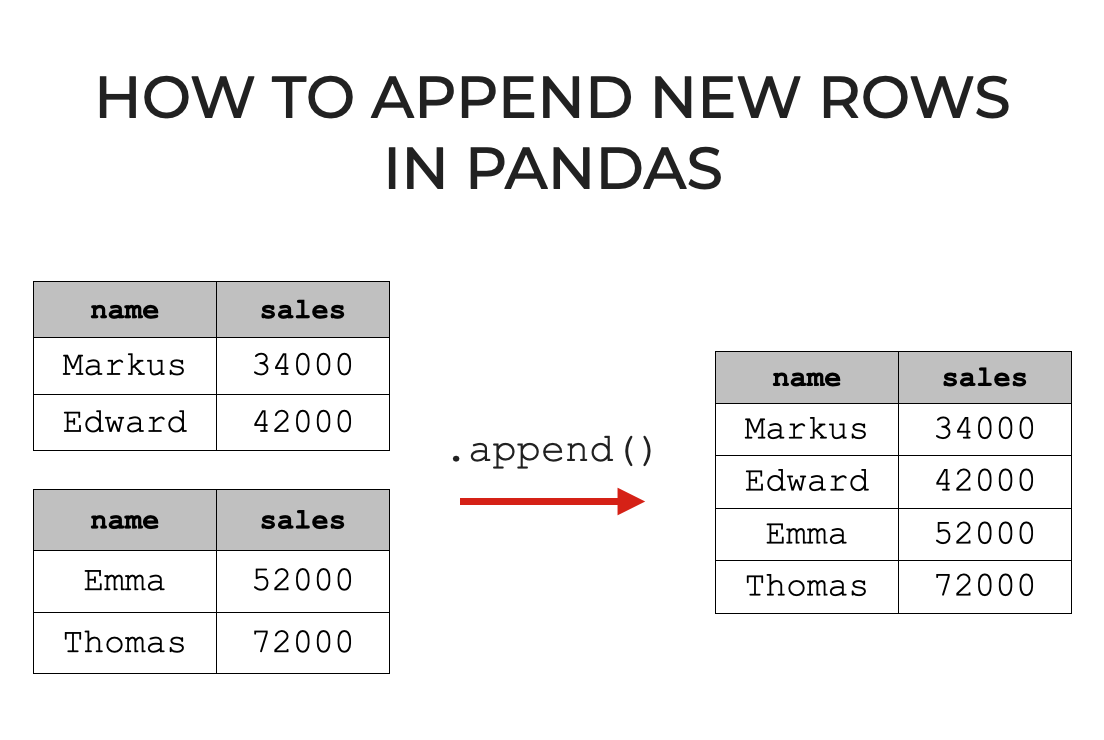

Easy steps to append a DataFrame without a header in PySpark, explained step by step with an example.

In this article, we are going to see how to append data to an empty DataFrame in PySpark. Method 1: create an empty DataFrame and union it with a non-empty DataFrame that has the same schema.

Learn how to use the `writeTo()` function in PySpark to save, append, or overwrite DataFrames to managed or external tables using Delta Lake or Hive.

In Spark 3.1, you can easily achieve this using `unionByName()` to concatenate DataFrames. Syntax: `dataframe_1.unionByName(dataframe_2)`. The `functools` module provides functions for working with other functions and callable objects, to use or extend them without completely rewriting them; for example, `functools.reduce` can apply a union across a list of DataFrames.

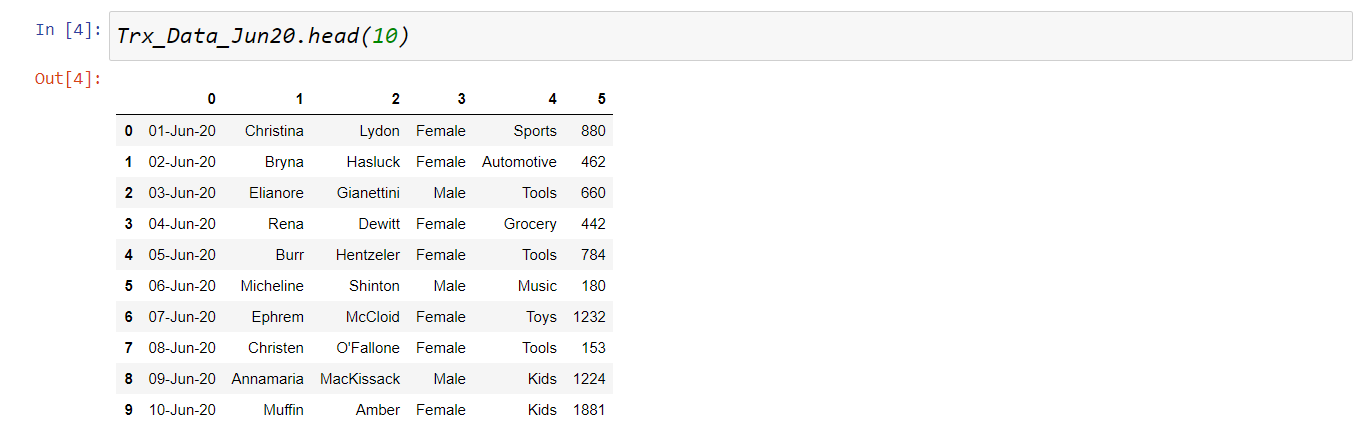

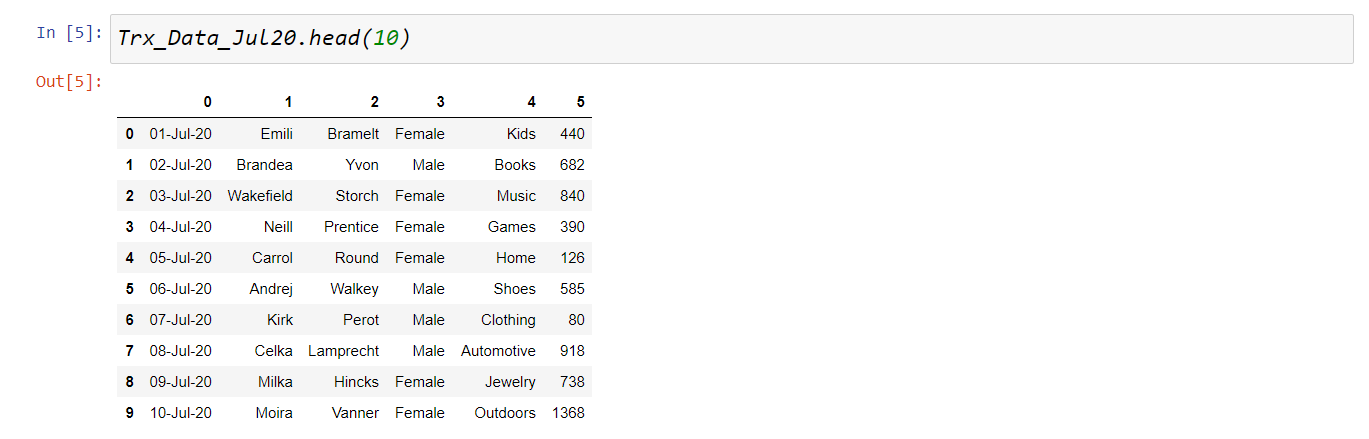

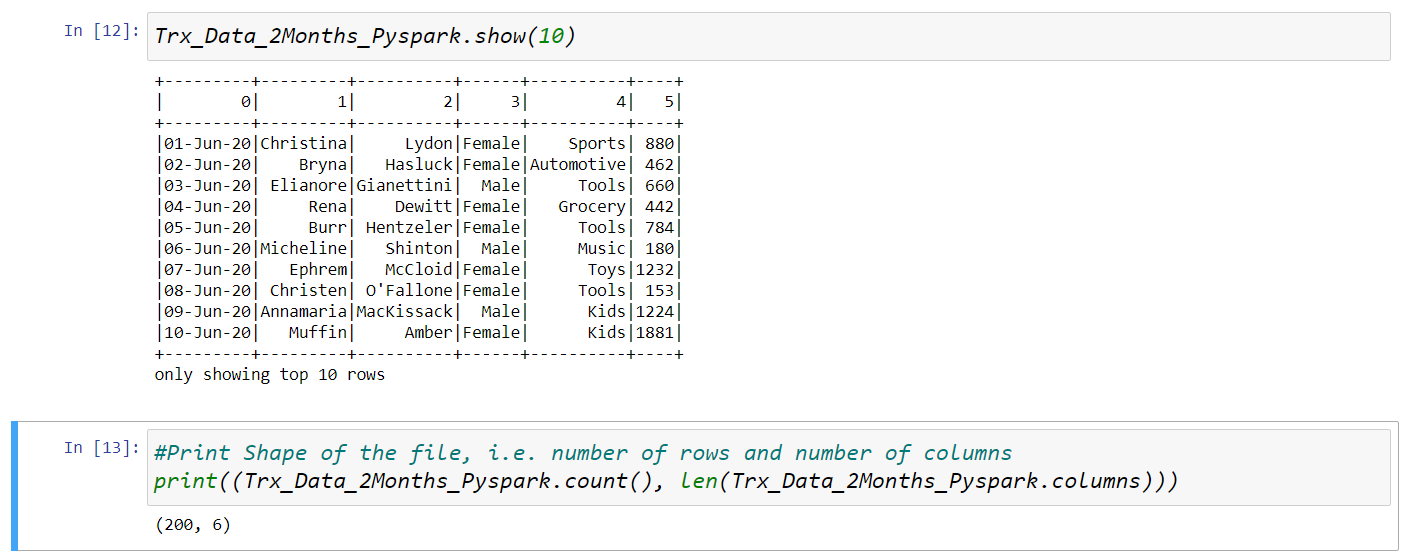

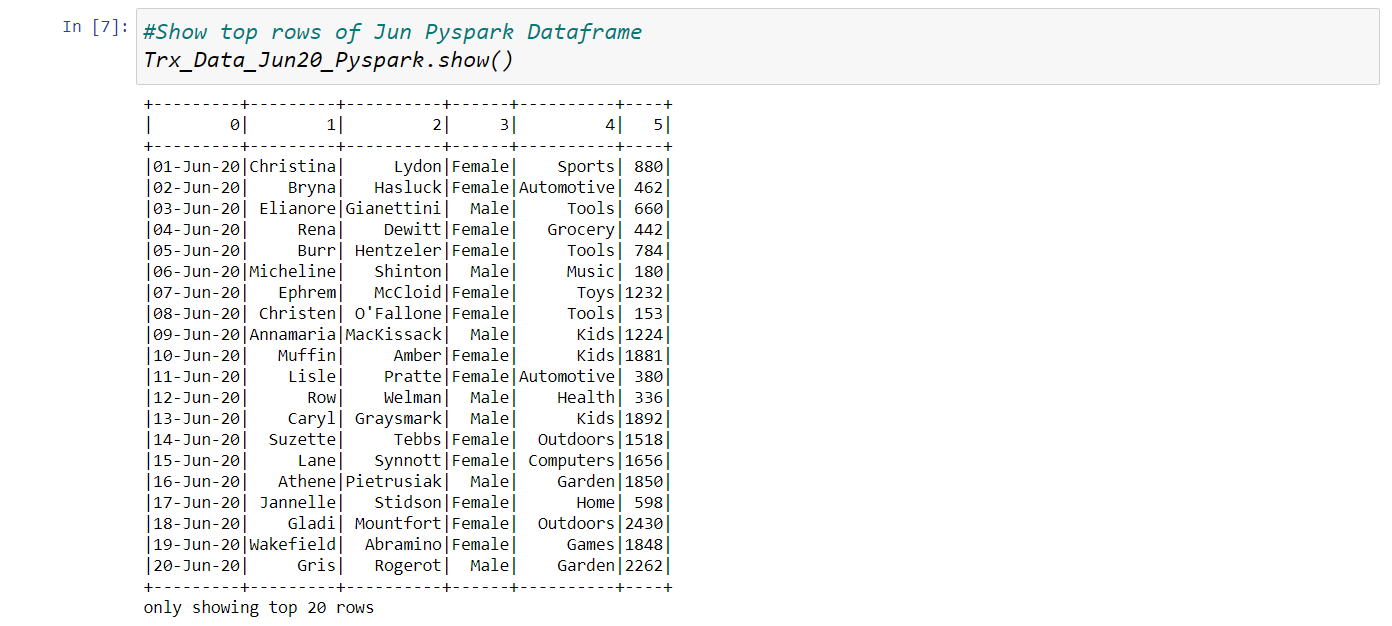

PySpark has functions available to append multiple DataFrames together. This article discusses in detail how to append multiple DataFrames in PySpark. John has multiple transaction tables available: four months of transactional data for April, May, June, and July. Each monthly DataFrame has 6 columns, in the same order and the same format.

When appending without creating duplicates, there are 2 solutions. Either compare the current data with the previous data and write only the rows that do not already exist, using a join against `df_old` with `how="left_anti"` and a proper join condition in `on`. Or concatenate the old and new data, deduplicate with `df.unionAll(df_old).distinct()`, and then rewrite the result.

Try creating an empty DataFrame with the schema of the result DataFrame, then apply `unionAll`. "I have a task of combining multiple Spark DataFrames generated in a for loop, so I thought to create an empty DataFrame before running the loop and then combine them with `unionAll`."

"I am creating an empty DataFrame and later trying to append another DataFrame to it. In fact, I want to append many DataFrames to the initially empty DataFrame dynamically, depending on the number of RDDs coming in. The `union()` function works fine if I assign the result to a third DataFrame." This is expected, because DataFrames are immutable and `union()` returns a new DataFrame rather than modifying the original.

