Apache Spark SQL: Loading and Saving Data Using the JSON and CSV Formats

Explain Spark SQL JSON Functions (ProjectPro)

Spark can load both JSON and CSV files through its data source API. Full example code for each is in `examples/src/main/python/sql/datasource.py` in the Spark repo. Any extra options set for reading are also used during the write operation.

Hi bluephantom, I tried your suggestion as follows: `df = (spark.read.format("csv").schema(myschema).option("multiLine", True).load(datapath))`, but I got the same table.
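The CSV read with an explicit schema and the `multiLine` option, plus a write that reuses an option, can be sketched as below. This is a minimal sketch assuming PySpark. The helper names (`write_sample_csv`, `read_multiline_csv`, `save_as_csv`) and the columns `id` and `note` are hypothetical, and the schema is given as a DDL string rather than the `myschema` object from the forum post.

```python
import csv
import os
import tempfile


def write_sample_csv(path):
    """Write a small CSV whose second record has a quoted field spanning two lines."""
    rows = [["id", "note"], [1, "single line"], [2, "first line\nsecond line"]]
    with open(path, "w", newline="", encoding="utf-8") as f:
        csv.writer(f).writerows(rows)
    return len(rows) - 1  # number of data records, excluding the header


def read_multiline_csv(spark, path):
    """Read the CSV with an explicit schema; multiLine allows newlines inside quoted fields."""
    return (
        spark.read.format("csv")          # the format name is a string
        .schema("id INT, note STRING")    # DDL-style schema (hypothetical columns)
        .option("header", True)
        .option("multiLine", True)        # Python's True, not true
        .load(path)
    )


def save_as_csv(df, out_dir):
    """Options set on the writer apply during the write operation as well."""
    df.write.format("csv").option("header", True).mode("overwrite").save(out_dir)


sample_path = os.path.join(tempfile.mkdtemp(), "sample.csv")
record_count = write_sample_csv(sample_path)
with open(sample_path, encoding="utf-8") as f:
    physical_lines = len(f.read().splitlines())
```

The sample file holds two records but four physical lines, because one quoted field contains a newline. With an active `SparkSession` named `spark`, `read_multiline_csv(spark, sample_path)` would be expected to return one row per record. Without `multiLine`, the embedded newline is typically treated as a record boundary.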

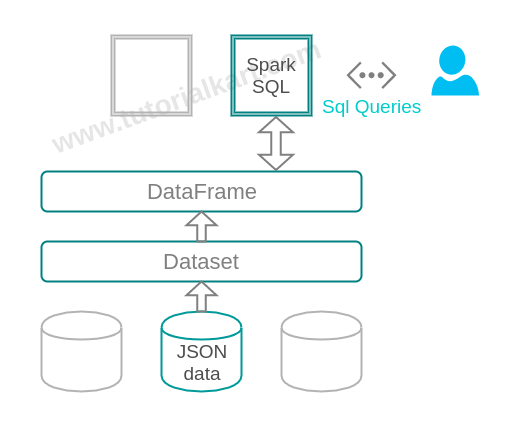

Loading a JSON File Using Spark Scala

This article explores how to work with JSON and semi-structured data in Apache Spark through hands-on examples, with a focus on handling nested JSON and using Spark's JSON functions.

Use `spark.read.format()` to specify the format of the data you want to load. Spark supports the major data storage formats, including CSV, JSON, and Parquet. Call `.printSchema()` on the loaded DataFrame to see its schema, and use the DataFrame's `write` attribute to save it.

To read JSON files into a PySpark DataFrame, use the `json()` method of the `DataFrameReader` class. This method parses the JSON files and infers the schema automatically, which makes it convenient for structured and semi-structured data.
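The load, inspect, and save steps described above might look like the following sketch, assuming PySpark. The sample records, the `address` nesting, and the helper names `write_json_lines` and `load_inspect_save` are hypothetical and only serve the illustration.

```python
import json
import os
import tempfile

# Hypothetical sample records; "address" is a nested object.
RECORDS = [
    {"name": "Ada", "address": {"city": "London", "zip": "N1"}},
    {"name": "Lin", "address": {"city": "Taipei", "zip": "100"}},
]


def write_json_lines(path, records):
    """Write one JSON object per line, the layout Spark's JSON reader expects by default."""
    with open(path, "w", encoding="utf-8") as f:
        for record in records:
            f.write(json.dumps(record) + "\n")


def load_inspect_save(spark, src_path, dst_path):
    """Load JSON, print the inferred schema, and save a flattened view as Parquet."""
    df = spark.read.format("json").load(src_path)
    df.printSchema()  # nested objects appear as struct columns
    flat = df.select("name", "address.city")  # dot notation reaches into the struct
    flat.write.format("parquet").mode("overwrite").save(dst_path)
    return flat


src_path = os.path.join(tempfile.mkdtemp(), "people.json")
write_json_lines(src_path, RECORDS)
with open(src_path, encoding="utf-8") as f:
    lines = f.read().splitlines()
```

Given an active `SparkSession` named `spark`, calling `load_inspect_save(spark, src_path, some_output_dir)` would print the inferred schema and write the two selected columns. Parquet is used as the output format here only as an example; any format the writer supports could be substituted.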

Scala: Loading JSON into Spark SQL (Stack Overflow)

Spark SQL can automatically infer the schema of a JSON dataset and load it as a `Dataset[Row]`. This conversion can be done using `SparkSession.read.json()` on either a `Dataset[String]` or a JSON file. Note that the file offered as a JSON file is not a typical JSON file: by default, each line must contain a separate, self-contained valid JSON object. For a regular multi-line JSON file, set the `multiLine` option to `true`.

JSON data can be loaded in several ways, from basic techniques such as `spark.read.json()` to more advanced ones such as loading with a custom schema or parsing JSON strings.
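The difference between the line-oriented layout Spark reads by default and a typical JSON document can be illustrated with the sketch below, assuming PySpark. The helpers `is_json_lines`, `read_json_file`, and `read_json_strings` are hypothetical. `is_json_lines` is only a heuristic and only works on local files. Where the Scala API takes a `Dataset[String]`, this sketch passes an RDD of strings, which the PySpark reader accepts.

```python
import json
import os
import tempfile

RECORDS = [{"id": 1, "tag": "a"}, {"id": 2, "tag": "b"}]


def is_json_lines(path):
    """Return True if every non-empty line parses as a standalone JSON value."""
    with open(path, encoding="utf-8") as f:
        for line in f:
            if not line.strip():
                continue
            try:
                json.loads(line)
            except json.JSONDecodeError:
                return False
    return True


def read_json_file(spark, path):
    """Use the default reader for line-oriented JSON, multiLine for a regular document."""
    reader = spark.read
    if not is_json_lines(path):
        reader = reader.option("multiLine", True)
    return reader.json(path)


def read_json_strings(spark, json_strings):
    """Infer a schema from in-memory JSON strings via an RDD of strings."""
    return spark.read.json(spark.sparkContext.parallelize(json_strings))


workdir = tempfile.mkdtemp()
lines_path = os.path.join(workdir, "records.jsonl")
array_path = os.path.join(workdir, "records.json")
with open(lines_path, "w", encoding="utf-8") as f:
    f.writelines(json.dumps(r) + "\n" for r in RECORDS)  # one object per line
with open(array_path, "w", encoding="utf-8") as f:
    json.dump(RECORDS, f, indent=2)  # a typical pretty-printed JSON array
```

The pretty-printed array fails the per-line check because its first line is a lone `[`, so `read_json_file` would switch on `multiLine` for it.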

How to Load Data from a JSON File and Execute a SQL Query in Spark SQL

In Apache Spark, a JSON file can be read into a DataFrame with either `spark.read.json("path")` or `spark.read.format("json").load("path")`. The two calls are equivalent in functionality and differ only in syntax.
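Loading a JSON file and then querying it with SQL, as the heading describes, could be sketched as follows, assuming PySpark. The view name `people`, the sample data, the query, and the helper `adults_from_json` are all hypothetical choices for illustration.

```python
import json
import os
import tempfile

# Hypothetical sample data.
PEOPLE = [
    {"name": "Ada", "age": 36},
    {"name": "Tim", "age": 12},
    {"name": "Lin", "age": 29},
]

ADULTS_SQL = "SELECT name FROM people WHERE age >= 18 ORDER BY name"


def adults_from_json(spark, path):
    """Load a JSON file, expose it as a temporary view, and query it with SQL."""
    df = spark.read.json(path)  # same result as spark.read.format("json").load(path)
    df.createOrReplaceTempView("people")
    return [row["name"] for row in spark.sql(ADULTS_SQL).collect()]


people_path = os.path.join(tempfile.mkdtemp(), "people.json")
with open(people_path, "w", encoding="utf-8") as f:
    f.writelines(json.dumps(p) + "\n" for p in PEOPLE)  # one object per line

# Plain-Python reference answer that the SQL result can be compared against.
expected_adults = sorted(p["name"] for p in PEOPLE if p["age"] >= 18)
```

With an active `SparkSession` named `spark`, `adults_from_json(spark, people_path)` should agree with `expected_adults`. The temporary view lives only for the lifetime of the session.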
